Phi3 14B Instruct - Model Details

Phi3 14B Instruct is a large language model developed by Microsoft with 14 billion parameters. It is released under the MIT license and is tuned for instruction-following tasks. The model emphasizes instruction adherence, safety, and reasoning, which makes it suitable for applications that require precise and secure interactions.

Description of Phi3 14B Instruct

Phi3 14B Instruct is a 14B parameter large language model designed for efficiency and performance, trained on the Phi-3 datasets, which combine synthetic data with filtered publicly available web content. It is offered with context lengths of 4K and 128K tokens. Post-training applies supervised fine-tuning followed by direct preference optimization to improve instruction following and safety. The model targets reasoning, code generation, math, and logic tasks in both commercial and research applications. It is intended for memory- and compute-constrained environments and for latency-sensitive scenarios where strong reasoning is still required.

Parameters & Context Length of Phi3 14B Instruct

With 14B parameters, Phi3 14B Instruct sits in the mid-scale range of open-source LLMs, balancing capability against resource cost. Its 128K-token context length lets it process very long inputs, which suits applications that must take in large amounts of text, though long contexts demand significantly more memory and compute. Shorter prompts keep the model usable in environments with tighter memory or latency budgets.

- Parameter Size: 14B

- Context Length: 128K tokens
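The resource cost of long contexts comes largely from the key-value cache, which grows linearly with the number of tokens. The following is a rough sketch of that arithmetic, not a measurement. The layer count, key-value head count, and head dimension are illustrative assumptions that are not stated in this document and should be checked against the model's published configuration; `kv_cache_bytes` is a hypothetical helper name.

```python
def kv_cache_bytes(num_tokens, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Estimate bytes needed to cache keys and values for num_tokens tokens."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


# Illustrative architecture values (assumptions, not taken from this document).
ASSUMED_LAYERS = 40
ASSUMED_KV_HEADS = 10
ASSUMED_HEAD_DIM = 128

for label, tokens in [("4K", 4 * 1024), ("128K", 128 * 1024)]:
    size = kv_cache_bytes(tokens, ASSUMED_LAYERS, ASSUMED_KV_HEADS, ASSUMED_HEAD_DIM)
    # bytes_per_value=2 corresponds to a 16-bit cache.
    print(f"{label} context: {size / 1024**3:.2f} GiB")
```

Under these assumed values, a full 128K-token context needs about 32 times the cache memory of a 4K context, which is why the long-context setting is only worth enabling when the input actually requires it.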

Possible Intended Uses of Phi3 14B Instruct

Phi3 14B Instruct could be deployed in memory/compute-constrained environments, where its 14B parameter size and optimized design may keep resource use manageable. It might also suit latency-bound scenarios that need quick responses in time-sensitive applications. Its reasoning capabilities, particularly in code, math, and logic, suggest possible uses in analytical problem-solving. Scenarios that depend on extended context handling are another candidate. All of these remain possibilities that need thorough evaluation against specific requirements before adoption.

- memory/compute-constrained environments

- latency-bound scenarios

- strong reasoning (especially code, math, and logic)

Quantized Versions & Hardware Requirements of Phi3 14B Instruct

The q4 quantization of Phi3 14B Instruct trades a small amount of precision for a much lower memory footprint. Running it is expected to need a GPU with at least 20GB VRAM (e.g., RTX 3090) and 32GB of system memory, along with cooling and a power supply adequate for that GPU. Lower-bit quantizations reduce these requirements further at some cost in accuracy, while fp16 needs considerably more memory.

- Available quantizations: fp16, q2, q3, q4, q5, q6, q8
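To see how the listed quantizations compare, the weight footprint can be approximated as parameters times bits per weight. This is a minimal sketch under stated assumptions: the bit widths are nominal (real quantized file formats typically store slightly more than the nominal bits per weight), and the estimate covers weights only, so the context cache, activations, and runtime overhead come on top. `weight_gb` is a hypothetical helper name.

```python
def weight_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 14e9  # 14B parameters, as stated in this document

# Nominal bits per weight for each listed quantization (an assumption).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

for name, bits in NOMINAL_BITS.items():
    print(f"{name}: ~{weight_gb(NUM_PARAMS, bits):.1f} GB of weights")
```

By this estimate the q4 weights alone take roughly 7 GB, so the 20GB VRAM figure quoted above leaves room for the context cache and runtime overhead, while fp16 at roughly 28 GB of weights would not fit on a single 24GB card.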

Conclusion

Phi3 14B Instruct is a 14B parameter model with a context length of up to 128K tokens, designed for memory/compute-constrained environments, latency-bound scenarios, and reasoning tasks in code, math, and logic. It is available in multiple quantizations; the q4 version calls for a GPU with at least 20GB VRAM.

References

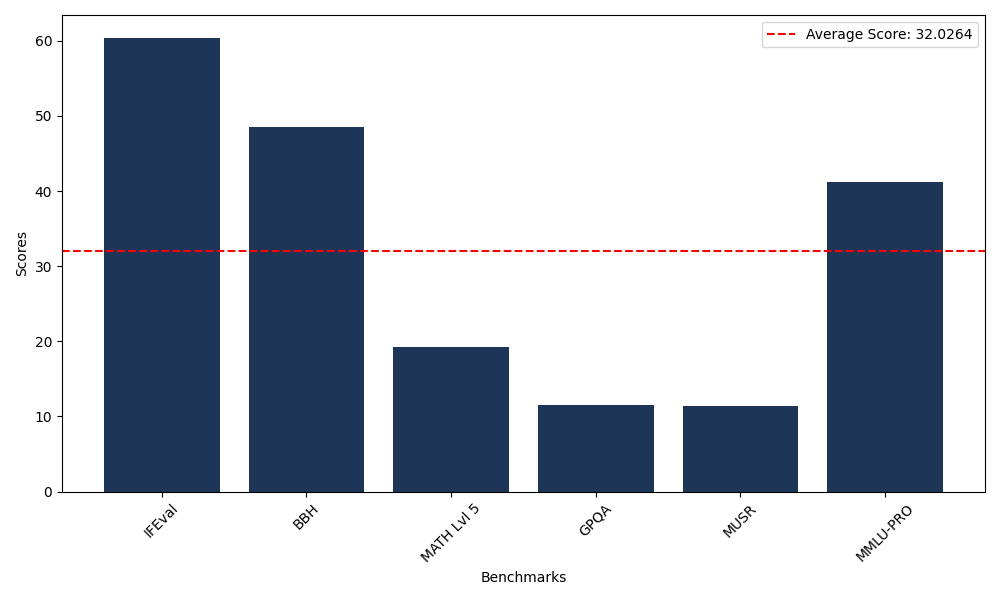

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 60.40 |
| BIG-Bench Hard (BBH) | 48.46 |
| MATH, Level 5 problems (MATH Lvl 5) | 19.18 |
| Graduate-Level Google-Proof Q&A (GPQA) | 11.52 |
| Multistep Soft Reasoning (MuSR) | 11.35 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 41.24 |
