Phi3 3.8B Instruct - Model Details

Phi3 3.8B Instruct is a large language model developed by Microsoft with 3.8 billion parameters. It is released under the Microsoft license and is designed for instruction-following tasks. The model emphasizes instruction adherence, safety, and reasoning, making it suitable for applications requiring precise and secure interactions.

Description of Phi3 3.8B Instruct

Phi3 3.8B Instruct is a lightweight, state-of-the-art open model from the Phi-3 family with 3.8B parameters. It is trained on high-quality synthetic data and filtered public data, with a focus on reasoning and instruction following. The model supports context lengths of 4K and 128K tokens and undergoes post-training for safety and alignment. It performs well on math and logic tasks, suits low-resource environments, and is optimized for chat formats. It is available in multiple formats, including HF, ONNX, and GGUF, and balances performance and efficiency for diverse applications.
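To make "optimized for chat formats" concrete, here is a minimal sketch of how a conversation might be rendered into a single prompt string. The special tokens (`<|system|>`, `<|user|>`, `<|assistant|>`, `<|end|>`) are an assumption based on the publicly documented Phi-3 chat template and are not stated on this page; the helper name `build_phi3_prompt` is hypothetical. Check the tokenizer's own chat template before relying on this layout.

```python
def build_phi3_prompt(messages):
    """Render a list of {"role", "content"} dicts into a Phi-3-style prompt.

    Assumption: each turn is wrapped as "<|role|>\\ncontent<|end|>\\n".
    """
    allowed_roles = ("system", "user", "assistant")
    parts = []
    for message in messages:
        role = message["role"]
        if role not in allowed_roles:
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<|{role}|>\n{message['content']}<|end|>\n")
    # A trailing assistant tag cues the model to write the next reply.
    parts.append("<|assistant|>\n")
    return "".join(parts)


if __name__ == "__main__":
    conversation = [{"role": "user", "content": "What is 12 * 7?"}]
    print(build_phi3_prompt(conversation))
```

In practice, most runtimes apply the template automatically, so a hand-built string like this is mainly useful for debugging what the model actually receives.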

Parameters & Context Length of Phi3 3.8B Instruct

With 3.8B parameters, Phi3 3.8B Instruct falls into the small model category, which makes it fast and resource-efficient for tasks that prioritize simplicity and speed. Its 128K context length falls into the very long context range, so it can handle extensive texts, although processing inputs of that length demands significant computational resources. This combination lets the model balance efficiency with capability, supporting complex reasoning while remaining adaptable to diverse applications.

- Parameter Size: 3.8b

- Context Length: 128k
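The resource cost of a very long context can be illustrated with a rough key-value cache estimate. The sketch below assumes a standard transformer decoder with full multi-head attention and 16-bit cache values; the layer count (32) and hidden size (3072) are assumptions taken from public descriptions of the Phi-3 mini architecture, not from this page, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_tokens, num_layers=32, hidden_size=3072, bytes_per_value=2):
    """Estimate KV-cache size for one sequence.

    Each layer stores one key and one value vector of `hidden_size`
    elements per token, hence the leading factor of 2.
    The default layer count and hidden size are assumptions.
    """
    return 2 * num_layers * hidden_size * bytes_per_value * num_tokens


if __name__ == "__main__":
    for label, tokens in (("4K", 4 * 1024), ("128K", 128 * 1024)):
        gib = kv_cache_bytes(tokens) / 1024**3
        print(f"{label} context: about {gib:.1f} GiB of KV cache")
```

Under these assumptions the cache grows linearly with sequence length, so a full 128K-token input needs 32 times the cache of a 4K-token input. Runtimes that quantize or offload the cache would lower these numbers.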

Possible Intended Uses of Phi3 3.8B Instruct

Phi3 3.8B Instruct is designed for general-purpose AI systems in memory- or compute-constrained environments, where possible applications might include lightweight task automation, embedded systems, or edge devices. Its suitability for latency-bound scenarios suggests possible uses in real-time interaction systems that require quick responses, such as chatbots or interactive tools. The model's strong reasoning capabilities in math, logic, and common sense point to possible applications in educational tools, problem-solving interfaces, or data analysis tasks. However, these possible uses need thorough investigation to ensure alignment with specific requirements and constraints.

- general purpose AI systems in memory/compute constrained environments

- latency-bound scenarios requiring quick responses

- applications needing strong reasoning capabilities (math, logic, and common sense)

Possible Applications of Phi3 3.8B Instruct

Phi3 3.8B Instruct could support applications such as educational tools that require reasoning for problem-solving, customer service chatbots that need quick and accurate responses, data analysis interfaces for non-sensitive tasks, and content creation systems that generate structured text. These possible uses might benefit from its efficiency in constrained environments and its strong reasoning capabilities. However, each possible application must be thoroughly evaluated to ensure alignment with specific needs and constraints.

- educational tools requiring reasoning for problem-solving

- customer service chatbots needing quick and accurate responses

- data analysis interfaces for non-sensitive tasks

- content creation systems for generating structured text

Quantized Versions & Hardware Requirements of Phi3 3.8B Instruct

Phi3 3.8B Instruct with q4 quantization requires a GPU with roughly 8GB to 16GB of VRAM for efficient operation, making it suitable for systems with moderate hardware capabilities. This version balances precision and performance, allowing possible applications in environments where resource constraints are a priority. Additional considerations include 32GB or more of system RAM and adequate cooling to ensure stability.

Phi3 3.8B Instruct is available in fp16, q2, q3, q4, q5, q6, and q8.
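As a rough guide to how the listed quantization levels compare, the sketch below estimates the storage needed for the weights alone as parameters × bits per weight ÷ 8. The nominal bit widths are an assumption: real quantized files also store scaling metadata and often keep some tensors at higher precision, so actual sizes are typically larger. Runtime memory for activations and the KV cache is not included, and `weight_size_gb` is a hypothetical helper.

```python
# Nominal bits per weight for each listed format (an assumption; real
# quantization schemes add per-block scale data on top of this).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

NUM_PARAMS = 3.8e9  # parameter count stated for Phi3 3.8B Instruct


def weight_size_gb(num_params, bits_per_weight):
    """Return the approximate weight storage in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name}: roughly {weight_size_gb(NUM_PARAMS, bits):.2f} GB of weights")
```

The estimate covers only the weights, so it is a lower bound on memory use rather than a hardware requirement.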

Conclusion

Phi3 3.8B Instruct is a 3.8B-parameter model optimized for instruction adherence, reasoning, and chat formats, with a 128K-token context length and support for multiple formats such as HF, ONNX, and GGUF. It is designed for general-purpose AI systems in constrained environments, latency-sensitive tasks, and applications requiring strong reasoning capabilities.

References

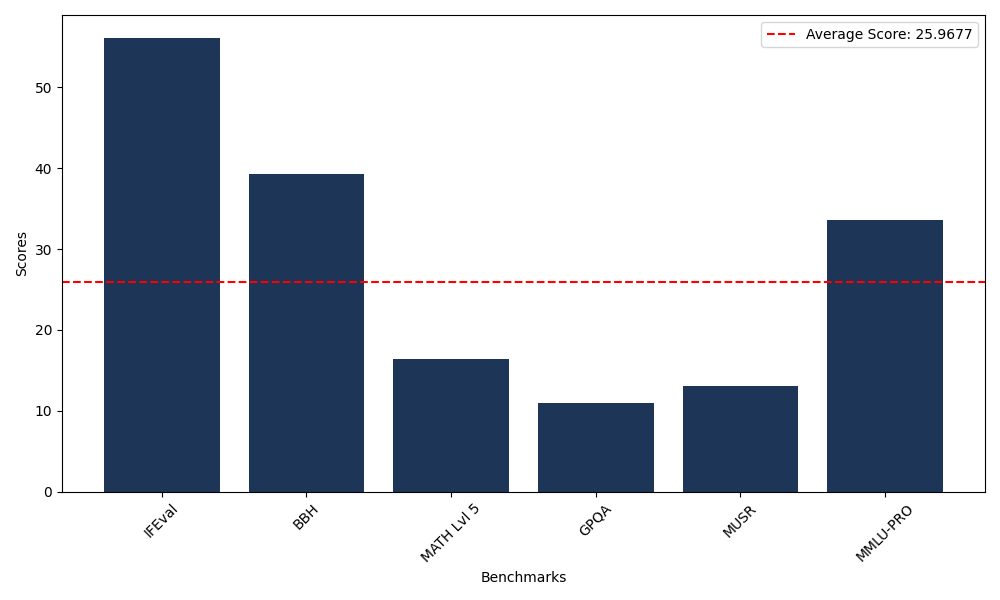

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 56.13 |
| BIG-Bench Hard (BBH) | 39.27 |
| MATH Level 5 (MATH Lvl 5) | 11.63 |
| Graduate-Level Google-Proof Q&A (GPQA) | 9.28 |
| Multistep Soft Reasoning (MuSR) | 7.64 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 31.85 |
| Instruction-Following Evaluation (IFEval) | 54.77 |
| BIG-Bench Hard (BBH) | 36.56 |
| MATH Level 5 (MATH Lvl 5) | 16.39 |
| Graduate-Level Google-Proof Q&A (GPQA) | 10.96 |
| Multistep Soft Reasoning (MuSR) | 13.12 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 33.58 |
