Phi3.5 3.8B Instruct - Model Details

Phi3.5 3.8B Instruct is a large language model developed by Microsoft, with 3.8 billion parameters, tuned for instruction following. It is released under the MIT License, which permits both commercial and research use. The model is lightweight and is positioned as competitive with larger models, which suits it to tasks that need concise and accurate responses.

Description of Phi3.5 3.8B Instruct

Phi3.5 3.8B Instruct is a 3.8B parameter large language model trained on the Phi-3 datasets, which include synthetic data and filtered publicly available websites. It supports 4K and 128K token context lengths, which gives it flexibility for complex tasks. The model undergoes post-training for instruction following and safety. It is strong in reasoning, math, and logic tasks, and its small size makes it suitable for memory-constrained environments and latency-sensitive scenarios. Designed as a state-of-the-art open model, it balances efficiency with strong capabilities for general-purpose AI applications.

Parameters & Context Length of Phi3.5 3.8B Instruct

At 3.8B parameters, Phi3.5 3.8B Instruct sits in the small to mid-scale range, which keeps inference fast and resource-efficient while retaining enough capability for tasks of moderate complexity. Its 128K token context length falls into the very long category: it can handle extensive text sequences, but filling that window requires significant memory and compute. This combination suits applications that need both efficiency and long-context understanding, such as document analysis or complex reasoning.

- Parameter Size: 3.8B – small to mid-scale, efficient for resource-constrained environments

- Context Length: 128K – very long, ideal for handling extensive text but resource-intensive
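
To make the "resource-intensive" point concrete, here is a rough sketch of how the attention key/value cache grows with context length. The layer count, head count, and head dimension below are assumptions for illustration and are not stated in this document; check them against the model's published configuration. The sketch also assumes standard multi-head attention and a 16-bit cache.

```python
def kv_cache_bytes(num_tokens, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV cache size in bytes for a single sequence."""
    # The leading factor of 2 accounts for storing both keys and values.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


# Assumed architecture values (hypothetical, not taken from this document).
ASSUMED_LAYERS = 32
ASSUMED_KV_HEADS = 32
ASSUMED_HEAD_DIM = 96

for tokens in (4 * 1024, 128 * 1024):
    size = kv_cache_bytes(tokens, ASSUMED_LAYERS, ASSUMED_KV_HEADS, ASSUMED_HEAD_DIM)
    print(f"{tokens:>7} tokens: {size / 2**30:.1f} GiB")
```

Under these assumptions the cache grows linearly with the number of tokens, so a full 128K window needs 32 times the cache memory of a 4K window, on top of the memory for the weights themselves.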

Possible Intended Uses of Phi3.5 3.8B Instruct

Phi3.5 3.8B Instruct is designed for general-purpose AI systems, research applications, latency-bound scenarios, math and logic reasoning tasks, and memory-constrained environments. Its small size makes it a candidate for applications that need quick responses or must run on limited computational resources. Possible uses include supporting research in natural language processing, running AI systems on edge devices, and handling tasks that demand strong reasoning. Each of these uses should be evaluated against the specific needs and constraints of the deployment. The model’s 128K token context length and 3.8B parameter size suit deployments where performance must be balanced against resource usage.

- general-purpose AI systems

- research applications

- latency-bound scenarios

- math and logic reasoning tasks

- memory-constrained environments

Possible Applications of Phi3.5 3.8B Instruct

With 3.8B parameters and a 128K token context length, Phi3.5 3.8B Instruct is a possible choice for applications that need efficiency and scalability. It could back general-purpose AI systems that must balance performance against resource usage, support research applications that benefit from its reasoning capabilities, or serve latency-bound scenarios where quick responses are critical. Its lightweight design also makes deployment in memory-constrained environments worth exploring. Each application must be thoroughly evaluated and tested before use.

- general-purpose AI systems

- research applications

- latency-bound scenarios

- memory-constrained environments

Quantized Versions & Hardware Requirements of Phi3.5 3.8B Instruct

The q4 (medium) quantized version of Phi3.5 3.8B Instruct is listed as needing a GPU with at least 12GB of VRAM and 32GB of system memory to run efficiently, which puts it within reach of devices with moderate hardware. Quantization trades some precision for lower memory use and faster inference, which suits latency-bound scenarios and memory-constrained environments. Hardware compatibility should still be verified for each specific use case.

- fp16, q2, q3, q4, q5, q6, q8
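
As a back-of-the-envelope aid for comparing the versions listed above, the sketch below estimates the memory taken by the weights alone from the parameter count and a nominal bit width per weight. The bit widths are assumptions read off the version names; real quantized files typically carry extra bits for scales and metadata, and a running model also needs memory for the KV cache and activations, so treat these figures as lower bounds.

```python
PARAMS = 3.8e9  # parameter count stated in this document

# Nominal bits per weight, assumed from the version names.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(params, bits_per_weight):
    """Weights-only size in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def weight_table(params=PARAMS):
    """Map each listed version to its estimated weights-only size in GB."""
    return {name: weight_gb(params, bits) for name, bits in NOMINAL_BITS.items()}


for name, gb in weight_table().items():
    print(f"{name:>4}: ~{gb:.1f} GB")
```

By this estimate the q4 weights occupy about a quarter of the fp16 footprint, which is the main reason lower-bit versions fit on smaller devices.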

Conclusion

Phi3.5 3.8B Instruct is a 3.8B parameter large language model with a 128K token context length, designed for general-purpose AI systems, research applications, and latency-bound scenarios. Its lightweight architecture keeps it efficient, and its MIT License makes it easy to adopt. It is available in multiple quantized versions, including q4, which makes it adaptable to diverse hardware environments.

References

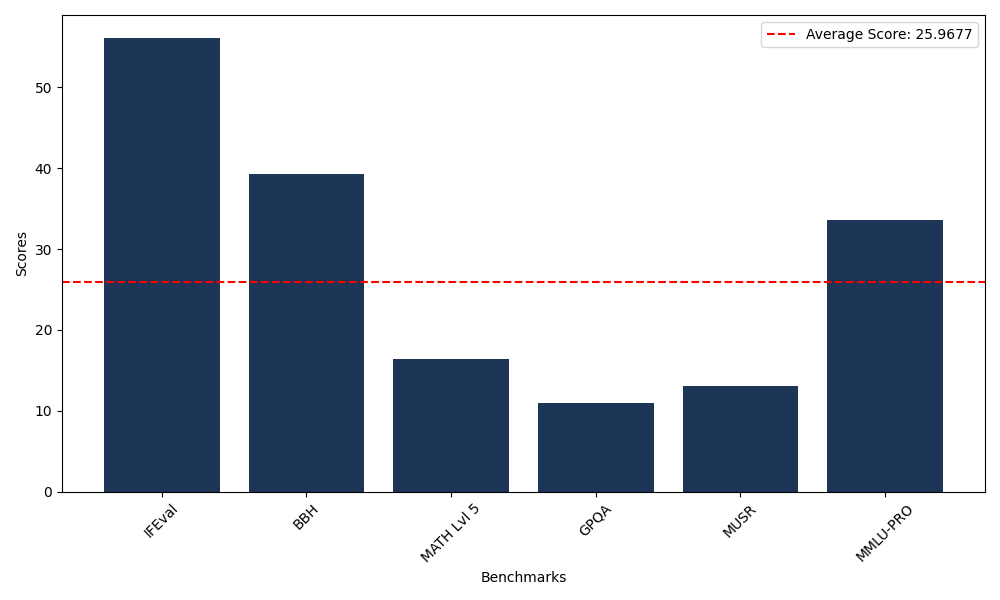

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 56.13 |
| BIG-Bench Hard (BBH) | 39.27 |
| Mathematical Reasoning Test (MATH Lvl 5) | 11.63 |
| Graduate-Level Google-Proof Q&A (GPQA) | 9.28 |
| Multistep Soft Reasoning (MuSR) | 7.64 |
| Massive Multitask Language Understanding (MMLU-PRO) | 31.85 |
| Instruction Following Evaluation (IFEval) | 54.77 |
| BIG-Bench Hard (BBH) | 36.56 |
| Mathematical Reasoning Test (MATH Lvl 5) | 16.39 |
| Graduate-Level Google-Proof Q&A (GPQA) | 10.96 |
| Multistep Soft Reasoning (MuSR) | 13.12 |
| Massive Multitask Language Understanding (MMLU-PRO) | 33.58 |
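
The table lists two sets of scores for the same six benchmarks without saying what distinguishes them. As a small aid for comparing them, the sketch below computes the mean of each set and the per-benchmark difference. The names `FIRST_SET` and `SECOND_SET` are labels chosen here for illustration and only reflect the order of the rows.

```python
BENCHMARKS = ["IFEval", "BBH", "MATH Lvl 5", "GPQA", "MuSR", "MMLU-PRO"]

# Scores copied from the table, in row order.
FIRST_SET = [56.13, 39.27, 11.63, 9.28, 7.64, 31.85]
SECOND_SET = [54.77, 36.56, 16.39, 10.96, 13.12, 33.58]


def mean(scores):
    """Arithmetic mean of a list of scores."""
    return sum(scores) / len(scores)


def differences(first, second):
    """Per-benchmark difference, second minus first."""
    return {name: b - a for name, a, b in zip(BENCHMARKS, first, second)}


print(f"first set mean:  {mean(FIRST_SET):.2f}")
print(f"second set mean: {mean(SECOND_SET):.2f}")
for name, delta in differences(FIRST_SET, SECOND_SET).items():
    print(f"{name:>10}: {delta:+.2f}")
```

An unweighted mean treats all six benchmarks as equally important, which is a simplifying choice rather than something the source specifies.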
