Phi4 14B - Model Details

Phi4 14B is an open large language model developed by Microsoft with 14 billion parameters. It is released under the Microsoft license, offering flexibility for research and commercial use. The model is designed to deliver strong performance while remaining accessible to developers and organizations.

Description of Phi4 14B

Phi-4 is a state-of-the-art open model built on a diverse mix of synthetic datasets, filtered public domain websites, and acquired academic books and Q&A resources. It leverages supervised fine-tuning and direct preference optimization to enhance alignment, ensuring strong instruction-following capabilities and robust safety measures. The model prioritizes precision and reliability through rigorous training and optimization processes.

Parameters & Context Length of Phi4 14B

Phi4 14B has 14 billion parameters, placing it in the mid-scale category of open LLMs and balancing performance against resource efficiency for tasks of moderate complexity. Its 16k-token context length falls into the long-context range, which lets it handle extended texts at the cost of more memory and compute. This combination suits tasks that need deeper contextual understanding without excessive resource consumption.

- Parameter Size: 14b

- Context Length: 16k
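
To make the context figure concrete, here is a minimal sketch of a prompt-budget check. It rests on assumptions that are not in the model card: that "16k" means 16,384 tokens, and that English text averages roughly 4 characters per token. The function names are hypothetical, and a real tokenizer should be used when exact counts matter.

```python
# Rough check of whether a prompt fits in the context window.
# Assumptions (not from the model card): "16k" means 16,384 tokens,
# and English text averages about 4 characters per token.

CONTEXT_TOKENS = 16 * 1024   # assumed meaning of "16k"
CHARS_PER_TOKEN = 4          # heuristic, not a tokenizer measurement


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_output: int = 1024) -> bool:
    """Return True if the estimated prompt tokens plus the output budget fit."""
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_TOKENS


if __name__ == "__main__":
    print(fits_in_context("word " * 1000))    # about 1,250 estimated tokens
    print(fits_in_context("word " * 20000))   # about 25,000 estimated tokens
```

Reserving part of the window for the reply matters because the prompt and the generated output share the same context length.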

Possible Intended Uses of Phi4 14B

Phi4 14B is a versatile model with several possible uses, each of which requires further exploration. Its 14b parameter size and 16k context length could make it suitable for accelerating research on language models, where a mid-sized model allows faster experimentation and analysis. It could also serve as a foundation for generative AI features that developers integrate into tools or systems. In memory/compute-constrained environments or latency-bound scenarios, it might support general-purpose AI applications in English. Its reasoning capabilities may also help with tasks that require structured problem-solving or contextual understanding. All of these uses should be thoroughly investigated against specific requirements and limitations before adoption.

- accelerate research on language models

- serve as a building block for generative AI powered features

- support general-purpose AI systems and applications in English in memory/compute-constrained environments

- latency bound scenarios

- reasoning

Possible Applications of Phi4 14B

Phi4 14B has possible applications in areas such as language model research, where its 14b parameter size and 16k context length could support the study of complex linguistic patterns. It might also serve as a foundation for generative AI features in tools that require efficient processing. In memory/compute-constrained environments or latency-bound scenarios, it could support general-purpose AI systems in English. Its reasoning capabilities might also be useful for tasks involving structured problem-solving or contextual analysis. Each of these applications requires thorough evaluation against the specific use case and its technical requirements.

- accelerate research on language models

- serve as a building block for generative AI powered features

- support general-purpose AI systems and applications in English in memory/compute-constrained environments

- reasoning

Quantized Versions & Hardware Requirements of Phi4 14B

Phi4 14B with q4 quantization trades some precision for lower memory use and faster inference. It calls for a GPU with at least 16GB VRAM for efficient operation, though actual needs vary with workload and system configuration. A multi-core CPU and 32GB+ of system memory are recommended for smooth execution, and adequate cooling and power supply are essential for stability. This version targets environments where resource efficiency is critical, making it possible to run on mid-range GPUs.

- fp16, q4, q8
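
As a rough illustration of why the quantization level matters, the sketch below estimates the memory taken by the weights alone. It assumes exactly 14e9 parameters and 16, 8, and 4 bits per weight for fp16, q8, and q4. Those figures are assumptions rather than numbers from the model card, and the estimate ignores the KV cache, activations, and quantization metadata, so real usage will be higher.

```python
# Rough weight-memory estimate per quantization level.
# Assumptions (not from the model card): exactly 14e9 parameters;
# 16/8/4 bits per weight for fp16/q8/q4; no allowance for the
# KV cache, activations, or quantization metadata.

PARAMS = 14e9
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(params: float, bits: int) -> float:
    """Return the size of the weights alone in gigabytes (10^9 bytes)."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: ~{weight_memory_gb(PARAMS, bits):.0f} GB for weights")
```

Under these assumptions the q4 weights come to about 7 GB, which fits the 16GB VRAM guidance above with headroom for runtime overhead, while fp16 at about 28 GB would not fit on such a card.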

Conclusion

Phi4 14B is an open large language model developed by Microsoft with 14b parameters and a 16k context length, designed for efficient performance in resource-constrained environments. Its possible uses include research acceleration, generative AI features, and general-purpose systems, but each application requires thorough evaluation before deployment.

References

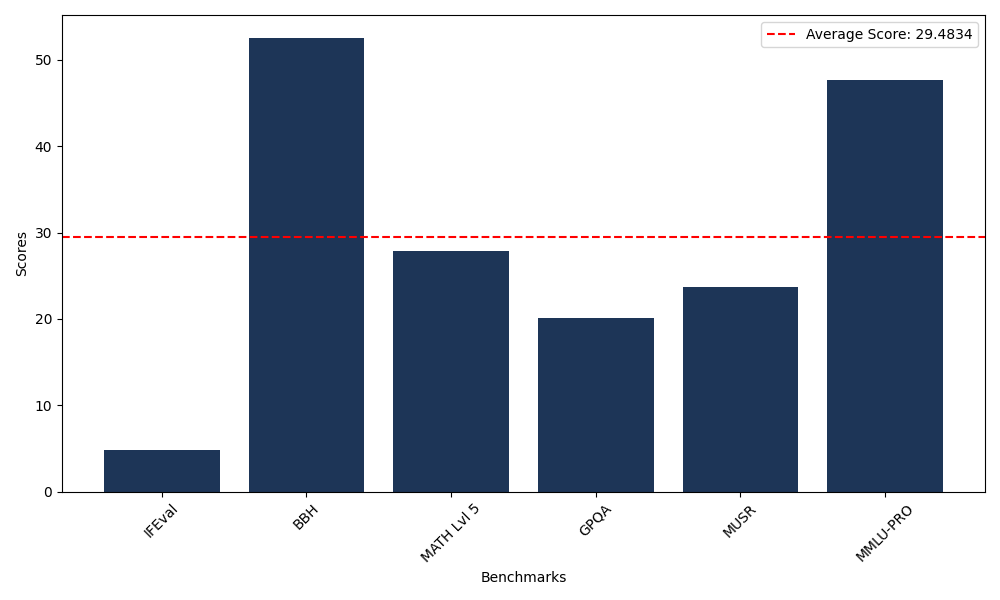

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 4.88 |
| BIG-Bench Hard (BBH) | 52.58 |
| Mathematical Reasoning Test (MATH Lvl 5) | 27.87 |
| Graduate-Level Google-Proof Q&A (GPQA) | 20.13 |
| Multistep Soft Reasoning (MuSR) | 23.72 |
| Massive Multitask Language Understanding (MMLU-PRO) | 47.72 |
