Phi4 Mini 3.8B

Phi4 Mini 3.8B is a large language model developed by Microsoft. Its 3.8 billion parameters target multilingual capability, reasoning, and mathematical problem-solving. Microsoft maintains the model and distributes it under the MIT license as part of its effort to advance AI technologies across diverse applications.

Description of Phi4 Mini 3.8B

Phi-4-mini-instruct is a lightweight open model with 3.8B parameters designed for memory-constrained environments and strong reasoning capabilities. It supports a 128K token context length, enabling efficient handling of extended inputs. The model excels in multilingual understanding, instruction following, function calling, and safety. Part of the Phi-4 family, it is trained on diverse datasets including synthetic data, filtered public websites, and high-quality educational content to enhance versatility and performance across tasks.

Parameters & Context Length of Phi4 Mini 3.8B

Phi-4-mini-instruct has 3.8B parameters and a 128K-token context length. The small parameter count keeps memory use and latency low, which suits memory-constrained environments and tasks that need quick responses. The 128K context lets the model process long texts in a single pass, but memory use grows with the number of tokens held in context, so long inputs need more resources than short ones.

- Name: Phi-4-mini-instruct

- Parameter Size: 3.8B

- Context Length: 128K tokens

- Implications: The small parameter count suits memory-constrained tasks; the long context supports extended inputs but raises resource needs as inputs grow.
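The resource cost of a long context can be made concrete with a rough key-value cache estimate. The sketch below is illustrative only: the layer count, number of key-value heads, and head dimension are assumed placeholder values that this document does not state, 128K is taken to mean 131,072 tokens, and cache entries are assumed to be 16-bit. Check the model's published configuration before relying on any of these numbers.

```python
def kv_cache_bytes(tokens, layers, kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV-cache size.

    Each token stores one key vector and one value vector (hence the factor 2)
    per layer, each of size kv_heads * head_dim.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


# ASSUMED architecture values, for illustration only (not taken from this document).
ASSUMED_ARCH = dict(layers=32, kv_heads=8, head_dim=128)

for tokens in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(tokens, **ASSUMED_ARCH) / 2**30
    print(f"{tokens:>7} tokens -> {gib:.1f} GiB of KV cache")
```

Under these assumptions the cache grows linearly with the number of tokens, so filling the whole context window costs 32 times as much cache memory as a 4,096-token prompt.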

Possible Intended Uses of Phi4 Mini 3.8B

Phi-4-mini-instruct is intended for general-purpose AI systems, reasoning tasks such as math and logic, and environments where latency or memory is constrained. Its support for 30+ languages also makes it a candidate for research on language models, multimodal systems, and generative AI features. The 128K-token context could help in scenarios that involve extended inputs, and the model might serve as a building block for other AI-driven applications. These are possible uses, not validated ones: each requires thorough testing before the model is relied on for a specific task.

- general purpose AI systems and applications requiring strong reasoning (math, logic)

- latency-bound scenarios and memory-constrained environments

- research on language and multimodal models as a building block for generative AI features

Possible Applications of Phi4 Mini 3.8B

Phi-4-mini-instruct could suit applications that need strong reasoning, multilingual support, or efficient resource use. Possible examples include educational tools that use its multilingual capabilities to support diverse language learners, research projects exploring generative AI features, customer service systems that handle complex queries across multiple languages, and content creation workflows where latency or memory constraints are critical. Each of these potential uses requires thorough evaluation and testing before deployment to ensure it meets the specific requirements.

- educational tools for diverse language learners

- research projects exploring generative AI features

- customer service systems handling complex queries in multiple languages

- content creation workflows under latency or memory constraints

- multilingual applications benefiting from support for 30+ languages

Quantized Versions & Hardware Requirements of Phi4 Mini 3.8B

The q4 quantization of Phi-4-mini-instruct trades some precision for lower memory use. The recommended hardware for running it efficiently is a GPU with at least 12GB of VRAM and a system with 32GB of RAM, which puts it within reach of mid-range hardware. It suits scenarios where resources are constrained, though accuracy and speed may vary with the application.

- Quantized Versions: fp16, q4, q8
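To compare the three variants, a back-of-the-envelope estimate of weight storage is parameters times bits per weight, divided by 8. The sketch below assumes nominal widths of 16, 8, and 4 bits for fp16, q8, and q4; real quantized files store extra scaling data, so actual sizes run somewhat larger. The result is a lower bound for the weights alone. It excludes the context cache, activations, and runtime overhead, so it does not replace the hardware figures quoted above.

```python
PARAMS = 3.8e9  # parameter count given in the text

# ASSUMED nominal bits per weight; actual quantization formats add overhead.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_gb(params, bits):
    """Weight storage in decimal gigabytes: params * bits / 8 bytes."""
    return params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{weight_gb(PARAMS, bits):.1f} GB for weights alone")
```

Halving the bit width halves the weight footprint, which is why q4 is the variant usually considered first on constrained hardware.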

Conclusion

Phi-4-mini-instruct is a 3.8B-parameter model with a 128K-token context length, designed for memory-constrained environments and supporting multilingual tasks across 30+ languages. Part of the Phi-4 family, it balances efficiency and performance for general-purpose AI applications, reasoning tasks, and research on language and multimodal models.

References

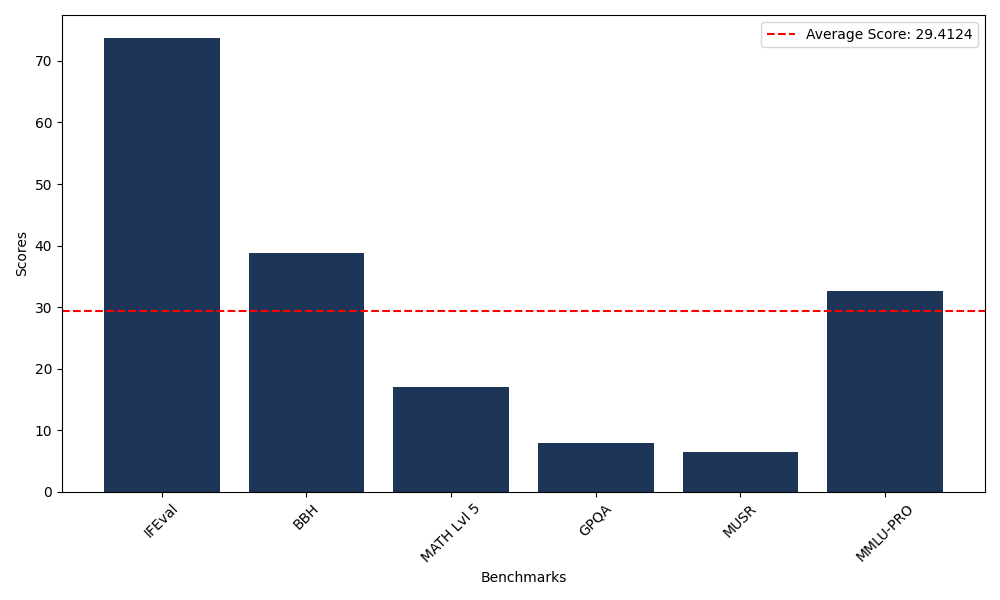

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 73.78 |
| BIG-Bench Hard (BBH) | 38.74 |
| MATH, Level 5 (MATH Lvl 5) | 16.99 |
| Graduate-Level Google-Proof Q&A (GPQA) | 7.94 |
| Multistep Soft Reasoning (MuSR) | 6.45 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 32.58 |