Phi4 Mini Reasoning 3.8B

Phi4 Mini Reasoning 3.8B is a language model developed by Microsoft with 3.8 billion parameters. It is designed for efficient, accurate mathematical reasoning in resource-constrained environments and is released under the MIT license. Its focus is practical: usable accuracy on math problems at a size that runs on modest hardware.

Description of Phi4 Mini Reasoning 3.8B

Phi-4-mini-reasoning is a lightweight open model trained on synthetic data, with a focus on high-quality, reasoning-dense content. It belongs to the Phi-4 family and is optimized for mathematical reasoning. With 3.8B parameters and a 128K-token context length, it targets multi-step problem-solving in constrained environments and is aimed at educational applications, embedded tutoring systems, and edge deployments. It is fine-tuned for accuracy while keeping compute and memory demands low.

Parameters & Context Length of Phi4 Mini Reasoning 3.8B

Phi-4-mini-reasoning is a 3.8B-parameter model with a 128K-token context length, designed for efficient mathematical reasoning in resource-constrained settings. At 3.8B parameters it sits in the small to mid-scale range: inference is fast and compute demands are low compared with larger models, while precision on complex tasks is retained. The 128K-token context window accommodates long inputs, which suits multi-step problem-solving and detailed text analysis. Together, these specifications allow deployment in educational tools and on edge devices without heavy resource consumption.

- Parameter Size: 3.8B (optimized for resource efficiency and mathematical accuracy)

- Context Length: 128K (supports long-form reasoning and complex problem-solving)
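
To make the context-length figure concrete, the following is a minimal sketch of a budget check for a prompt plus a reserved output allowance. It rests on two assumptions that do not come from this page: "128K" is read as 128 × 1024 tokens, and roughly four characters per token is used as a crude stand-in for the model's real tokenizer. The names `estimate_tokens` and `fits_in_context` are hypothetical helpers for illustration.

```python
# Rough context-budget check.
# Assumptions (not from the model page): 128K means 128 * 1024 tokens, and
# ~4 characters per token approximates the real tokenizer.
CONTEXT_TOKENS = 128 * 1024
CHARS_PER_TOKEN = 4  # heuristic only; a real tokenizer will differ


def estimate_tokens(text: str) -> int:
    """Crude token estimate: ceiling of len(text) / CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_output: int) -> bool:
    """True if the estimated prompt tokens plus the output budget fit the window."""
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_TOKENS


problem = "Prove that the sum of the first n odd numbers equals n squared."
print(estimate_tokens(problem), fits_in_context(problem, reserved_for_output=8192))
```

For anything close to the limit, counting tokens with the model's actual tokenizer is the safer choice, since reasoning models can emit long step-by-step outputs.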

Possible Intended Uses of Phi4 Mini Reasoning 3.8B

Phi-4-mini-reasoning is designed for mathematical reasoning, and that focus suggests several possible uses: multi-step, logic-intensive problems, formal proof generation, symbolic computation, and advanced word problems. In education, it might power embedded tutoring systems or serve as a lightweight option for edge or mobile deployments. All of these call for precision and structured reasoning, and each needs further evaluation before its viability can be confirmed.

- multi-step, logic-intensive mathematical problem-solving

- formal proof generation

- symbolic computation

- advanced word problems

- mathematical reasoning scenarios

- educational applications

- embedded tutoring systems

- lightweight deployment on edge or mobile systems

Possible Applications of Phi4 Mini Reasoning 3.8B

Because Phi-4-mini-reasoning is optimized for mathematical reasoning, it could be applied to multi-step problem-solving, formal proof generation, or symbolic computation, as well as word problems that require structured, step-by-step analysis. Its small size also makes it a candidate foundation for educational tools and embedded tutoring systems running on edge devices. Each application should be evaluated thoroughly against its specific requirements before deployment.

- multi-step, logic-intensive mathematical problem-solving

- formal proof generation

- symbolic computation

- educational applications and embedded tutoring systems
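
As an illustration of how a tutoring tool might hand a multi-step problem to the model, here is a minimal sketch that builds a request for a locally running Ollama server. Several things are assumptions rather than facts from this page: that the model is served through Ollama at `localhost:11434`, that it has been pulled under the tag `phi4-mini-reasoning:3.8b`, and the prompt wording itself. `build_request` and `solve` are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions (not stated on this page): an Ollama server is listening locally
# and the model is available under the tag below.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "phi4-mini-reasoning:3.8b"  # assumed tag


def build_request(problem: str) -> urllib.request.Request:
    """Build a non-streaming generate request asking for step-by-step work."""
    payload = {
        "model": MODEL_TAG,
        "prompt": f"Solve step by step, then state the final answer.\n\n{problem}",
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def solve(problem: str, timeout: float = 300.0) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(problem), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With a server running, `solve("A train travels 120 km in 1.5 hours. What is its average speed?")` would return the model's worked answer as a string. Keeping request construction separate from the network call makes the payload easy to inspect or test offline.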

Quantized Versions & Hardware Requirements of Phi4 Mini Reasoning 3.8B

The medium q4 quantization of Phi-4-mini-reasoning is intended for systems with a GPU that has at least 12GB of VRAM, along with a multi-core CPU, at least 32GB of system memory, and adequate cooling. This quantization trades some precision for a smaller memory footprint and faster inference. The model is light enough to target edge or mobile systems, though actual hardware needs vary with the workload.

- Quantized Versions: fp16, q4, q8
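
For a sense of how the quantization levels compare, the sketch below estimates weights-only memory as parameters × bits per weight ÷ 8. It assumes nominal widths of 16, 8, and 4 bits. Real quantized files carry extra scale data and may keep some layers at higher precision, so they come out larger. The estimate also leaves out the KV cache, activations, and runtime overhead, which is why practical requirements sit well above these numbers.

```python
# Weights-only memory estimate: params * bits_per_weight / 8 bytes.
# Assumption: nominal bit widths; actual quantized formats add overhead.
PARAMS = 3.8e9
BITS = {"fp16": 16, "q8": 8, "q4": 4}


def weight_gigabytes(params: float, bits: int) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return params * bits / 8 / 1e9


for name, bits in BITS.items():
    print(f"{name}: ~{weight_gigabytes(PARAMS, bits):.1f} GB")
# fp16: ~7.6 GB
# q8: ~3.8 GB
# q4: ~1.9 GB
```

These figures are a lower bound for planning purposes, not a substitute for measuring memory use on the target device.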

Conclusion

Phi-4-mini-reasoning is a 3.8B parameter model with a 128K token context, optimized for mathematical reasoning tasks. Developed by Microsoft, it balances efficiency and precision, making it suitable for educational tools, embedded systems, and edge deployments.

References

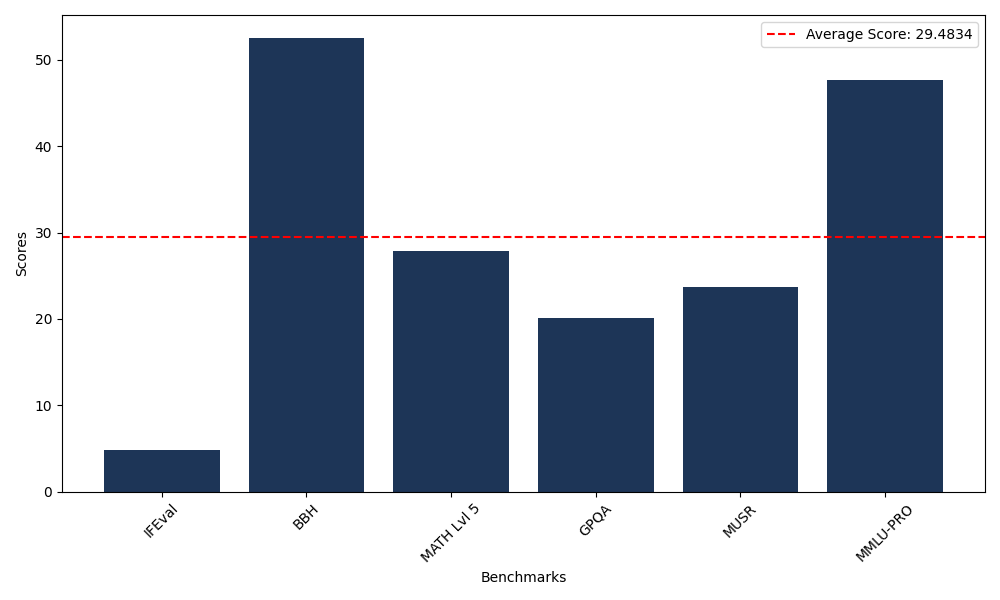

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 4.88 |
| BIG-Bench Hard (BBH) | 52.58 |
| MATH dataset, level 5 problems (MATH Lvl 5) | 27.87 |
| Graduate-Level Google-Proof Q&A (GPQA) | 20.13 |
| Multistep Soft Reasoning (MuSR) | 23.72 |
| Massive Multitask Language Understanding, Pro version (MMLU-Pro) | 47.72 |