Phi4 Reasoning 14B - Model Details

Phi4 Reasoning 14B is a large language model developed by Microsoft. Its 14 billion parameters are sized to balance resource use against strong reasoning performance. The model is released under the Microsoft license and maintained by Microsoft, and it targets tasks that require logical and analytical reasoning.

Description of Phi4 Reasoning 14B

Phi4 Reasoning 14B is a state-of-the-art open-weight reasoning model fine-tuned from Phi-4 with supervised fine-tuning on chain-of-thought traces and with reinforcement learning. It is strongest on reasoning and logic tasks, particularly math, science, and coding. Each response has two sections: a chain-of-thought reasoning block followed by a summary block. The model was trained on English text and is optimized for memory-constrained and latency-bound scenarios, which makes it efficient for real-world applications.
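Because every response contains a reasoning block followed by a summary, applications usually need to separate the two before showing output to users. The Python sketch below does this with a regular expression. It assumes the reasoning block is wrapped in `<think>` ... `</think>` tags; the tag names and the `split_response` helper are assumptions for illustration, so check them against the chat template of the build you actually run.

```python
import re
from typing import NamedTuple

# Assumption: the reasoning block is delimited by <think> ... </think> tags.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


class ParsedResponse(NamedTuple):
    reasoning: str  # chain-of-thought block (empty if no tags were found)
    summary: str    # text that follows the reasoning block


def split_response(text: str) -> ParsedResponse:
    """Split a raw completion into its reasoning and summary sections (hypothetical helper)."""
    match = THINK_PATTERN.search(text)
    if match is None:
        # No delimited reasoning block: treat the whole output as the summary.
        return ParsedResponse(reasoning="", summary=text.strip())
    reasoning = match.group(1).strip()
    summary = text[match.end():].strip()
    return ParsedResponse(reasoning=reasoning, summary=summary)


raw = "<think>2 + 2: add the units digits.</think>\nThe answer is 4."
parsed = split_response(raw)
print(parsed.reasoning)
print(parsed.summary)
```

If generation is cut off before the closing tag, this sketch returns the whole text as the summary, so a production parser may want to detect an unmatched opening tag separately.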

Parameters & Context Length of Phi4 Reasoning 14B

With 14 billion parameters, Phi4 Reasoning 14B sits in the mid-scale range of open-weight LLMs, balancing capability against resource requirements for moderately complex tasks. Its 32k-token context window lets it handle long documents and extended reasoning chains, although memory use and compute grow as more of that window is filled. Together, these properties suit tasks that need both careful analysis and long inputs.

- Name: Phi4 Reasoning 14B

- Parameter Size: 14B

- Context Length: 32k tokens

- Implications: Mid-scale parameters for balanced performance, long context for extended tasks but higher resource demands.
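The higher resource demand of long contexts comes largely from the key/value (KV) cache, which grows linearly with the number of tokens held in the window. The Python sketch below checks whether a request fits in the context window and estimates fp16 KV-cache size. It assumes "32k" means 32,768 tokens, and the layer count, KV-head count, and head dimension are illustrative placeholders, not confirmed values for this model; read the real ones from the model's configuration.

```python
# Assumption: "32k" means 32,768 tokens.
CONTEXT_LENGTH = 32_768

# Hypothetical architecture values for illustration only; not confirmed for this model.
NUM_LAYERS = 40
NUM_KV_HEADS = 10
HEAD_DIM = 128


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Return True if the prompt plus the generation budget fits in the window."""
    return prompt_tokens + max_new_tokens <= context_length


def kv_cache_bytes(num_tokens: int, bytes_per_value: int = 2) -> int:
    """Estimate KV-cache size: one key and one value vector per layer, KV head, and token."""
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value * num_tokens


for tokens in (4_096, 16_384, CONTEXT_LENGTH):
    gib = kv_cache_bytes(tokens) / 2**30
    print(f"{tokens:>6} tokens -> {gib:.2f} GiB of fp16 KV cache")
```

Under these placeholder values the cache costs about 200 KiB per token, so a full window adds several GiB on top of the model weights. Reasoning models also produce long chain-of-thought outputs, so `max_new_tokens` should leave room for the reasoning block as well as the summary.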

Possible Intended Uses of Phi4 Reasoning 14B

Phi4 Reasoning 14B is designed for tasks that require reasoning, logic, and problem-solving. Its intended uses include accelerating research on language models, building generative AI-powered features, and supporting systems that run in memory- or compute-constrained environments. Possible uses include automated reasoning tools, code-generation workflows, and decision support where efficiency and logical accuracy are priorities. These uses could benefit from the model's structured reasoning chains and long context, but each needs further investigation before deployment, and the model's effectiveness in a specific domain may depend on additional fine-tuning and evaluation.

- Name: Phi4 Reasoning 14B

- Purpose: Accelerating research on language models, building generative AI features, applications requiring reasoning and problem-solving

- Other Important Info: Designed for memory/compute constrained environments, supports extended contexts for complex reasoning

Possible Applications of Phi4 Reasoning 14B

Possible applications of Phi4 Reasoning 14B include areas that require structured reasoning: code generation for software development, educational tools that teach logic or problem-solving, analysis of data with complex patterns, and automated decision-making in constrained environments. These applications would draw on the model's ability to break problems into reasoning chains and to run efficiently in memory-limited settings. Each one still needs thorough evaluation against its own requirements and constraints, and the model's effectiveness in research, development, or optimization tasks will depend on how it is implemented and validated.

- Name: Phi4 Reasoning 14B

- Possible Applications: code generation, educational tools, data analysis, automation of decision-making

- Other Important Info: designed for reasoning, memory-constrained environments, structured problem-solving

Quantized Versions & Hardware Requirements of Phi4 Reasoning 14B

The q4 quantized version of Phi4 Reasoning 14B balances precision against performance. It calls for a GPU with at least 16GB of VRAM and a system with 32GB of RAM, which puts it within reach of mid-range hardware and memory-constrained environments. Quantization reduces computational demands while keeping accuracy reasonable, but actual requirements vary with context length, workload, and inference software.

- Name: Phi4 Reasoning 14B

- Quantized Versions: fp16, q4, q8

- Other Important Info: optimized for memory-constrained environments, requires GPU with 16GB+ VRAM for q4
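A quick way to see why the quantized versions matter is to estimate the memory needed for the weights alone: parameters × bits per weight ÷ 8 bytes. The Python sketch below does this arithmetic for the three listed versions. It uses the nominal 14 billion parameters and nominal bit widths; real q4 and q8 formats store extra scaling data, so these figures are lower bounds, and they exclude the KV cache, activations, and runtime overhead.

```python
PARAMETERS = 14e9  # nominal parameter count from the model name

# Nominal bits per weight; real quantized formats add scale data, so these are lower bounds.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(parameters: float, bits: float) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return parameters * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>4}: ~{weight_memory_gb(PARAMETERS, bits):.0f} GB of weights")
```

At roughly 7 GB of weights, the q4 version leaves headroom within the 16GB VRAM figure above for the KV cache and runtime buffers, whereas fp16 weights alone (about 28 GB) exceed a 16GB card.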

Conclusion

Phi4 Reasoning 14B is a mid-scale, 14-billion-parameter language model fine-tuned for reasoning, and quantized versions such as q4 let it run on mid-range hardware while balancing performance and resource use. It supports applications that require logical analysis, code generation, and problem-solving in constrained environments, and it may be adapted to further use cases.

References

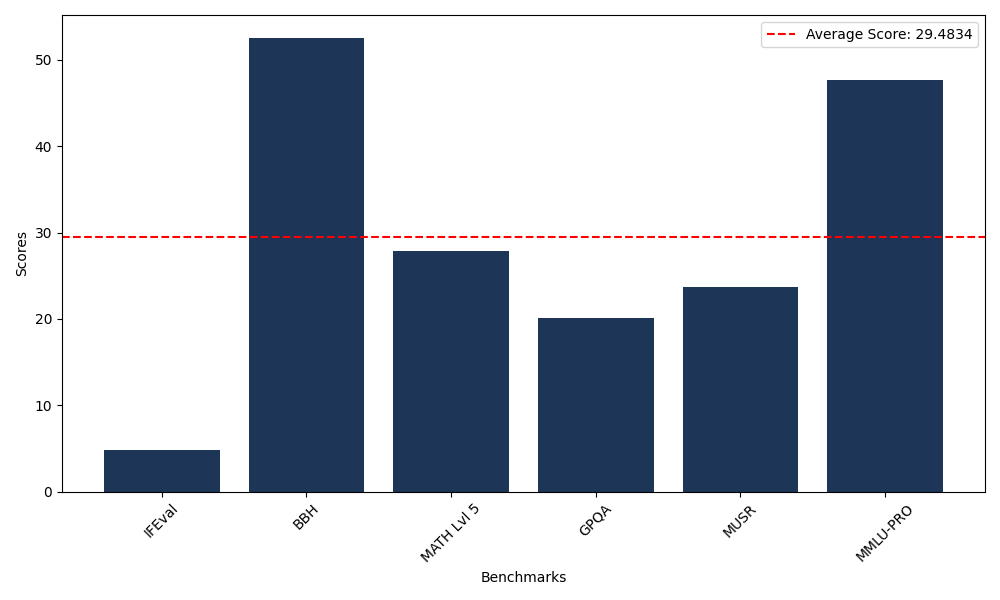

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 4.88 |
| BIG-Bench Hard (BBH) | 52.58 |
| MATH, Level 5 subset (MATH Lvl 5) | 27.87 |
| Graduate-Level Google-Proof Q&A (GPQA) | 20.13 |
| Multistep Soft Reasoning (MuSR) | 23.72 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 47.72 |
