Qwen2 0.5B Instruct - Model Details

Qwen2 0.5B Instruct is a large language model developed by the Qwen team at Alibaba Cloud, with 0.5B parameters. It is listed under several licenses: Apache License 2.0 (Apache-2.0), Tongyi Qianwen License Agreement (TQ-LA), and The Unlicense (Unlicense). As an instruction-tuned model, it targets multilingual tasks and offers an extended context length and improved coding and mathematics capabilities.

Description of Qwen2 0.5B Instruct

Qwen2 is a new series of Qwen large language models that includes base and instruction-tuned models ranging from 0.5B to 72B parameters. The Qwen team reports that the series is competitive with proprietary models in language understanding, generation, multilingual capability, coding, mathematics, and reasoning. The models use a Transformer architecture with SwiGLU activation and an improved tokenizer adapted to multiple natural languages and programming languages. Qwen2 builds on earlier Qwen versions with improved performance across a range of tasks.
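To make the SwiGLU activation mentioned above concrete, here is a minimal pure-Python sketch of the standard SwiGLU feed-forward block for a single token vector: FFN(x) = W_down · (SiLU(W_gate · x) ⊙ (W_up · x)), where SiLU(z) = z · sigmoid(z) (also called Swish). The matrix names `w_gate`, `w_up`, `w_down` and the tiny dimensions are illustrative assumptions, not Qwen2's actual weights or layer sizes.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def silu(z: float) -> float:
    """SiLU / Swish activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def swiglu_ffn(x: Vector, w_gate: Matrix, w_up: Matrix, w_down: Matrix) -> Vector:
    """SwiGLU feed-forward: w_down @ (silu(w_gate @ x) * (w_up @ x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]  # gated branch with SiLU
    up = matvec(w_up, x)                         # plain linear branch
    hidden = [g * u for g, u in zip(gate, up)]   # element-wise product
    return matvec(w_down, hidden)                # project back to model dim


# Toy example: model dim 2, hidden dim 3 (hypothetical weights).
x = [1.0, -0.5]
w_gate = [[0.5, 0.1], [-0.3, 0.8], [0.2, 0.2]]
w_up = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
w_down = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
print(swiglu_ffn(x, w_gate, w_up, w_down))
```

The gate branch decides how much of each hidden unit passes through, which is the "gated linear unit" part of the name; the hidden layer is wider than the model dimension, as in a regular Transformer feed-forward layer.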

Parameters & Context Length of Qwen2 0.5B Instruct

Qwen2 0.5B Instruct has 0.5B parameters, which places it in the small-model category: it runs quickly and needs few resources, which suits simpler tasks. Its 4k context length counts as a short context, adequate for brief interactions but limiting for long documents. Overall, the configuration favors accessibility and speed on constrained hardware over handling very long inputs.

- Parameter Size: 0.5B

- Context Length: 4k
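Because the prompt and the generated reply share the same 4k window, multi-turn applications usually drop older turns once the window fills. The sketch below keeps the most recent messages that fit. It assumes "4k" means 4,096 tokens and that each message's token count is already known (in practice the counts would come from the model's own tokenizer); the function `fit_to_context` and its `reserve_for_output` parameter are hypothetical names for illustration.

```python
from typing import List, Tuple

CONTEXT_LENGTH = 4096  # assumption: "4k" interpreted as 4,096 tokens


def fit_to_context(
    messages: List[Tuple[str, int]],
    reserve_for_output: int = 512,
    context_length: int = CONTEXT_LENGTH,
) -> List[Tuple[str, int]]:
    """Keep the newest (text, token_count) messages that fit in the window.

    Tokens reserved for the model's reply are subtracted from the budget
    first, because prompt and completion share the same context window.
    """
    budget = context_length - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output leaves no room for the prompt")
    kept: List[Tuple[str, int]] = []
    used = 0
    # Walk from newest to oldest so recent turns are preserved first.
    for text, n_tokens in reversed(messages):
        if used + n_tokens > budget:
            break
        kept.append((text, n_tokens))
        used += n_tokens
    kept.reverse()  # restore chronological order
    return kept


history = [("turn 1", 2000), ("turn 2", 1500), ("turn 3", 1200), ("turn 4", 900)]
print([text for text, _ in fit_to_context(history)])  # → ['turn 3', 'turn 4']
```

Reserving output tokens up front avoids a prompt that fits exactly but leaves the model no room to answer; the 512-token default is an arbitrary illustrative choice.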

Possible Intended Uses of Qwen2 0.5B Instruct

Qwen2 0.5B Instruct may be useful for language understanding, language generation, multilingual tasks, coding, mathematics, and reasoning. Given its 0.5B parameters and 4k context length, plausible applications include drafting short texts, translating between languages, working through math problems, and assisting with logical reasoning. Each of these uses needs thorough evaluation against specific needs and constraints. The model is best suited to scenarios that favor efficiency and simplicity over complex or resource-intensive workloads.

- language understanding

- language generation

- multilingual capability

- coding

- mathematics

- reasoning
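As an instruction-tuned model, Qwen2 0.5B Instruct expects conversations in a chat template. Qwen2 chat models use a ChatML-style layout with `<|im_start|>` and `<|im_end|>` markers; the sketch below assembles such a prompt by hand only to show the structure. In practice the tokenizer's bundled chat template (for example via `apply_chat_template` in Hugging Face Transformers) is the authoritative source, and the helper name `build_chatml_prompt` is hypothetical.

```python
from typing import Dict, List


def build_chatml_prompt(messages: List[Dict[str, str]]) -> str:
    """Render role/content messages in a ChatML-style layout.

    Each turn becomes "<|im_start|>{role}\\n{content}<|im_end|>\\n", and an
    open assistant turn is appended so the model generates the reply.
    """
    allowed = {"system", "user", "assistant"}
    parts = []
    for msg in messages:
        role = msg["role"]
        if role not in allowed:
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<|im_start|>{role}\n{msg['content']}<|im_end|>\n")
    parts.append("<|im_start|>assistant\n")  # generation prompt for the model
    return "".join(parts)


prompt = build_chatml_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Translate 'good morning' into French."},
])
print(prompt)
```

Ending with an open assistant turn tells the model that the next tokens it produces are the reply, which is what distinguishes an instruct prompt from plain text continuation.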

Possible Applications of Qwen2 0.5B Instruct

Possible application areas include language understanding, language generation, multilingual tasks, and problem solving. With 0.5B parameters and a 4k context length, the model could help with drafting text, translating between languages, or assisting with logical reasoning. Candidate uses include educational support, content creation, general-purpose coding assistance, simplifying complex information, and generating creative ideas. Each use case must be carefully assessed and tested before deployment to confirm it meets the specific requirements.

- language understanding

- language generation

- multilingual tasks

- problem-solving scenarios
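Since every application above needs testing before deployment, one minimal starting point is a small exact-match test set run against whatever function wraps the model. The sketch assumes a hypothetical `generate(prompt) -> str` callable and uses a stub in place of the real model so it runs offline. Exact match is a deliberately simple metric; open-ended tasks such as drafting text need human or rubric-based review instead.

```python
from typing import Callable, List, Tuple


def exact_match_accuracy(
    generate: Callable[[str], str],
    cases: List[Tuple[str, str]],
) -> float:
    """Fraction of (prompt, expected) cases whose output matches exactly.

    Outputs are stripped and lower-cased before comparison so trivial
    whitespace or capitalization differences are not counted as errors.
    """
    if not cases:
        raise ValueError("need at least one test case")
    hits = 0
    for prompt, expected in cases:
        answer = generate(prompt).strip().lower()
        if answer == expected.strip().lower():
            hits += 1
    return hits / len(cases)


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; knows one answer."""
    return "4" if prompt == "What is 2 + 2?" else "not sure"


cases = [("What is 2 + 2?", "4"), ("What is 3 * 3?", "9")]
print(exact_match_accuracy(stub_model, cases))  # → 0.5
```

Swapping `stub_model` for a function that calls the deployed model keeps the harness unchanged, so the same test set can be rerun after each prompt or quantization change.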

Quantized Versions & Hardware Requirements of Qwen2 0.5B Instruct

The q4 quantized version of Qwen2 0.5B Instruct is listed as requiring at least 8GB of VRAM, which corresponds to a mid-range GPU. This figure is conservative: at 4 bits per weight, 0.5 billion parameters occupy only about 0.25 GB, so the practical requirement is usually far lower, although the KV cache, activations, and runtime overhead add to it. As a medium-precision option, q4 balances output quality against memory use. Users should check their GPU's VRAM and system memory before deployment. The other available versions are fp16, q2, q3, q5, q6, and q8, each with different hardware demands.

- fp16

- q2

- q3

- q4

- q5

- q6

- q8
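A back-of-the-envelope check helps when reading hardware figures: weight memory is roughly parameter count × bits per weight ÷ 8 bytes. The sketch below applies this to the listed versions, assuming exactly 0.5 billion parameters and nominal bit widths (2 to 8 bits, and 16 for fp16). Real quantized files use somewhat more bits per weight because of per-block scale data, and runtime memory also includes the KV cache and activations, so treat the results as lower bounds for the weights alone.

```python
PARAMS = 0.5e9  # assumption: exactly 0.5 billion parameters

# Nominal bits per weight for each listed version (assumption: real
# quantization formats add per-block scale metadata on top of this).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(params: float, bits: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1e9 bytes)."""
    return params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>4}: ~{weight_memory_gb(PARAMS, bits):.3f} GB")
```

At q4 this gives about 0.25 GB and at fp16 about 1 GB, so the weights themselves are a small fraction of the 8GB figure quoted above.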

Conclusion

Qwen2 0.5B Instruct is a compact large language model with 0.5B parameters and a 4k context length, designed for efficient operation on resource-constrained systems. It supports language understanding, generation, multilingual tasks, coding, mathematics, and reasoning, which makes it a candidate for applications that value simplicity and speed over complex processing.

References

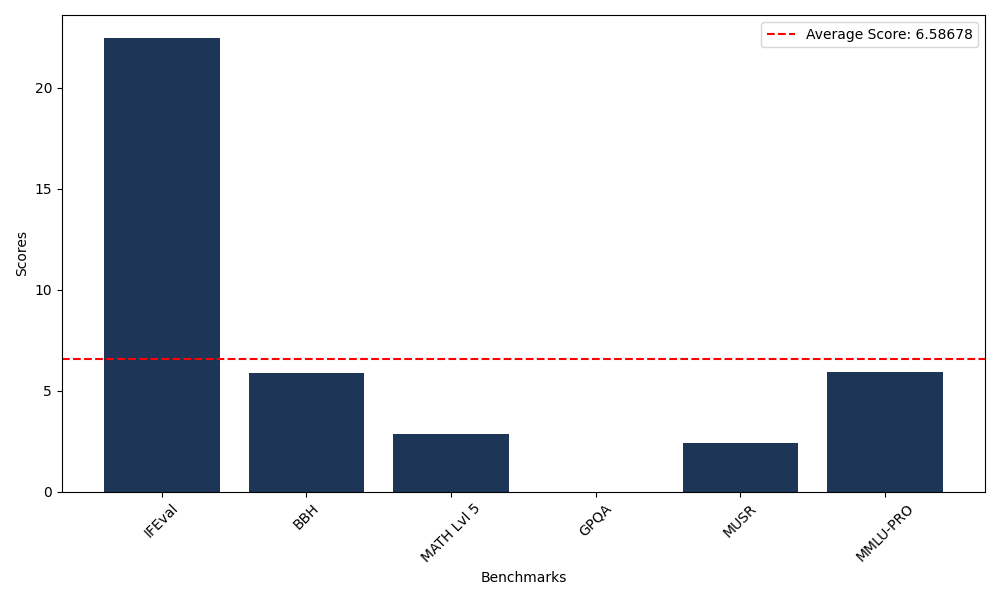

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 22.47 |
| Big Bench Hard (BBH) | 5.88 |
| MATH, Level 5 problems (MATH Lvl 5) | 2.87 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 2.41 |
| Massive Multitask Language Understanding (MMLU-PRO) | 5.90 |
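For a single summary number, the six benchmark scores can be averaged. The sketch below computes an unweighted mean; this is one simple convention, not necessarily how any particular leaderboard aggregates its results.

```python
# Scores copied from the benchmark table.
scores = {
    "IFEval": 22.47,
    "BBH": 5.88,
    "MATH Lvl 5": 2.87,
    "GPQA": 0.00,
    "MuSR": 2.41,
    "MMLU-PRO": 5.90,
}

mean_score = sum(scores.values()) / len(scores)
print(f"Unweighted mean: {mean_score:.2f}")  # → Unweighted mean: 6.59
```

The mean is dominated by IFEval; every other score is below 6, which is consistent with the model's positioning for simple tasks.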
