Qwen2 1.5B Instruct - Model Details

Qwen2 1.5B Instruct is a large language model with 1.5B parameters, developed by the Qwen team at Alibaba Cloud. It is listed under multiple licenses: Apache License 2.0 (Apache-2.0), Tongyi Qianwen License Agreement (TQ-LA), and The Unlicense (Unlicense). The model is designed for instruction-following tasks, with an emphasis on multilingual data, extended context length, and improved coding and mathematics capabilities.

Description of Qwen2 1.5B Instruct

Qwen2 is a series of large language models comprising base models and instruction-tuned variants, with sizes ranging from 0.5B to 72B parameters. The series includes a Mixture-of-Experts (MoE) model and is competitive with proprietary models in language understanding, generation, multilingual capability, coding, mathematics, and reasoning. The models use a Transformer architecture with SwiGLU activation, attention QKV bias, and grouped-query attention (GQA). They also use an improved tokenizer that adapts to multiple natural languages and to code.
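The two architectural terms above can be illustrated with a minimal pure-Python sketch. It is not the Qwen2 implementation: the weight matrices, toy dimensions, and function names are hypothetical, and only the general form of a SwiGLU feed-forward block (a SiLU-gated projection multiplied elementwise with an "up" projection, then projected back down) and the query-to-KV head sharing used by grouped-query attention are shown.

```python
import math


def silu(z: float) -> float:
    """SiLU (swish) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def swiglu_mlp(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward block: down(SiLU(gate(x)) * up(x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)


def kv_head_for_query_head(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Grouped-query attention: consecutive query heads share one KV head."""
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size


# Toy, hypothetical weights (hidden size 2, intermediate size 3).
x = [1.0, 2.0]
w_gate = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
w_up = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
w_down = [[1.0, 1.0, 1.0], [1.0, 0.0, 0.0]]
out = swiglu_mlp(x, w_gate, w_up, w_down)

# With 8 query heads and 2 KV heads (illustrative numbers), heads 0-3 share
# KV head 0 and heads 4-7 share KV head 1.
mapping = [kv_head_for_query_head(q, 8, 2) for q in range(8)]
```

Sharing KV heads across groups of query heads shrinks the key/value cache, which is the usual motivation for grouped-query attention in small models.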

Parameters & Context Length of Qwen2 1.5B Instruct

Qwen2 1.5B Instruct has 1.5B parameters and a 4k context length. At 1.5B parameters it sits in the small-model category, offering fast, resource-efficient inference suited to simple tasks. The 4k context length is short: it works well for concise inputs but limits the model's ability to handle very long texts. Together, these specifications favor applications where efficiency and simplicity matter more than handling extensive or complex content.

- Parameter size: 1.5B

- Context length: 4k
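Because the context window covers the prompt and the generated tokens together, a simple budget check can help when planning requests. The sketch below assumes that "4k" means 4096 tokens; the constant and function names are hypothetical, and token counts would come from whichever tokenizer is actually in use.

```python
# Assumption: "4k" is read as 4096 tokens.
CONTEXT_LENGTH = 4096


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Return True if the prompt plus the requested output fits the window."""
    return prompt_tokens + max_new_tokens <= context_length


def max_new_tokens_allowed(prompt_tokens: int,
                           context_length: int = CONTEXT_LENGTH) -> int:
    """Largest generation budget left after the prompt (never negative)."""
    return max(0, context_length - prompt_tokens)


print(fits_in_context(3000, 1000))   # 4000 tokens in total
print(max_new_tokens_allowed(3500))  # tokens left for generation
```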

Possible Intended Uses of Qwen2 1.5B Instruct

With 1.5B parameters and a 4k context length, Qwen2 1.5B Instruct may be useful for content generation, multilingual communication, and code development. It could help draft text such as articles or creative writing, though its effectiveness in any specific scenario needs testing. Its multilingual support could enable cross-language interactions, but performance across languages and dialects has yet to be validated. For code development, it might generate or explain code snippets, though its accuracy and reliability on technical tasks need further evaluation. In each case, suitability should be confirmed before relying on the model.

- content generation

- multilingual communication

- code development
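One way to try these uses is through the Hugging Face `transformers` library. The sketch below makes several assumptions that do not come from this page: that the weights are published under the repository name `Qwen/Qwen2-1.5B-Instruct`, that `transformers`, `torch`, and `accelerate` (needed for `device_map="auto"`) are installed, and that the default system prompt is acceptable. The helper names are hypothetical.

```python
# Assumed Hugging Face repository name; adjust if the weights live elsewhere.
MODEL_ID = "Qwen/Qwen2-1.5B-Instruct"


def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful assistant."):
    """Build a chat-style message list for an instruction-tuned model."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str, max_new_tokens: int = 256) -> str:
    """Download the model (on first call) and generate a single reply."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    # Render the messages with the chat template shipped with the tokenizer.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(build_messages("Translate 'good morning' into French."))
```

Calling `generate_reply("Explain what a Python list comprehension is.")` would exercise the code-explanation use case; outputs should be reviewed rather than trusted, in line with the caveats above.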

Possible Applications of Qwen2 1.5B Instruct

Beyond the intended uses above, Qwen2 1.5B Instruct could be applied to generating creative or informative text, facilitating cross-language interactions, assisting with coding tasks, and supporting educational content creation. Applications in customer service or collaborative writing may also emerge. None of these has been validated here, so each use case should be tested rigorously against its specific requirements before deployment.

- content generation

- multilingual communication

- code development

- educational content creation

Quantized Versions & Hardware Requirements of Qwen2 1.5B Instruct

The q4 quantization of Qwen2 1.5B Instruct offers a balance between precision and resource use. The recommended setup for GPU deployment is at least 8GB of VRAM, 32GB of system memory, and adequate cooling. This version suits systems with moderate resources, where efficiency and accessibility are the priority. Compared with higher-precision formats such as fp16, q4 reduces memory and compute demands, making the model more accessible for general-purpose tasks.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
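The effect of quantization on weight storage can be approximated as parameters × bits per weight ÷ 8. The sketch below applies that arithmetic to the listed versions. It assumes nominal bit widths (for example, exactly 4 bits for q4); real quantized files usually carry extra scale and metadata overhead, so actual sizes will be somewhat larger.

```python
PARAMETER_COUNT = 1.5e9  # 1.5B parameters, as stated above

# Assumption: nominal bits per weight for each listed version.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(parameter_count: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return parameter_count * bits_per_weight / 8 / 1e9


estimates = {
    name: weight_memory_gb(PARAMETER_COUNT, bits)
    for name, bits in NOMINAL_BITS.items()
}

for name, gb in estimates.items():
    print(f"{name}: ~{gb:.2f} GB of weights")
```

These figures cover the weights only. The KV cache, activations, and runtime overhead add to them, so they are a lower bound rather than a hardware requirement.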

Conclusion

Qwen2 1.5B Instruct is a large language model with 1.5B parameters and a 4k context length, available in several quantized versions to suit different deployment needs. It is designed for efficiency and may support tasks such as content generation, multilingual communication, and code development.

References

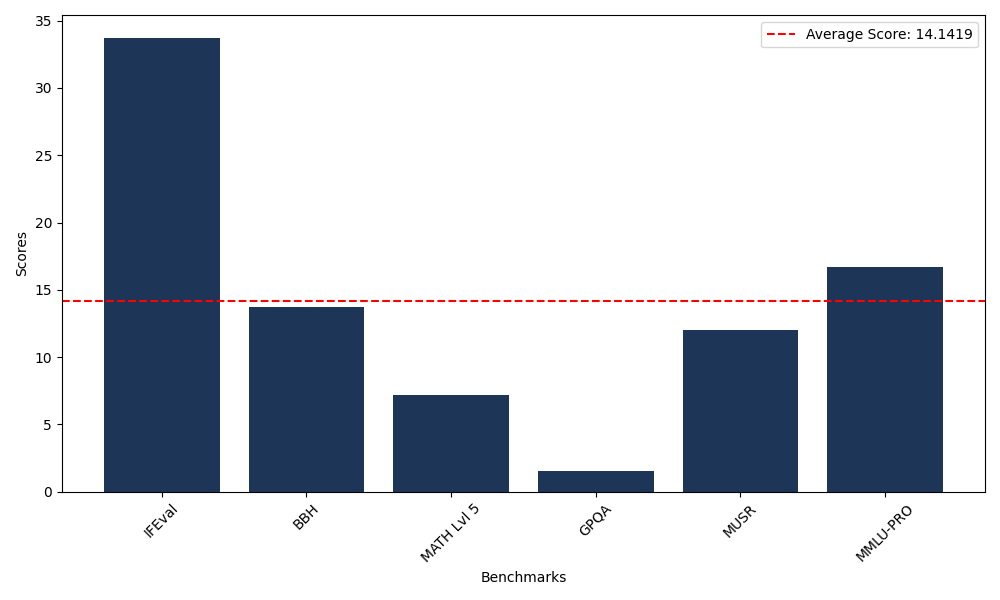

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 33.71 |
| BIG-Bench Hard (BBH) | 13.70 |
| Mathematics Aptitude Test of Heuristics, Level 5 (MATH Lvl 5) | 7.18 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.57 |
| Multistep Soft Reasoning (MuSR) | 12.03 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 16.68 |
