Qwen2 72B - Model Details

Qwen2 72B is a large language model with 72 billion parameters, developed by the Qwen team at Alibaba Cloud. It is trained on multilingual data and offers an extended context length along with improved coding and mathematics performance relative to the earlier Qwen1.5 series. The model is listed under multiple licenses, including the Apache License 2.0, the Tongyi Qianwen License Agreement, and The Unlicense.

Description of Qwen2 72B

Qwen2 72B is the largest model in the Qwen2 series, which includes base and instruction-tuned models ranging from 0.5B to 72B parameters. It is a decoder-only language model built on the Transformer architecture with SwiGLU activation, attention QKV bias, and grouped-query attention (GQA). Its tokenizer is adapted to multiple natural languages and code. The model is designed for language understanding, generation, multilingual tasks, coding, mathematics, and reasoning. The 72B variant is listed under the Apache License 2.0, the Tongyi Qianwen License Agreement, and The Unlicense.
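To make two of the architectural terms above concrete, here is a minimal, dependency-free sketch of SwiGLU gating and of how grouped-query attention assigns query heads to shared key/value heads. The function names and the toy head counts are hypothetical and chosen for illustration; this is not the model's actual implementation or configuration.

```python
import math


def silu(x: float) -> float:
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def swiglu(gate: list[float], up: list[float]) -> list[float]:
    """SwiGLU gating: SiLU(gate) multiplied elementwise with the 'up' values.

    In a full feed-forward block, `gate` and `up` would be two separate
    linear projections of the same hidden state. They are passed in
    directly here to keep the sketch free of matrix code.
    """
    if len(gate) != len(up):
        raise ValueError("gate and up must have the same length")
    return [silu(g) * u for g, u in zip(gate, up)]


def kv_head_for_query_head(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Grouped-query attention: consecutive query heads share one KV head."""
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("num_q_heads must be divisible by num_kv_heads")
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size


# Hypothetical toy sizes (8 query heads, 2 KV heads), not Qwen2 72B's real config.
mapping = [kv_head_for_query_head(h, 8, 2) for h in range(8)]
print(mapping)
print(swiglu([0.0, 1.0], [2.0, 3.0]))
```

Because several query heads read from the same key/value head, grouped-query attention stores fewer key/value tensors than standard multi-head attention, which matters most at long context lengths.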

Parameters & Context Length of Qwen2 72B

With 72B parameters, Qwen2 72B falls into the very large model category: well suited to complex tasks, but demanding in compute and memory. Its 128k context length sits in the very long context range, so it can process extensive texts in a single pass, at the cost of additional memory and compute as the input grows. Together, these properties suit applications that need deep analysis of lengthy inputs, though the hardware cost may rule the model out in some environments.

- Parameter Size: 72B (Very Large Models: Best for complex tasks, requiring significant resources)

- Context Length: 128k (Very Long Contexts: Ideal for very long texts, highly resource-intensive)
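As a rough illustration of why long contexts are resource-intensive, the sketch below estimates the key/value cache size for a decoder-only Transformer. The layer count, KV head count, and head dimension are assumptions made for this example (they do not appear in this page), so check them against the model's published configuration before relying on the numbers.

```python
def kv_cache_bytes(seq_len: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Approximate KV cache size for one sequence.

    The leading factor 2 accounts for storing both a key and a value
    tensor in every layer. bytes_per_value=2 corresponds to fp16.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed architecture values, for illustration only.
ASSUMED_LAYERS = 80
ASSUMED_KV_HEADS = 8
ASSUMED_HEAD_DIM = 128

# "128k" is interpreted here as 128 * 1024 tokens.
CONTEXT_TOKENS = 128 * 1024

full_context = kv_cache_bytes(CONTEXT_TOKENS, ASSUMED_LAYERS,
                              ASSUMED_KV_HEADS, ASSUMED_HEAD_DIM)
short_context = kv_cache_bytes(4096, ASSUMED_LAYERS,
                               ASSUMED_KV_HEADS, ASSUMED_HEAD_DIM)

print(f"128k tokens: {full_context / 2**30:.2f} GiB")   # 40.00 GiB
print(f"4k tokens:   {short_context / 2**30:.2f} GiB")  # 1.25 GiB
```

Under these assumptions the cache grows linearly with sequence length, so filling the full context window costs far more memory than typical short prompts, on top of the memory needed for the weights themselves.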

Possible Intended Uses of Qwen2 72B

Qwen2 72B is a versatile large language model that could support natural language processing tasks such as text summarization, question answering, or sentiment analysis, though its effectiveness in any specific scenario requires testing. It might also serve as a tool for code generation and analysis, helping developers write or optimize code, but its performance in specialized programming domains needs validation. The model's multilingual training could additionally support multilingual communication and translation, although real-world accuracy and handling of cultural nuance demand careful evaluation. These uses highlight the model's flexibility and the need for targeted experimentation and verification before deployment.

- natural language processing tasks

- code generation and analysis

- multilingual communication and translation

Possible Applications of Qwen2 72B

Qwen2 72B could support advanced text generation for creative writing or content creation, where its multilingual capabilities might be an advantage. It could also assist with code analysis and development by generating or optimizing code. Its multilingual training might help with translation or language learning. In data analysis, its ability to process large contexts might help in drawing insights from extensive datasets. Each of these applications requires thorough evaluation and testing to confirm that the model meets the specific need and performs reliably in real-world conditions.

- natural language processing tasks

- code generation and analysis

- multilingual communication and translation

- data analysis and reporting

Quantized Versions & Hardware Requirements of Qwen2 72B

Even the mid-range q4 quantization of Qwen2 72B, which trades some precision for a smaller memory footprint, needs substantial hardware. Running it likely requires one or more GPUs totaling at least 48GB of VRAM (e.g., A100, RTX 4090, or RTX 6000-series cards), along with at least 32GB of system RAM, adequate cooling, and a sufficient power supply. Lower-bit variants reduce memory use at some cost in accuracy, while higher-bit variants and fp16 need considerably more memory.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
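The arithmetic behind these requirements can be sketched as follows. The bits-per-weight values are nominal assumptions taken from the version names; real quantization formats usually store extra scaling data, so actual files tend to be somewhat larger, and the estimate covers weights only (no KV cache or runtime overhead).

```python
# Nominal bits per weight, assumed from the version names listed above.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

PARAMS = 72e9  # 72 billion parameters


def weight_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


estimates = {name: weight_gb(PARAMS, bits) for name, bits in NOMINAL_BITS.items()}

for name, gb in estimates.items():
    print(f"{name:>4}: ~{gb:.0f} GB")
```

At the nominal 4 bits per weight this gives roughly 36 GB for the weights alone, which is consistent with the 48GB VRAM guidance above once cache and runtime overhead are added.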

Conclusion

Qwen2 72B is a large language model with 72 billion parameters and a 128k context length, designed for complex tasks like multilingual processing, coding, and reasoning. It offers flexibility through multiple quantized versions, making it suitable for diverse applications while requiring careful evaluation for specific use cases.

References

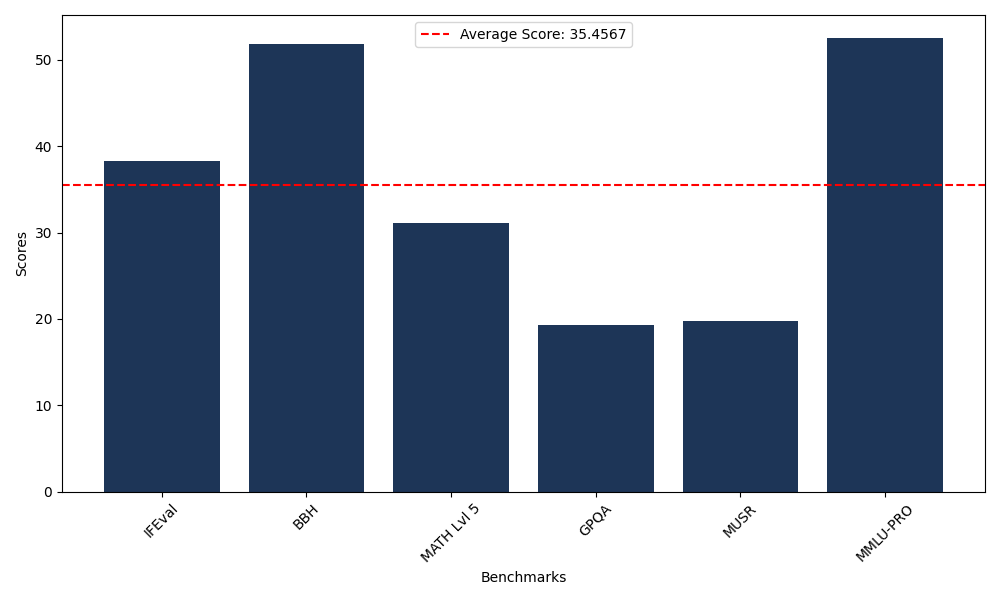

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 38.24 |
| BIG-Bench Hard (BBH) | 51.86 |
| MATH, Level 5 problems (MATH Lvl 5) | 31.12 |
| Graduate-Level Google-Proof Q&A (GPQA) | 19.24 |
| Multistep Soft Reasoning (MuSR) | 19.73 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 52.56 |
