Qwen2 72B Instruct - Model Details

Qwen2 72B Instruct is a large language model developed by Qwen, a company, featuring 72b parameters. It is designed for instruction-following tasks and is trained on multilingual data with extended context length and enhanced coding and mathematics capabilities. The model is available under multiple licenses including Apache License 2.0, Tongyi Qianwen License Agreement, and The Unlicense, offering flexibility for various use cases.

Description of Qwen2 72B Instruct

Qwen2 is a series of large language models with base and instruction-tuned variants ranging from 0.5 to 72 billion parameters. It employs a Transformer architecture with SwiGLU activation, attention QKV bias, and group query attention to enhance efficiency and performance. The model supports an extended context length of up to 131,072 tokens and includes an improved tokenizer adapted for multiple natural languages and code. It demonstrates strong capabilities in language understanding, generation, multilingual tasks, coding, mathematics, and reasoning, competing effectively with proprietary models in benchmarks.

Parameters & Context Length of Qwen2 72B Instruct

Qwen2 72B Instruct is a large language model with 72b parameters, placing it in the very large models category, which excels at complex tasks but requires significant computational resources. Its 128k context length enables handling extremely long texts, making it ideal for tasks involving extensive data, though it demands higher memory and processing power. The combination of a large parameter count and extended context length positions Qwen2 72B Instruct as a powerful tool for advanced applications, albeit with substantial resource requirements.

- Parameter Size: 72b (Very Large Models: Best for complex tasks, requiring significant resources)

- Context Length: 128k (Very Long Contexts: Best for very long texts, highly resource-intensive)

Possible Intended Uses of Qwen2 72B Instruct

Qwen2 72B Instruct is a large language model designed for tasks requiring advanced reasoning and adaptability, with possible applications including answering complex questions across multiple domains, generating and debugging code, and facilitating multilingual communication and translation. Its large parameter size and extended context length suggest it could support possible uses such as analyzing extensive documents, handling intricate programming tasks, or enabling real-time language translation for diverse audiences. However, these possible uses would need thorough testing and validation to ensure effectiveness and alignment with specific requirements. The model’s capabilities also raise possible opportunities for research, education, or creative projects, though further exploration is necessary to understand its limitations and optimal deployment.

- answering complex questions across multiple domains

- code generation and debugging

- multilingual communication and translation

Possible Applications of Qwen2 72B Instruct

Qwen2 72B Instruct is a large-scale language model with possible applications in domains requiring advanced reasoning, multilingual support, and extended context handling. Possible uses could include tackling complex, cross-domain questions, generating and refining code, facilitating multilingual communication, and enabling translation tasks. These possible applications leverage the model’s 72b parameter size and 128k context length, but they remain possible opportunities that require rigorous testing and validation to ensure alignment with specific needs. Possible scenarios might also involve analyzing extensive documents or supporting creative workflows, though each possible use must be thoroughly evaluated before deployment.

- answering complex questions across multiple domains

- code generation and debugging

- multilingual communication and translation

- handling extended context for document analysis

Quantized Versions & Hardware Requirements of Qwen2 72B Instruct

Qwen2 72B Instruct with the q4 quantization offers a possible balance between precision and performance, requiring at least 24GB VRAM for deployment on a single GPU, though higher VRAM or multiple GPUs may be necessary for optimal efficiency. Possible applications of this version would depend on the specific hardware, with system memory of at least 32GB and adequate cooling recommended. Possible users should evaluate their GPU capabilities, as the 72b parameter size and q4 quantization demand significant resources.

- fp16, q2, q3, q4, q5, q6, q8

Conclusion

Qwen2 72B Instruct is a large language model with 72b parameters and a 128k context length, designed for complex tasks like multilingual communication, code generation, and cross-domain question answering. It offers multiple quantized versions (fp16, q2, q3, q4, q5, q6, q8) to balance performance and resource requirements, making it adaptable for various applications while requiring careful evaluation for specific use cases.

References

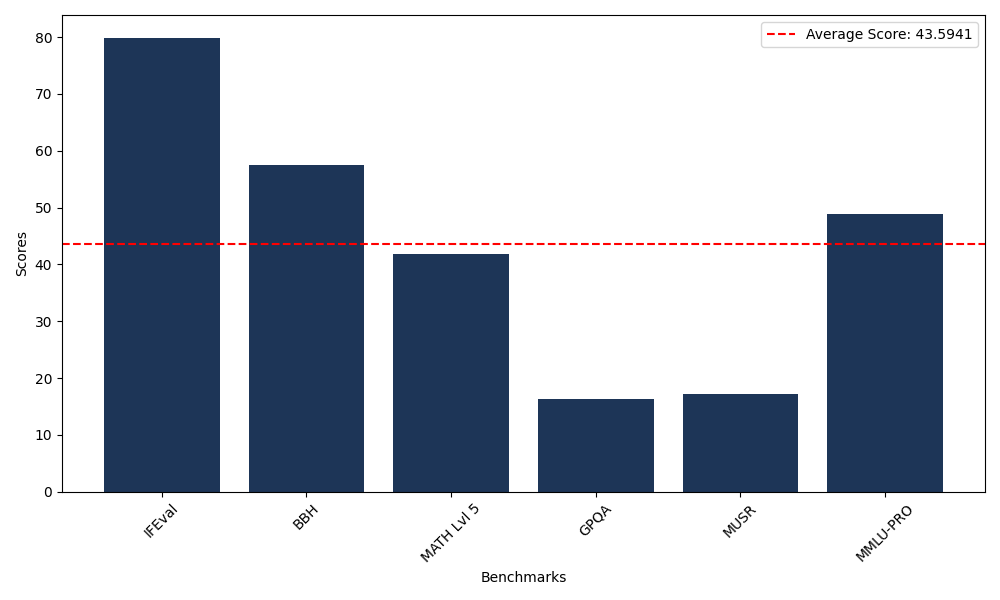

Benchmarks

| Benchmark Name | Score |

|---|---|

| Instruction Following Evaluation (IFEval) | 79.89 |

| Big Bench Hard (BBH) | 57.48 |

| Mathematical Reasoning Test (MATH Lvl 5) | 41.77 |

| General Purpose Question Answering (GPQA) | 16.33 |

| Multimodal Understanding and Reasoning (MUSR) | 17.17 |

| Massive Multitask Language Understanding (MMLU-PRO) | 48.92 |

Comments

No comments yet. Be the first to comment!

Leave a Comment