Qwen2.5 0.5B Instruct - Model Details

Qwen2.5 0.5B Instruct is an instruction-tuned language model with 0.5 billion parameters, developed by Alibaba's Qwen team. It is released under the Apache License 2.0, which permits broad use and modification. The model targets improved factual knowledge and coding ability in a compact size.

Description of Qwen2.5 0.5B Instruct

Qwen2.5 is the latest series of Qwen large language models, with significant advances in knowledge, coding, mathematics, instruction following, and long text generation. Across the series, long-context processing reaches up to 128K tokens, with up to 8K tokens generated in a single response; the 0.5B Instruct variant is listed with a 32k context length. The model is described as strong at understanding structured data and at generating structured outputs such as JSON. It supports more than 29 languages, including Chinese, English, French, Spanish, Portuguese, German, Italian, Russian, Japanese, Korean, Vietnamese, Thai, and Arabic.
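Because the model is described as producing structured outputs such as JSON, a caller will usually want to check a reply before using it. The following is a minimal sketch, assuming the reply arrives as a plain string that may wrap a JSON object in extra text. The helper name `extract_json` is hypothetical and is not part of any Qwen tooling; it relies only on Python's standard `json` module.

```python
import json


def extract_json(reply: str):
    """Return the first valid JSON object found in `reply`, or None.

    Hypothetical helper: model replies sometimes surround the JSON
    with prose, so we scan for each '{' and try to decode from there.
    """
    decoder = json.JSONDecoder()
    start = reply.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value starting at `start`
            # and ignores any trailing text after it.
            obj, _end = decoder.raw_decode(reply, start)
            return obj
        except json.JSONDecodeError:
            # Not valid JSON at this brace; try the next one.
            start = reply.find("{", start + 1)
    return None


if __name__ == "__main__":
    reply = 'Here is the result: {"language": "French", "tokens": 12} Hope that helps.'
    print(extract_json(reply))
```

Returning `None` instead of raising lets the caller decide whether to retry the request or fall back to treating the reply as free text.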

Parameters & Context Length of Qwen2.5 0.5B Instruct

Qwen2.5 0.5B Instruct is a small model with 0.5B parameters, which makes it fast and resource-efficient for simple tasks. Its 32k context length falls into the long-context category: it can handle extended texts, although long inputs need more compute and memory than short ones. This combination suits work on long inputs where the heavy demands of larger models are not acceptable.

- Parameter Size: 0.5b (Small models, fast and resource-efficient, suitable for simple tasks)

- Context Length: 32k (Long contexts, ideal for long texts, requires more resources)
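The context length and the per-response generation limit together determine how much room is left for the prompt. The sketch below works through that arithmetic, assuming "32k" means 32,768 tokens and "8K" means 8,192 tokens, and assuming the context window is shared between prompt and generated tokens. The constant and function names are illustrative only.

```python
# Assumptions (not stated exactly on this page):
# "32k" context = 32 * 1024 tokens, "8K" generation = 8 * 1024 tokens.
CONTEXT_TOKENS = 32 * 1024
MAX_NEW_TOKENS = 8 * 1024


def max_prompt_tokens(context: int = CONTEXT_TOKENS,
                      reserve: int = MAX_NEW_TOKENS) -> int:
    """Largest prompt that still leaves `reserve` tokens for the reply."""
    if reserve >= context:
        raise ValueError("reserve must be smaller than the context window")
    return context - reserve


def fits(prompt_tokens: int, new_tokens: int,
         context: int = CONTEXT_TOKENS) -> bool:
    """True if prompt plus requested output fit in the context window."""
    return prompt_tokens + new_tokens <= context


if __name__ == "__main__":
    print(max_prompt_tokens())   # prompt budget when reserving a full reply
    print(fits(30_000, 4_000))   # a long prompt with a shorter reply
```

Token counts depend on the tokenizer, so in practice the prompt should be measured with the model's own tokenizer rather than estimated from character counts.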

Quantized Versions & Hardware Requirements of Qwen2.5 0.5B Instruct

The q4 quantized version of Qwen2.5 0.5B Instruct offers a good balance between precision and performance. The recommendation given here is at least 8GB of VRAM for GPU deployment, or a multi-core CPU for CPU-only inference. At 4 bits per weight, the weights of a 0.5B-parameter model occupy roughly 0.25 GB, so this figure leaves substantial headroom and the model may also run in environments with more limited resources.

Available versions: fp16, q2, q3, q4, q5, q6, q8
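To compare the listed versions, the weight footprint can be estimated as parameters times bits per weight, divided by 8. The sketch below is a rough lower bound under stated assumptions: a nominal 0.5 billion parameters, and the nominal bit width implied by each label. Real quantized files store extra scaling data, and inference also needs memory for activations and the KV cache, so actual usage will be higher. The names `BITS_PER_WEIGHT` and `weight_gib` are illustrative.

```python
# Nominal parameter count taken from the "0.5b" figure (assumption:
# exactly 0.5e9; the true count may differ slightly).
PARAMS = 0.5e9

# Nominal bits per weight implied by each label (assumption: real
# quantization formats add per-block scales, so they use a bit more).
BITS_PER_WEIGHT = {
    "fp16": 16, "q2": 2, "q3": 3, "q4": 4, "q5": 5, "q6": 6, "q8": 8,
}


def weight_gib(label: str, params: float = PARAMS) -> float:
    """Approximate size of the weights alone, in GiB."""
    bits = BITS_PER_WEIGHT[label]
    return params * bits / 8 / 2**30


if __name__ == "__main__":
    for label in BITS_PER_WEIGHT:
        print(f"{label:>4}: {weight_gib(label):.2f} GiB")
```

Even the fp16 version comes out under 1 GiB of weights by this estimate, which is why a model of this size is a candidate for CPU-only or low-memory setups.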

Conclusion

Qwen2.5 0.5B Instruct is a small model with 0.5B parameters and a 32k context length, balancing efficiency and performance for tasks that involve extended text. It supports instruction following, long text generation, structured data understanding, and more than 29 languages, which makes it suitable for a range of lightweight applications.

References

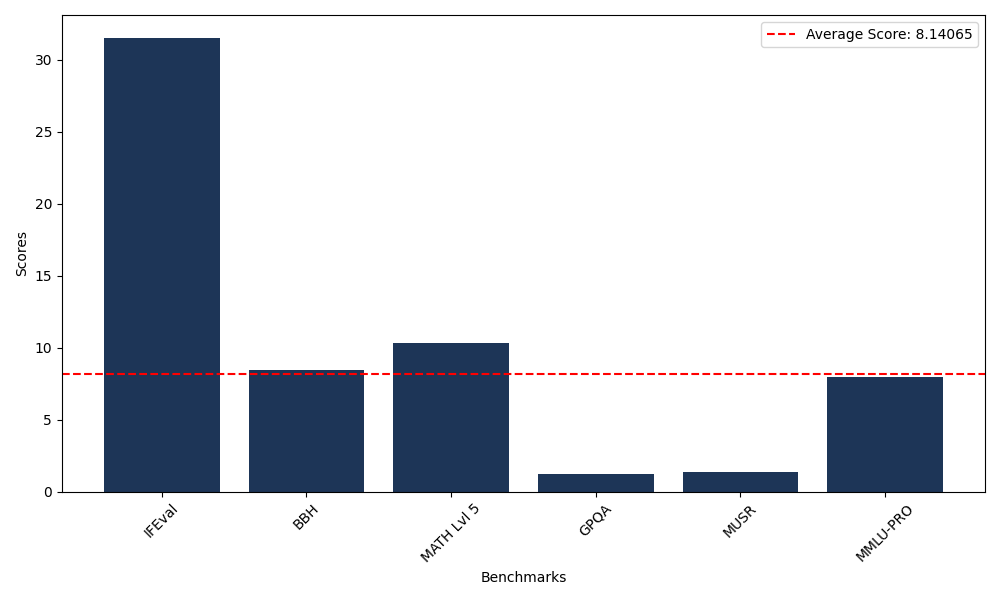

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 30.71 |
| BIG-Bench Hard (BBH) | 8.43 |
| MATH, Level 5 problems (MATH Lvl 5) | 0.00 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.01 |
| Multistep Soft Reasoning (MuSR) | 0.94 |
| Massive Multitask Language Understanding, Pro version (MMLU-PRO) | 7.75 |
| Instruction-Following Evaluation (IFEval) | 31.53 |
| BIG-Bench Hard (BBH) | 8.17 |
| MATH, Level 5 problems (MATH Lvl 5) | 10.35 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.23 |
| Multistep Soft Reasoning (MuSR) | 1.37 |
| Massive Multitask Language Understanding, Pro version (MMLU-PRO) | 8.00 |
