Qwen2.5 1.5B Instruct - Model Details

Qwen2.5 1.5B Instruct is a 1.5B-parameter language model developed by Alibaba Qwen and released under the Apache License 2.0. It is tuned for instruction-following tasks, with an emphasis on factual knowledge and coding, which makes it a candidate for applications that need precise, technically grounded responses from a small model.

Description of Qwen2.5 1.5B Instruct

Qwen2.5 is a series of Qwen large language models that improves on earlier Qwen releases in knowledge, coding, mathematics, instruction following, long text generation, structured data understanding, and multilingual support. The 1.5B parameter model is a causal language model whose architecture uses RoPE, SwiGLU, and RMSNorm. It supports a context length of 32,768 tokens and can generate up to 8,192 tokens.
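A minimal sketch of how an instruction-tuned checkpoint like this one is commonly queried through the Hugging Face `transformers` library. The Hub identifier `Qwen/Qwen2.5-1.5B-Instruct`, the helper names `build_messages` and `generate_reply`, the default system prompt, and the default of 512 new tokens are assumptions made for illustration and are not stated on this page. Passing `device_map="auto"` also assumes the `accelerate` package is installed.

```python
from typing import Dict, List

# Assumed Hugging Face Hub identifier; not stated on this page.
MODEL_ID = "Qwen/Qwen2.5-1.5B-Instruct"
MAX_GENERATION_TOKENS = 8192  # generation limit quoted in the description


def build_messages(
    user_prompt: str, system_prompt: str = "You are a helpful assistant."
) -> List[Dict[str, str]]:
    """Return a chat-style message list (hypothetical helper)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str, max_new_tokens: int = 512) -> str:
    """Load the model and generate one reply (downloads weights on first use)."""
    if not 0 < max_new_tokens <= MAX_GENERATION_TOKENS:
        raise ValueError(f"max_new_tokens must be in 1..{MAX_GENERATION_TOKENS}")

    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )

    # Render the messages with the chat template shipped with the tokenizer.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Write a Python function that reverses a string.")` would load the checkpoint and return the decoded reply. Loading the model inside the function keeps the sketch short; a real application would load it once and reuse it.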

Parameters & Context Length of Qwen2.5 1.5B Instruct

With 1.5B parameters, Qwen2.5 1.5B Instruct sits at the small end of open-source LLMs. Models of this size are typically fast and resource-efficient, and are best suited to simpler tasks. Its 32k context length falls into the long context category, so it can take in extended texts and long sequences, although memory and compute requirements grow as more of that window is used. The combination gives a lightweight model that can still process detailed, lengthy inputs.

- Parameter Size: 1.5B

- Context Length: 32k (32,768 tokens)
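The two limits interact: a long prompt leaves less room for output. The sketch below works out how many tokens can still be generated for a given prompt size. It assumes that prompt and generated tokens share the same 32,768-token window, as is usual for causal language models, and it reuses the 8,192-token generation limit given in the description. The function name `max_new_tokens_for` is hypothetical.

```python
CONTEXT_LENGTH = 32_768  # total context window, in tokens
MAX_GENERATION = 8_192   # maximum tokens generated per call


def max_new_tokens_for(prompt_tokens: int) -> int:
    """Return how many tokens can still be generated after a prompt of this size.

    Assumes prompt and output share one context window.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    remaining = CONTEXT_LENGTH - prompt_tokens
    # Capped by the generation limit, and never negative.
    return max(0, min(MAX_GENERATION, remaining))


print(max_new_tokens_for(1_000))   # 8192: the generation limit is the binding cap
print(max_new_tokens_for(30_000))  # 2768: only the rest of the window is left
print(max_new_tokens_for(40_000))  # 0: the prompt alone exceeds the window
```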

Possible Intended Uses of Qwen2.5 1.5B Instruct

Qwen2.5 1.5B Instruct is a general-purpose model with possible uses in natural language processing, code generation, and multilingual communication. It supports languages including Japanese, English, Russian, Italian, French, Chinese, Korean, Portuguese, Thai, Arabic, Vietnamese, German, and Spanish, which makes it a candidate for cross-language interactions. Its architecture and long context window may also suit tasks that involve structured data or longer sequences, such as technical writing, language translation, or collaborative coding. None of these uses is guaranteed by the model's specifications alone, so each should be tested and validated against the specific need.

- natural language processing tasks

- code generation

- multilingual communication

Possible Applications of Qwen2.5 1.5B Instruct

Qwen2.5 1.5B Instruct has possible applications in technical documentation, multilingual customer support, code assistance, and content creation. Its long context window and multilingual support could help with tasks that require nuanced language understanding or cross-lingual interaction. Its small size may suit deployments where hardware is constrained, and its instruction-following focus may help with structured tasks. Each application must be evaluated and tested against its specific requirements before deployment.

- technical documentation

- multilingual customer support

- code assistance

- content creation

Quantized Versions & Hardware Requirements of Qwen2.5 1.5B Instruct

The medium Q4 quantization of Qwen2.5 1.5B Instruct balances precision and performance. A GPU with at least 8GB of VRAM is recommended, and 12GB gives more headroom. This is a conservative figure: 1.5B parameters at about 4 bits each occupy under 1GB for the weights alone, so mid-range GPUs can run this version provided the system also has sufficient RAM and cooling. Quantization in general lowers the model's resource demands, which helps users with limited hardware. Actual requirements vary with workload, context length, and the inference runtime used.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
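As a rough guide to how these versions compare, weight storage can be estimated as parameters × bits per weight ÷ 8. The sketch below applies that formula under the assumption that each label maps to its nominal bit width (q4 to 4 bits, and so on). Real quantized files mix precisions and store scaling metadata, so they run somewhat larger, and the estimate excludes the KV cache, activations, and runtime overhead. The names `NOMINAL_BITS` and `weight_size_gb` are hypothetical.

```python
PARAMETERS = 1.5e9  # parameter count quoted on this page

# Assumed nominal bits per weight for each listed version.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_size_gb(fmt: str, parameters: float = PARAMETERS) -> float:
    """Estimate weight storage in gigabytes (1 GB = 1e9 bytes) for one version."""
    bits = NOMINAL_BITS[fmt]
    return parameters * bits / 8 / 1e9


# Print a lower-bound size for every listed version.
for fmt in NOMINAL_BITS:
    print(f"{fmt:>4}: ~{weight_size_gb(fmt):.2f} GB")
```

Under these assumptions the fp16 weights come to about 3 GB and the q4 weights to about 0.75 GB, which is a lower bound on memory use, not a hardware recommendation.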

Conclusion

Qwen2.5 1.5B Instruct is a 1.5B-parameter language model designed for instruction-following tasks. It uses architectural components such as RoPE and SwiGLU and is released under the Apache License 2.0. With a 32k context length and multilingual support, it targets coding, factual knowledge, and longer text generation while keeping resource requirements low.

References

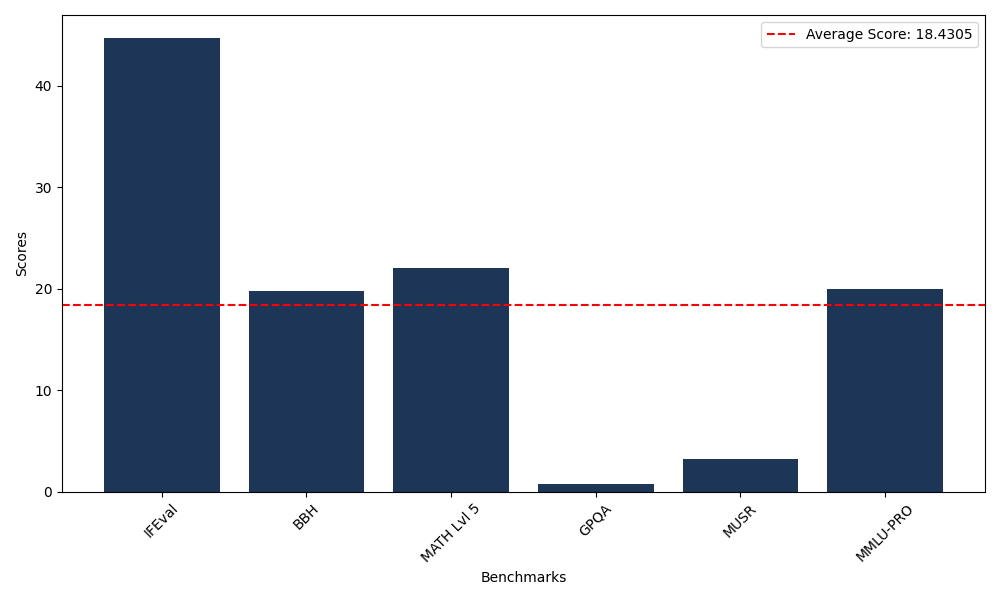

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 44.76 |
| Big Bench Hard (BBH) | 19.81 |
| MATH, Level 5 (MATH Lvl 5) | 22.05 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.78 |
| Multistep Soft Reasoning (MuSR) | 3.19 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 19.99 |
