Qwen2.5 14B Instruct - Model Details

Qwen2.5 14B Instruct is a 14B-parameter large language model developed by Alibaba's Qwen team. It is released under the Apache License 2.0 and is designed to improve factual knowledge and coding capabilities. As an instruction-tuned model, it prioritizes instruction following, which suits it to applications that require precision and technical expertise.

Description of Qwen2.5 14B Instruct

Qwen2.5 is the latest series of Qwen large language models. It improves on knowledge, coding, mathematical reasoning, and instruction following, and it supports long text generation of over 8,000 tokens. The series also improves understanding of structured data and supports more than 29 languages, which makes it usable across a wide range of applications.

Parameters & Context Length of Qwen2.5 14B Instruct

With 14B parameters, Qwen2.5 14B Instruct sits in the mid-scale category and offers balanced performance on tasks of moderate complexity. Its 128K-token context length lets it handle very long texts, though processing inputs near that limit requires significant memory and compute. This combination suits applications that need both depth and extended context.

- Parameter Size: 14B

- Context Length: 128K
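
As a rough illustration of how the context length and the long-generation figure interact, the sketch below computes how many output tokens remain for a given prompt size. The constants are assumptions for illustration: "128K" is read as 131,072 tokens and the generation cap as 8,192 tokens. The function name `output_budget` is hypothetical and not part of any Qwen tooling.

```python
# Assumed limits for illustration only; check the serving configuration you use.
CONTEXT_WINDOW = 128 * 1024  # "128K" interpreted as 131,072 tokens
MAX_GENERATION = 8 * 1024    # generation cap assumed to be 8,192 tokens


def output_budget(prompt_tokens: int,
                  context_window: int = CONTEXT_WINDOW,
                  max_generation: int = MAX_GENERATION) -> int:
    """Return how many tokens can still be generated after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Tokens left in the window once the prompt is accounted for.
    remaining = context_window - prompt_tokens
    # The budget is bounded by both the remaining window and the generation cap.
    return max(0, min(remaining, max_generation))


if __name__ == "__main__":
    for prompt in (1_000, 130_000, 200_000):
        print(f"prompt={prompt:>7} tokens -> output budget {output_budget(prompt)}")
```

A short prompt leaves the full generation cap available, while a prompt close to the window size leaves only the remainder.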

Possible Intended Uses of Qwen2.5 14B Instruct

Qwen2.5 14B Instruct may be useful for tasks that require instruction following, long text generation, or structured data processing. Its support for languages such as Japanese, English, and Russian suggests uses in cross-lingual projects or content creation, and its long context may help in scenarios that involve extended inputs or complex data. Possible uses include drafting detailed documents, analyzing structured datasets, and generating coherent long-form text. These uses remain speculative and require thorough testing to confirm the model's effectiveness.

- instruction following

- long text generation

- structured data processing

Possible Applications of Qwen2.5 14B Instruct

One possible application of Qwen2.5 14B Instruct is detailed instruction following, where it might assist with executing complex tasks. It could also generate long-form text, helping users produce extended content that stays coherent. Its structured data processing capabilities might support organizing or analyzing data-driven information, and its multilingual support could enable cross-lingual projects or content creation in multiple languages. Each of these applications must be thoroughly evaluated and tested before implementation.

- instruction following

- long text generation

- structured data processing

Quantized Versions & Hardware Requirements of Qwen2.5 14B Instruct

With Q4 quantization, Qwen2.5 14B Instruct balances precision against resource use. A GPU with at least 16GB of VRAM is recommended for a mid-scale model of this size; larger models may need more. At least 32GB of system memory and adequate cooling are also recommended. The Q4 version is best suited to applications where resource efficiency and reasonable accuracy are the priorities.

Available versions: fp16, q2, q3, q4, q5, q6, q8
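
To compare how the listed quantization levels affect memory, the sketch below estimates the size of the weights alone for a 14B-parameter model. It assumes nominal bit widths (for example, 4 bits per weight for q4). Real quantized files add overhead for scales and metadata, and inference also needs memory for the KV cache and activations, so treat the results as lower bounds. The function name `weight_memory_gb` is hypothetical.

```python
# Nominal bits per weight for each level (an assumption for illustration;
# actual quantization formats store extra scale and metadata values).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(params_billions: float, quant: str) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    bits = BITS_PER_WEIGHT[quant]
    total_bytes = params_billions * 1e9 * bits / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for quant in BITS_PER_WEIGHT:
        print(f"{quant}: ~{weight_memory_gb(14, quant):.1f} GB of weights")
```

Under these assumptions the q4 weights come to roughly 7 GB and the fp16 weights to roughly 28 GB. A VRAM recommendation above the raw weight size leaves headroom for the runtime overheads that the estimate ignores.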

Conclusion

Qwen2.5 14B Instruct is a large language model with 14B parameters and a 128K-token context length, designed for tasks such as instruction following, long text generation, and structured data processing. It supports more than 29 languages and is released under the Apache License 2.0, which makes it a flexible and accessible tool for diverse applications.

References

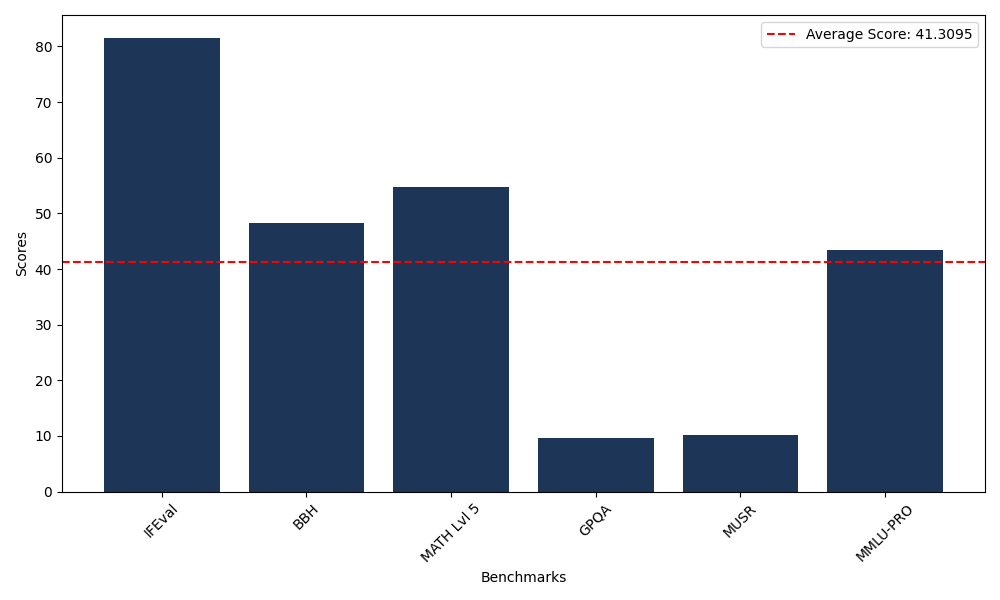

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 81.58 |
| BIG-Bench Hard (BBH) | 48.36 |
| MATH, Level 5 problems (MATH Lvl 5) | 54.76 |
| Graduate-Level Google-Proof Q&A (GPQA) | 9.62 |
| Multistep Soft Reasoning (MuSR) | 10.16 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 43.38 |
