Qwen2.5 3B Instruct - Model Details

Qwen2.5 3B Instruct is a large language model with 3 billion parameters, developed by Alibaba Qwen. It is released under the Qwen Research License Agreement (Qwen-RESEARCH) and is designed for instruction following, with an emphasis on improved factual knowledge and coding capabilities. The model aims to deliver precise, context-aware responses, and its use is governed by the licensing terms set by its maintainer.

Description of Qwen2.5 3B Instruct

Qwen2.5 is the latest series of Qwen large language models, with significant improvements in knowledge, coding, mathematics, instruction following, long text generation (over 8,000 tokens), structured data understanding, and multilingual support. The instruction-tuned 3B model supports a full context length of 32,768 tokens and can generate up to 8,192 tokens, which makes it suitable for complex tasks. It is built on a transformer architecture that uses RoPE, SwiGLU, RMSNorm, and attention QKV bias to improve performance and efficiency.
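To make two of the named components more concrete, here is a minimal pure-Python sketch of RMSNorm and a SwiGLU feed-forward block as they are commonly defined. It is an illustration, not Qwen's implementation: the function names, the list-of-rows matrix layout, the omission of biases, and the epsilon of 1e-6 are assumptions chosen for readability.

```python
import math


def rms_norm(x: list[float], weight: list[float], eps: float = 1e-6) -> list[float]:
    """RMSNorm: divide by the root mean square of x (no mean subtraction), then apply a learned gain.

    eps=1e-6 is an assumed default for this sketch.
    """
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]


def silu(v: float) -> float:
    """SiLU (swish) activation v * sigmoid(v), written to avoid overflow in math.exp."""
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    e = math.exp(v)
    return v * e / (1.0 + e)


def matvec(w: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def swiglu_ffn(x: list[float], w_gate: list[list[float]], w_up: list[list[float]],
               w_down: list[list[float]]) -> list[float]:
    """SwiGLU feed-forward block: down(silu(gate(x)) * up(x)); biases omitted in this sketch."""
    gate = matvec(w_gate, x)
    up = matvec(w_up, x)
    hidden = [silu(g) * u for g, u in zip(gate, up)]  # gated elementwise product
    return matvec(w_down, hidden)


identity = [[1.0, 0.0], [0.0, 1.0]]
print(rms_norm([3.0, 4.0], [1.0, 1.0]))                        # approximately [0.8485, 1.1314]
print(swiglu_ffn([1.0, 0.0], identity, identity, identity))    # approximately [0.7311, 0.0]
```

The toy identity matrices only show the data flow; in a real model the gate, up, and down projections are large learned matrices.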

Parameters & Context Length of Qwen2.5 3B Instruct

Qwen2.5 3B Instruct has 3 billion parameters, which places it in the small-model category: it is fast and resource-efficient, and best suited to simpler tasks. Its 32,768-token context length falls in the long-context range, so it can process extended texts, although long inputs require more compute and memory than short ones. Together, these properties let the model pair a small footprint with the ability to handle lengthy input.

- Parameter Size: 3B

- Context Length: 32k
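Because a prompt and its generated continuation occupy the same context window in a decoder-only model, a caller has to budget tokens. The sketch below assumes exactly that sharing and uses the 32,768-token context and 8,192-token generation limits given for this model; the constant and function names are hypothetical, and real token counts would come from the model's own tokenizer.

```python
MAX_CONTEXT_TOKENS = 32_768     # full context window given for Qwen2.5 3B Instruct
MAX_GENERATION_TOKENS = 8_192   # maximum generation length given for the model


def max_new_tokens(prompt_tokens: int, requested: int = MAX_GENERATION_TOKENS) -> int:
    """Return how many tokens may be generated for a prompt of the given length.

    Assumes (for this sketch) that prompt and output share one context window.
    """
    if prompt_tokens < 0 or requested < 0:
        raise ValueError("token counts must be non-negative")
    remaining = MAX_CONTEXT_TOKENS - prompt_tokens
    if remaining <= 0:
        raise ValueError(f"prompt of {prompt_tokens} tokens leaves no room to generate")
    # The tightest of the three limits wins.
    return min(requested, MAX_GENERATION_TOKENS, remaining)


print(max_new_tokens(1_000))    # 8192: capped by the generation limit
print(max_new_tokens(30_000))   # 2768: capped by the remaining context
```

Short prompts are limited by the generation cap, while prompts near the 32K limit are limited by the space left in the window.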

Possible Intended Uses of Qwen2.5 3B Instruct

Qwen2.5 3B Instruct is a versatile model that could be applied to a range of tasks, although these uses are still being explored. Its instruction-following capabilities may be useful in scenarios that require step-by-step guidance, such as interactive tutorials or task automation. Its long text generation could support extended content such as reports or narratives, though its effectiveness for these purposes needs further testing. Its multilingual support could help with cross-language communication or content localization, since it handles multiple languages, including Japanese, English, Russian, and others. These potential applications highlight the model’s flexibility, but they have yet to be thoroughly validated in real-world settings.

- instruction following

- long text generation

- multilingual support
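Instruction following with a chat model runs through a role-tagged prompt. As a hedged illustration, the sketch below assembles a ChatML-style prompt with `<|im_start|>` and `<|im_end|>` markers, the layout Qwen instruct models are commonly used with. Treat the exact format as an assumption: in practice the tokenizer's bundled chat template (for example through `apply_chat_template` in Hugging Face `transformers`) is the authoritative source, and it may insert a default system message. The function name is hypothetical.

```python
ALLOWED_ROLES = {"system", "user", "assistant"}


def build_chatml_prompt(messages: list[dict[str, str]]) -> str:
    """Format messages in a ChatML-style layout (assumed format) and open an assistant turn."""
    parts = []
    for message in messages:
        role = message["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<|im_start|>{role}\n{message['content']}<|im_end|>\n")
    # Leave an open assistant turn so the model writes the reply next.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = build_chatml_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "List three steps to boil an egg."},
])
print(prompt)
```

Ending the prompt with an open assistant turn is what signals the model to respond rather than continue the user's text.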

Possible Applications of Qwen2.5 3B Instruct

Possible applications of Qwen2.5 3B Instruct include instruction-based task automation, where its ability to follow multi-step instructions could support guided tutorials or workflow management. Its extended context length could support long-form content creation, such as detailed narratives or reports. Its multilingual support could aid cross-cultural collaboration or the adaptation of localized content. Its instruction-following capabilities could also power interactive educational tools that provide tailored learning experiences. These potential uses highlight the model’s adaptability, but each would require thorough evaluation to confirm that the model meets the specific needs.

- instruction-based task automation

- long-form content creation

- multilingual communication

- interactive educational tools

Quantized Versions & Hardware Requirements of Qwen2.5 3B Instruct

For the medium Q4 version of Qwen2.5 3B Instruct, a GPU with at least 12GB of VRAM and 32GB of system memory is recommended for optimal performance, balancing precision and efficiency. This configuration allows the model to run on mid-range hardware, though actual needs vary with the workload (for example, context length and batch size). Q4 quantization reduces memory usage while keeping accuracy reasonable, which makes it a practical choice for users with limited resources.

Available versions: fp16, q2, q3, q4, q5, q6, q8
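A rough lower bound on the memory taken by the weights alone is parameters × bits per weight ÷ 8. The sketch below applies that arithmetic to the stated 3 billion parameters, using nominal bit widths for each listed version as an assumption. Real quantized files also store scaling factors, and inference additionally needs memory for the KV cache and runtime overhead, so actual usage is higher than these figures. The names are illustrative.

```python
PARAMS = 3_000_000_000  # rounded parameter count as stated for Qwen2.5 3B Instruct

# Nominal bits per weight for each listed version (assumption: ignores quantization scales/metadata).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(level: str, params: int = PARAMS) -> float:
    """Lower-bound weight storage in decimal gigabytes (1 GB = 10**9 bytes)."""
    bits = NOMINAL_BITS[level]
    return params * bits / 8 / 1e9


for level in NOMINAL_BITS:
    print(f"{level:>4}: ~{weight_memory_gb(level):.2f} GB of weights")
```

By this estimate, fp16 weights take about 6 GB and q4 weights about 1.5 GB, which shows how much quantization shrinks the model before runtime overhead is added.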

Conclusion

Qwen2.5 3B Instruct is a large language model with 3 billion parameters and a 32,768-token context length, designed for instruction following, with improved factual knowledge and coding capabilities. Built on a transformer architecture that uses techniques such as RoPE and SwiGLU, it is suited to tasks that involve long text generation and multilingual support.

References

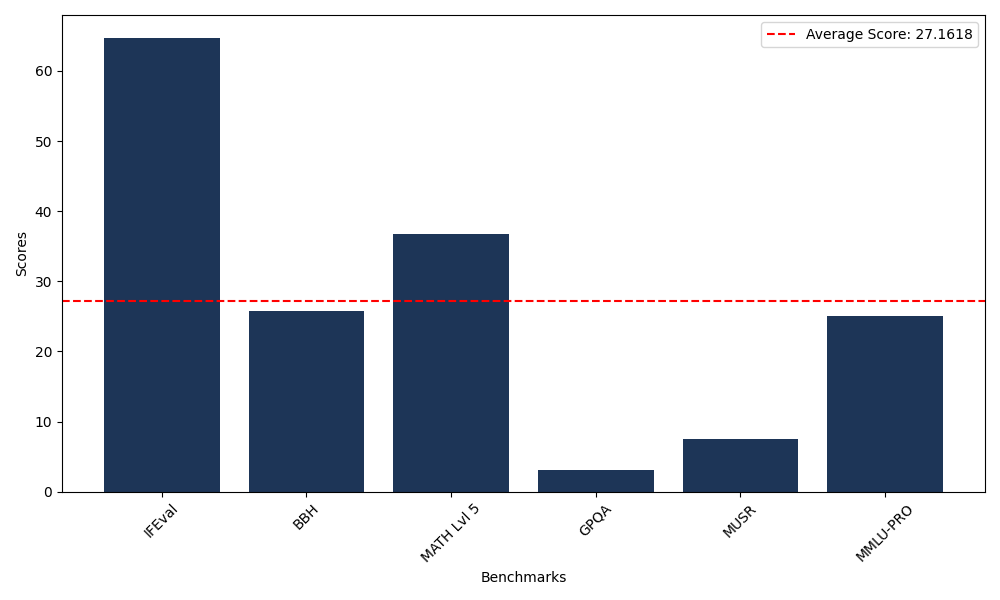

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 64.75 |
| Big Bench Hard (BBH) | 25.80 |
| Mathematical Reasoning Test (MATH Lvl 5) | 36.78 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.02 |
| Multistep Soft Reasoning (MuSR) | 7.57 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 25.05 |
