Qwen2.5 72B Instruct - Model Details

Qwen2.5 72B Instruct is a large language model with 72b parameters, developed by Alibaba's Qwen team. It is released under the Qwen Research License Agreement (Qwen-RESEARCH) and is designed for instruction following. The model emphasizes improved factual knowledge and coding capabilities, which makes it suitable for complex tasks that require precision and technical expertise.

Description of Qwen2.5 72B Instruct

Qwen2.5 is the latest series of Qwen large language models, with significant improvements in knowledge, coding, mathematics, and instruction following. It is better at understanding structured data such as tables and at generating structured output, especially JSON. The model accepts a context of up to 131,072 tokens and can generate up to 8,192 tokens in a single response. It supports over 29 languages, including Chinese, English, French, Spanish, and more. Together, these capabilities suit it to tasks that require precision, technical expertise, and adaptability across languages and data formats.

Parameters & Context Length of Qwen2.5 72B Instruct

Qwen2.5 72B Instruct is a 72b parameter model with a 128k (131,072-token) context length, which places it among very large models with very long contexts. This scale supports complex tasks such as detailed reasoning, long-text generation, and intricate data processing, but it requires substantial computational resources. The 128k context lets the model work over lengthy documents or long multi-step reasoning chains, at the cost of higher memory use and processing time.

- Parameter Size: 72b

- Context Length: 128k
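Because a single request must fit both the prompt and the response inside the context window, it can help to budget tokens up front. The sketch below assumes that prompt and generated tokens share the 131,072-token window (typical for decoder-only models) and that the stated 8,192-token response limit is a hard cap; `max_prompt_tokens` and `fits` are hypothetical helpers, not part of any Qwen tooling.

```python
# Limits quoted on this page; treating them as hard caps is an assumption.
CONTEXT_LENGTH = 131_072   # 128k = 128 * 1024 tokens
MAX_NEW_TOKENS = 8_192     # stated maximum for a single response


def max_prompt_tokens(max_new_tokens: int = MAX_NEW_TOKENS) -> int:
    """Return how many prompt tokens fit if `max_new_tokens` are reserved for output.

    Assumes prompt and output share one context window (decoder-only model).
    """
    if not 0 < max_new_tokens <= MAX_NEW_TOKENS:
        raise ValueError(f"max_new_tokens must be in 1..{MAX_NEW_TOKENS}")
    return CONTEXT_LENGTH - max_new_tokens


def fits(prompt_tokens: int, max_new_tokens: int = MAX_NEW_TOKENS) -> bool:
    """True if the prompt plus the reserved output budget fits in the window."""
    return prompt_tokens <= max_prompt_tokens(max_new_tokens)


if __name__ == "__main__":
    print(max_prompt_tokens())   # 122880
    print(fits(120_000))         # True
    print(fits(125_000))         # False
```

Reserving the full 8,192-token response budget leaves 122,880 tokens for the prompt; a smaller `max_new_tokens` frees more room for input.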

Possible Intended Uses of Qwen2.5 72B Instruct

Qwen2.5 72B Instruct is a versatile large language model with 72b parameters and a 128k context length, designed for text generation, multilingual support, and structured data handling. Its coverage of languages such as Japanese, English, and Russian suggests uses in cross-lingual content creation, translation, and localized information processing. Its handling of structured data could support data extraction, report generation, or system automation, and its text generation could support drafting documents or creative writing. All of these uses should be tested thoroughly against specific requirements before deployment.

- text generation

- multilingual support

- structured data handling
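For data-extraction uses, the model's reply usually has to be parsed before anything downstream can rely on it. A minimal sketch, assuming the model was asked to answer in JSON and may still wrap the value in prose or a code fence; `extract_json` is a hypothetical helper built only on Python's standard `json` module, not part of any Qwen tooling.

```python
import json
from typing import Any


def extract_json(reply: str) -> Any:
    """Parse the first valid JSON object or array found in a model reply.

    Scans for each '{' or '[' and lets json.JSONDecoder.raw_decode decide
    where the value ends, so surrounding prose or fences are ignored.
    Raises ValueError if no valid JSON value is found.
    """
    decoder = json.JSONDecoder()
    for i, ch in enumerate(reply):
        if ch in "{[":
            try:
                value, _end = decoder.raw_decode(reply, i)
                return value
            except json.JSONDecodeError:
                continue  # not valid JSON at this position; keep scanning
    raise ValueError("no JSON value found in reply")


if __name__ == "__main__":
    reply = 'Here is the result:\n```json\n{"language": "French", "tokens": 42}\n```'
    print(extract_json(reply))  # {'language': 'French', 'tokens': 42}
```

Failing loudly with `ValueError` instead of returning a partial result makes it easy to retry the request or flag the output for review.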

Possible Applications of Qwen2.5 72B Instruct

Qwen2.5 72B Instruct's combination of scale (72b parameters), a 128k context length, and multilingual ability points to several potential applications: cross-lingual content creation and localized information processing in languages such as Japanese, English, and Russian; automated report generation and data-driven task automation built on its structured data handling; drafting creative or technical documents; and educational tools or collaborative workflows. Each of these needs careful validation, since outcomes depend on the specific requirements of the task.

- text generation

- multilingual support

- structured data handling

Quantized Versions & Hardware Requirements of Qwen2.5 72B Instruct

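Qwen2.5 72B Instruct is available in several quantized versions. Weight memory scales with parameter count times bits per weight, so a medium Q4 quantization (roughly 4 to 5 bits per weight) of a 72b model needs about 36 to 45GB for the weights alone, well beyond a single 16GB GPU. In practice this calls for one or more GPUs with 48GB or more of combined VRAM, or partial offloading of layers to system memory. Long contexts add further memory through the attention key-value cache. System memory should be at least 32GB, and more if layers are offloaded from the GPU, and adequate cooling is essential. Actual requirements depend on the quantization format, context length, and inference software, so verify them against your workload.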

- fp16, q2, q3, q4, q5, q6, q8
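The memory figures above follow from simple arithmetic, sketched below for each listed format. This is a rough estimate under stated assumptions: it uses nominal bits per weight (real formats such as GGUF K-quants store extra scale data and keep some tensors at higher precision, so real files are larger), treats the model as exactly 72e9 parameters, and assumes an fp16 key-value cache with 80 layers, 8 key-value heads, and a head dimension of 128, values taken from the published model configuration that should be checked against the model's `config.json`. `weight_gb` and `kv_cache_gb` are hypothetical helpers.

```python
# Nominal bits per weight for each listed format (assumption: real quantized
# files carry extra metadata and mixed precision, so they are somewhat larger).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

PARAMS = 72e9  # approximate parameter count; the exact figure differs slightly


def weight_gb(fmt: str, params: float = PARAMS) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * NOMINAL_BITS[fmt] / 8 / 1e9


def kv_cache_gb(tokens: int, layers: int = 80, kv_heads: int = 8,
                head_dim: int = 128, bytes_per_value: int = 2) -> float:
    """Approximate key-value cache size in GB for one sequence of `tokens` tokens.

    The factor 2 covers keys and values. Defaults are assumed architecture
    values for the 72B model with an fp16 cache; verify against config.json.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens / 1e9


if __name__ == "__main__":
    for fmt in NOMINAL_BITS:
        print(f"{fmt:>4}: ~{weight_gb(fmt):.0f} GB of weights")
    print(f"KV cache at 131,072 tokens: ~{kv_cache_gb(131_072):.1f} GB")
```

Under these assumptions, q4 comes out at about 36GB of weights and fp16 at about 144GB, while a full 131,072-token fp16 key-value cache adds roughly 43GB on top, which is why long-context use raises memory needs well beyond the weights alone.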

Conclusion

Qwen2.5 72B Instruct is a large language model with 72b parameters and a 128k context length, designed for text generation, multilingual support, and structured data handling. Its capabilities make it suitable for a range of applications requiring advanced language processing and adaptability across different tasks and languages.

References

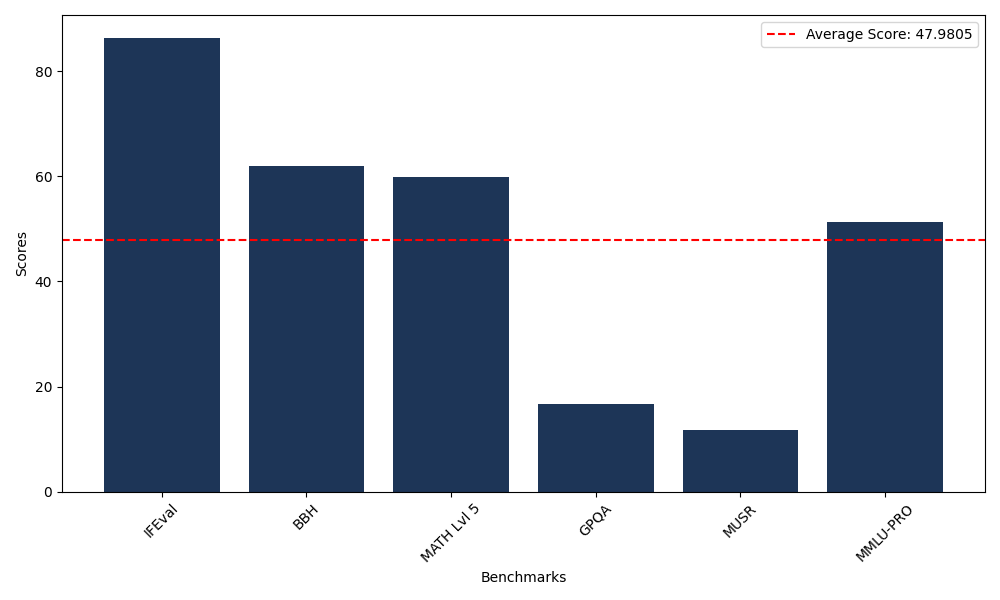

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 86.38 |
| Big-Bench Hard (BBH) | 61.87 |
| MATH Level 5 (competition mathematics, hardest level) | 59.82 |
| Graduate-Level Google-Proof Q&A (GPQA) | 16.67 |
| Multistep Soft Reasoning (MuSR) | 11.74 |
| Massive Multitask Language Understanding (MMLU-PRO) | 51.40 |
