Qwen2.5 7B Instruct - Model Details

Qwen2.5 7B Instruct is a large language model with 7 billion parameters, developed by Alibaba's Qwen team. It is released under the Apache License 2.0, which permits commercial use, modification, and redistribution. As an instruction-tuned model, it is designed to follow instructions, and it was trained for stronger factual knowledge and coding capabilities.
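Because the model is instruction-tuned, prompts are normally wrapped in a chat format rather than sent as raw text. The sketch below renders a conversation in the ChatML layout (`<|im_start|>role ... <|im_end|>`) used by Qwen2.5 instruct models. It is a hand-written approximation that assumes the default system prompt shown; `render_chatml` is a hypothetical helper, and the chat template bundled with the tokenizer (for example through `tokenizer.apply_chat_template` in Hugging Face transformers) remains the authoritative version.

```python
# Minimal sketch of the ChatML prompt layout used by Qwen2.5 instruct models.
# Assumption: this hand-written renderer approximates the tokenizer's bundled
# chat template; prefer tokenizer.apply_chat_template in real code.

DEFAULT_SYSTEM = "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."


def render_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role": ..., "content": ...} dicts as a ChatML string."""
    if not messages or messages[0]["role"] != "system":
        # Prepend a default system turn when the caller did not supply one.
        messages = [{"role": "system", "content": DEFAULT_SYSTEM}] + list(messages)
    parts = []
    for msg in messages:
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Leave an assistant turn open so the model continues with its reply.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = render_chatml(
    [{"role": "user", "content": "Give me a short introduction to large language models."}]
)
print(prompt)
```

Generation then continues from the open assistant turn and ends when the model emits its `<|im_end|>` token.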

Description of Qwen2.5 7B Instruct

Qwen2.5 is the latest series of Qwen large language models, with significant improvements in knowledge, coding, mathematics, instruction following, and long text generation (over 8,192 tokens). It is particularly strong at understanding structured data such as tables and at generating structured outputs, especially JSON. The model supports input and output in over 29 languages and offers an extended context length of up to 131,072 tokens, making it suitable for complex and lengthy tasks.

Parameters & Context Length of Qwen2.5 7B Instruct

With 7 billion parameters, Qwen2.5 7B Instruct falls in the small-to-mid-scale range: it needs less memory and runs faster than larger models, which suits latency-sensitive or resource-constrained deployments. Its context window of 128K tokens (131,072) is in the very long category, so it can take extensive documents or long conversations in a single prompt. The combination balances efficiency with long-input capability, although memory use grows with the amount of context actually used.

- Name: Qwen2.5 7B Instruct

- Parameter Size: 7B

- Context Length: 128K

- Implications: Efficient for simpler tasks; can handle very long texts, though long contexts raise memory and compute demands.
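Much of the extra cost of long inputs comes from the key/value (KV) cache, which grows linearly with the number of tokens in context. A back-of-the-envelope estimate follows, assuming the architecture values below (28 layers, 4 key/value heads under grouped-query attention, head dimension 128, fp16 cache); these should be checked against the model's `config.json` before relying on the numbers.

```python
# Rough KV-cache size estimate for long contexts.
# Assumed architecture values; verify them against the model's config.json.
NUM_LAYERS = 28      # transformer layers
NUM_KV_HEADS = 4     # key/value heads (grouped-query attention)
HEAD_DIM = 128       # hidden size 3584 / 28 query heads


def kv_cache_bytes(num_tokens, bytes_per_value=2):
    """Bytes needed to cache keys and values for num_tokens tokens (fp16 by default)."""
    # Factor of 2: one key tensor and one value tensor per layer.
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value
    return num_tokens * per_token


for tokens in (8_192, 32_768, 131_072):
    gib = kv_cache_bytes(tokens) / 2**30
    print(f"{tokens:>7} tokens -> {gib:.2f} GiB")
```

Under these assumptions the cache needs about 1.75 GiB at 32,768 tokens and 7 GiB at the full 131,072 tokens, in addition to the model weights.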

Possible Intended Uses of Qwen2.5 7B Instruct

Qwen2.5 7B Instruct is designed for generating long texts, understanding structured data, and supporting multilingual tasks. Possible uses include drafting detailed reports, analyzing structured datasets, translating documents, summarizing lengthy texts, and assisting with data-driven tasks that require contextual awareness. It might also serve as a component in cross-lingual communication tools or in pipelines that process complex information in diverse formats. Any of these uses would require thorough testing against the specific requirements. Supported languages include Japanese, English, Russian, Italian, French, Chinese, Korean, Portuguese, Thai, Arabic, Vietnamese, German, and Spanish, among others.

- generating long texts

- understanding structured data

- multilingual support
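One common pattern for the structured-data use case, sketched here under assumptions, is to serialize a table into the prompt and ask for a JSON answer that the caller then parses. `rows_to_markdown_table` and `parse_json_reply` are hypothetical helpers, and the reply string is invented for illustration; it is not actual model output.

```python
import json
import re


def rows_to_markdown_table(header, rows):
    """Serialize tabular data as a Markdown table for inclusion in a prompt."""
    lines = ["| " + " | ".join(header) + " |", "|" + "---|" * len(header)]
    for row in rows:
        lines.append("| " + " | ".join(str(cell) for cell in row) + " |")
    return "\n".join(lines)


def parse_json_reply(reply):
    """Extract a JSON object from a reply that may contain surrounding prose."""
    # Greedy match from the first "{" to the last "}"; assumes one object per reply.
    match = re.search(r"\{.*\}", reply, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    return json.loads(match.group(0))


table = rows_to_markdown_table(["region", "sales"], [["north", 120], ["south", 95]])
prompt = (
    "Here is a table:\n" + table
    + '\nReply with JSON of the form {"top_region": "<name>"}.'
)
# Hypothetical reply text standing in for real model output.
reply = 'Sure.\n{"top_region": "north"}'
print(parse_json_reply(reply))
```

Validating the parsed object (required keys, value types) before using it is a sensible extra step, since a model can still return malformed or incomplete JSON.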

Possible Applications of Qwen2.5 7B Instruct

Possible applications of Qwen2.5 7B Instruct include generating detailed long-form content, analyzing structured datasets, and supporting multilingual workflows. Examples might be writing comprehensive documentation, extracting insights from tabular data, automating content creation for non-sensitive topics, assisting with data interpretation in research, and enabling cross-lingual collaboration. Educational tools, creative writing, and processing extensive text are further candidates. Each application would still need careful evaluation to confirm the model suits the specific task.

- generating long-form content

- analyzing structured datasets

- multilingual workflows

- cross-lingual collaboration
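For long-document applications such as summarizing or processing extensive text, a caller usually checks whether the input fits the context window before sending it, and splits it otherwise. A minimal sketch, assuming that a word count multiplied by a rough `tokens_per_word` factor approximates the token count (a real pipeline would count tokens with the model's tokenizer); the function names and the 8,192-token output reserve are illustrative choices. `context_tokens` is a parameter because the usable window depends on how the model is served; the upstream model card reportedly configures 32,768 tokens by default and relies on YaRN rope scaling beyond that.

```python
def split_into_chunks(text, max_words, overlap_words=0):
    """Split text into windows of at most max_words words, overlapping by overlap_words."""
    if max_words <= 0 or not 0 <= overlap_words < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap_words < max_words")
    words = text.split()
    step = max_words - overlap_words
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def plan_inputs(text, context_tokens=131_072, reserved_tokens=8_192, tokens_per_word=1.5):
    """Return [text] if it likely fits the context window, else overlapping chunks.

    tokens_per_word is a rough, assumed conversion factor; real token counts
    depend on the tokenizer and on the language of the text.
    """
    budget_words = int((context_tokens - reserved_tokens) / tokens_per_word)
    if len(text.split()) <= budget_words:
        return [text]
    # Overlap chunks by about 5% so text cut at a boundary appears in both chunks.
    return split_into_chunks(text, budget_words, overlap_words=budget_words // 20)


doc = " ".join(f"w{i}" for i in range(10))
print(plan_inputs(doc))  # short text: returned as a single piece
print(plan_inputs(doc, context_tokens=12, reserved_tokens=6))  # tiny budget forces chunking
```

Each chunk can then be summarized separately and the partial summaries combined in a final request, a common map-then-reduce approach for inputs that exceed the window.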

Quantized Versions & Hardware Requirements of Qwen2.5 7B Instruct

The medium Q4 quantization of Qwen2.5 7B Instruct is listed as requiring a GPU with at least 16GB of VRAM for efficient operation, which puts it within reach of mid-range hardware. This version suits tasks that need a balance between speed and output quality, such as text generation or data processing. The system should also have at least 32GB of RAM and adequate cooling for sustained workloads. Before deploying, verify that the GPU is compatible with your inference software and that the power supply can support it.

Available versions: fp16, q2, q3, q4, q5, q6, q8
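The quantization levels above trade accuracy for memory. A rough way to compare them is parameters times bits per weight. This sketch assumes about 7.61 billion parameters (the count given in the upstream Qwen2.5-7B model card) and nominal bit widths; it ignores the per-block scale data that real quantized formats add, as well as runtime overhead.

```python
# Back-of-the-envelope weight memory for each quantization level.
# Assumptions: ~7.61e9 parameters and nominal bits per weight. Real quantized
# files are somewhat larger because they also store scale factors.
NUM_PARAMS = 7.61e9

NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gib(bits_per_weight, num_params=NUM_PARAMS):
    """Approximate weight storage in GiB for a given number of bits per weight."""
    return num_params * bits_per_weight / 8 / 2**30


for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_gib(bits):.1f} GiB")
```

Under these assumptions the q4 weights take roughly 3.5 GiB and fp16 roughly 14 GiB. The KV cache for the context length in use and the inference framework's own overhead come on top, which is one reason practical VRAM recommendations sit above the raw weight size.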

Conclusion

Qwen2.5 7B Instruct is a 7-billion-parameter language model with a 128K-token (131,072) context window, designed for generating long texts, understanding structured data, and handling multilingual tasks in over 29 languages. It is released under the Apache License 2.0, which permits broad use, although each application still requires careful evaluation.

References

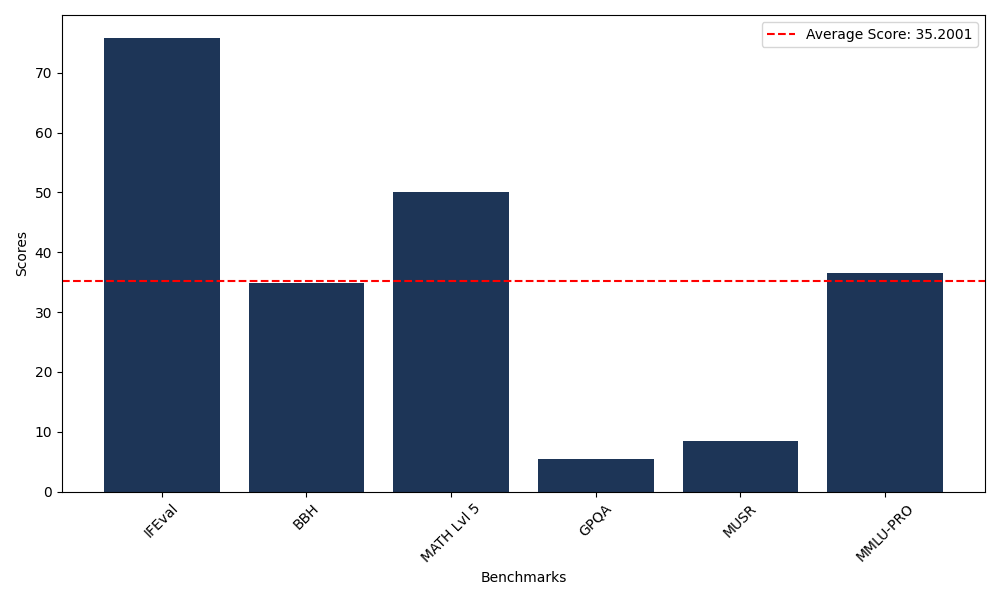

Benchmarks

| Benchmark | Score |
|---|---|
| IFEval (Instruction-Following Evaluation) | 75.85 |
| BBH (BIG-Bench Hard) | 34.89 |
| MATH Lvl 5 (MATH competition problems, level 5) | 50.00 |
| GPQA (Graduate-Level Google-Proof Q&A) | 5.48 |
| MuSR (Multistep Soft Reasoning) | 8.45 |
| MMLU-Pro (Massive Multitask Language Understanding, Pro) | 36.52 |
