Qwen2.5 Coder 0.5B Instruct - Model Details

Qwen2.5 Coder 0.5B Instruct is a language model developed by Alibaba Qwen. It has 0.5B parameters, which keeps inference fast and resource use low. The model is released under the Apache License 2.0, which permits commercial use and modification. It is tuned for instruction following and targets code generation, code reasoning, and code repair across multiple programming languages.

Description of Qwen2.5 Coder 0.5B Instruct

Qwen2.5 is the latest series of Qwen large language models, designed to improve performance in knowledge, coding, mathematics, instruction following, and long text generation (over 8,192 tokens). It supports 29+ languages and has a 32,768-token context length, of which up to 8,192 tokens can be generated. This model is instruction-tuned, has 0.49B parameters, and includes improvements for tasks such as structured data understanding and JSON generation.

Parameters & Context Length of Qwen2.5 Coder 0.5B Instruct

Qwen2.5 Coder 0.5B Instruct is a 0.5B-parameter model, which places it in the small model category: it runs quickly and needs little memory. Its 32k-token context length places it in the long context range, so it can take in extended documents or larger pieces of a codebase, although filling the full window uses more memory and compute than short prompts do. This combination suits tasks that need long inputs on modest hardware.

- Parameter Size: 0.5B

- Context Length: 32k tokens
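The figures above imply a simple token budget. The sketch below is only an illustration: it assumes that prompt tokens and generated tokens share the same 32,768-token window, and the function names `prompt_budget` and `fits_in_context` are hypothetical helpers, not part of any Qwen tooling.

```python
CONTEXT_LENGTH = 32_768   # total context window stated above, in tokens
MAX_NEW_TOKENS = 8_192    # generation limit stated in the description


def prompt_budget(reserved_for_output: int = MAX_NEW_TOKENS) -> int:
    """Return how many prompt tokens fit once space for the reply is reserved."""
    if not 0 <= reserved_for_output <= CONTEXT_LENGTH:
        raise ValueError("reserved_for_output must be between 0 and CONTEXT_LENGTH")
    return CONTEXT_LENGTH - reserved_for_output


def fits_in_context(prompt_tokens: int,
                    reserved_for_output: int = MAX_NEW_TOKENS) -> bool:
    """Check whether a prompt of this size still leaves room for the reply."""
    return 0 <= prompt_tokens <= prompt_budget(reserved_for_output)


if __name__ == "__main__":
    print("Prompt budget with full-length output reserved:", prompt_budget())
    print("20,000-token prompt fits:", fits_in_context(20_000))
    print("30,000-token prompt fits:", fits_in_context(30_000))
```

Reserving fewer output tokens (for example, 1,024 for a short completion) leaves correspondingly more room for input.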

Possible Intended Uses of Qwen2.5 Coder 0.5B Instruct

Qwen2.5 Coder 0.5B Instruct is designed for instruction following and role-play implementation, which suggests uses in structured interactions or simulated dialogues. Its ability to generate long-form text and structured output such as JSON may suit data formatting, documentation, or content creation. Its support for 29+ languages may also help with cross-lingual communication or translation. All of these uses need thorough testing against the specific requirements before deployment.

- instruction following and role-play implementation

- generating long-form texts and structured outputs (e.g., JSON)

- multilingual communication and translation tasks
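Instruction following and role-play are usually driven by a list of role-tagged messages, with the persona set in a system message. The sketch below shows one way to flatten such a list into a single prompt string. It assumes the ChatML-style layout (`<|im_start|>` and `<|im_end|>` markers) generally associated with Qwen chat models; the chat template shipped with the model's tokenizer remains the source of truth, and `build_chatml_prompt` is a hypothetical helper.

```python
def build_chatml_prompt(messages: list[dict[str, str]]) -> str:
    """Flatten role-tagged messages into a ChatML-style prompt (assumed layout)."""
    parts = []
    for message in messages:
        # Each turn is wrapped in start/end markers with the role on the first line.
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    # Leave an open assistant turn so the model continues from here.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    conversation = [
        {"role": "system", "content": "You are a terse code reviewer."},
        {"role": "user", "content": "Review: def add(a, b): return a - b"},
    ]
    print(build_chatml_prompt(conversation))
```

In practice a runtime or tokenizer library normally applies the template, so hand-built strings like this are mainly useful for debugging what the model actually sees.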

Possible Applications of Qwen2.5 Coder 0.5B Instruct

Qwen2.5 Coder 0.5B Instruct could be used to generate structured data formats such as JSON for content automation or data management. Its multilingual capabilities may support cross-lingual tasks, such as translating or adapting materials across 29+ languages. Its focus on instruction following and role-play implementation could suit interactive dialogue systems or educational tools, and its handling of long-form text might help with detailed documentation or collaborative writing. Each of these applications requires thorough evaluation to confirm that it meets the specific need.

- generating structured data formats (e.g., JSON)

- cross-lingual tasks across 29+ languages

- interactive dialogue systems or role-play scenarios

- long-form text creation for documentation or collaboration
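When a small model is asked for JSON, the reply should be validated before anything downstream relies on it. The following sketch assumes the reply may arrive wrapped in a Markdown code fence and that the caller knows which top-level keys it expects; `parse_json_reply` and the example keys are hypothetical.

```python
import json

FENCE = "`" * 3  # Markdown code-fence marker, built this way to keep the example readable


def parse_json_reply(reply: str, required_keys: set[str]) -> dict:
    """Parse a model reply as a JSON object and check that required keys exist."""
    text = reply.strip()
    if text.startswith(FENCE):
        lines = text.splitlines()[1:]          # drop the opening fence line
        if lines and lines[-1].strip() == FENCE:
            lines = lines[:-1]                 # drop the closing fence line
        text = "\n".join(lines)
    data = json.loads(text)                    # raises json.JSONDecodeError if invalid
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


if __name__ == "__main__":
    reply = FENCE + 'json\n{"language": "python", "summary": "adds two numbers"}\n' + FENCE
    print(parse_json_reply(reply, {"language", "summary"}))
```

A failed parse is a reasonable trigger for retrying the request or falling back to a stricter prompt.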

Quantized Versions & Hardware Requirements of Qwen2.5 Coder 0.5B Instruct

The q4 version of Qwen2.5 Coder 0.5B Instruct offers a middle ground between precision and performance. The listed guidance is a GPU with at least 8GB of VRAM, which leaves generous headroom for a model of this size, so it suits systems with moderate hardware. Higher-precision versions such as q8 or fp16 need more memory, while q4 is a practical default when a small loss of precision is acceptable.

Available versions: fp16, q2, q3, q4, q5, q6, q8
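A rough way to compare these versions is to estimate the size of the weights alone as parameters × bits per weight ÷ 8. The sketch below uses the 0.49B parameter count from the description and assumes nominal bit widths for each version name. Real quantized files store extra scaling data, and inference also needs memory for the KV cache and activations, so actual usage will be higher than these figures.

```python
PARAMETERS = 0.49e9  # parameter count quoted in the description

# Nominal bits per weight for each listed version (an assumption; real
# quantization formats add per-block metadata on top of this).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_size_gb(parameters: float, bits_per_weight: int) -> float:
    """Estimate weight storage in gigabytes (10^9 bytes), weights only."""
    return parameters * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: ~{weight_size_gb(PARAMETERS, bits):.2f} GB of weights")
```

Under these assumptions the fp16 weights come to roughly 1 GB and the q4 weights to roughly a quarter of that.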

Conclusion

Qwen2.5 Coder 0.5B Instruct is a 0.5B-parameter model tuned for instruction following and code generation, with a 32k-token context length for handling long texts and structured data. It is released under the Apache License 2.0 and trades peak capability for low resource requirements.

References

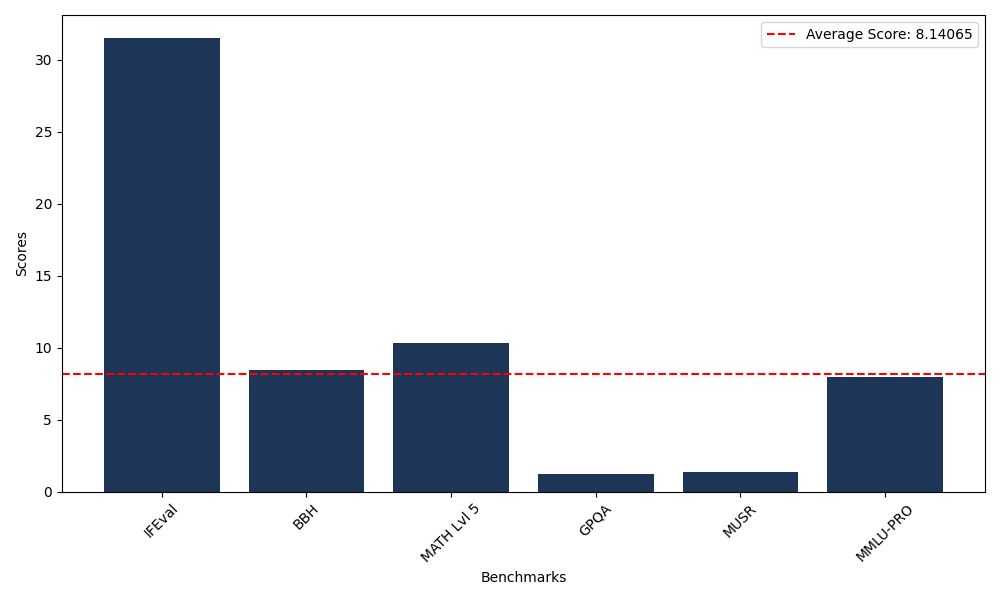

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 30.71 |
| BIG-Bench Hard (BBH) | 8.43 |
| Mathematical Reasoning Test (MATH Lvl 5) | 0.00 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.01 |
| Multistep Soft Reasoning (MuSR) | 0.94 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 7.75 |
| Instruction Following Evaluation (IFEval) | 31.53 |
| BIG-Bench Hard (BBH) | 8.17 |
| Mathematical Reasoning Test (MATH Lvl 5) | 10.35 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.23 |
| Multistep Soft Reasoning (MuSR) | 1.37 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 8.00 |
