Qwen2.5 Coder 14B Base - Model Details

Qwen2.5 Coder 14B Base is a 14B-parameter large language model developed by Alibaba's Qwen team and released under the Apache License 2.0. It targets code generation, code reasoning, and code repair across multiple programming languages, which makes it useful to both developers and researchers.

Description of Qwen2.5 Coder 14B Base

Qwen2.5-Coder is the latest series of code-specific Qwen large language models, designed to improve code generation, code reasoning, and code fixing. It was trained on 5.5 trillion tokens of source code, text-code grounding data, and synthetic data, and it supports contexts of up to 128K (131,072) tokens. The 14B version has 14.7B parameters (13.1B non-embedding), 48 layers, and grouped-query attention with 40 query heads and 8 key-value heads. The series is intended as a foundation for real-world applications such as code agents.
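To make the architecture figures concrete, the sketch below estimates the key-value cache size at full context. The layer count, KV head count, and context length come from the description above; the head dimension of 128 and the 2-byte (fp16) cache entries are assumptions that this page does not state, so treat the results as rough estimates.

```python
# Figures quoted in the description above.
NUM_LAYERS = 48
NUM_KV_HEADS = 8
NUM_Q_HEADS = 40
MAX_CONTEXT = 131_072

# Assumptions (not stated on this page): per-head dimension and fp16 cache entries.
HEAD_DIM = 128
BYTES_PER_VALUE = 2


def kv_cache_bytes(num_tokens: int, kv_heads: int = NUM_KV_HEADS) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens."""
    # Factor 2 accounts for storing both a key and a value per head per layer.
    per_token = 2 * NUM_LAYERS * kv_heads * HEAD_DIM * BYTES_PER_VALUE
    return per_token * num_tokens


if __name__ == "__main__":
    gqa = kv_cache_bytes(MAX_CONTEXT)
    # Hypothetical comparison: one KV head per query head (no grouping).
    mha = kv_cache_bytes(MAX_CONTEXT, kv_heads=NUM_Q_HEADS)
    print(f"8 KV heads:  {gqa / 2**30:.1f} GiB")
    print(f"40 KV heads: {mha / 2**30:.1f} GiB")
```

Under these assumptions, sharing 8 key-value heads across 40 query heads makes the cache five times smaller than it would be with one KV head per query head, which matters most at long context lengths.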

Parameters & Context Length of Qwen2.5 Coder 14B Base

With 14B parameters, Qwen2.5 Coder 14B Base sits in the mid-scale range of open-source LLMs, balancing performance against resource cost for tasks of moderate complexity. Its 128K context length lets it process long inputs, which suits analysis of large codebases and long documents, although long contexts increase memory and compute requirements.

- Parameter Size: 14B

- Context Length: 128K

Possible Intended Uses of Qwen2.5 Coder 14B Base

Qwen2.5 Coder 14B Base is designed for code generation, code reasoning, and code fixing, with potential applications in software development, algorithm design, and code optimization. Possible uses include helping developers write efficient code, analyzing complex codebases for logical errors, automating repetitive coding tasks, and supporting educational tools or collaborative coding environments. These uses are still under exploration, and their effectiveness may vary by scenario. The model's support for multiple programming languages and long contexts could enable new approaches to problem-solving, but further investigation is needed to confirm that it meets practical needs within its technical limitations.

- code generation

- code reasoning

- code fixing
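As an illustration of how a base (non-instruct) model is typically prompted for code fixing or completion, the sketch below builds a fill-in-the-middle prompt. It assumes the `<|fim_prefix|>`, `<|fim_suffix|>`, and `<|fim_middle|>` special tokens described in the upstream Qwen2.5-Coder documentation; these tokens are not mentioned on this page, and `build_fim_prompt` is a hypothetical helper name.

```python
# Assumed special tokens (taken from upstream Qwen2.5-Coder docs, not from this page).
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Return a prompt asking the model to generate the code between prefix and suffix."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


if __name__ == "__main__":
    before = "def mean(values):\n    total = "
    after = "\n    return total / len(values)\n"
    # The model would be expected to continue with the missing middle, e.g. a sum expression.
    print(build_fim_prompt(before, after))
```

The resulting string would be passed to whatever inference runtime hosts the model; the generated continuation is the code that belongs between the two fragments.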

Possible Applications of Qwen2.5 Coder 14B Base

Possible applications of Qwen2.5 Coder 14B Base center on code generation, code reasoning, and code fixing, which could help developers build efficient solutions or debug complex systems. Examples include automating repetitive coding tasks, improving code quality through logical analysis, building software maintenance tools, and powering educational platforms or collaborative environments where code is refined iteratively. Its long-context and multi-language support may help here, but each application must be thoroughly evaluated and tested before use.

- code generation

- code reasoning

- code fixing

Quantized Versions & Hardware Requirements of Qwen2.5 Coder 14B Base

The q4 quantization of Qwen2.5 Coder 14B Base, a medium-precision option that balances accuracy against memory use, needs a GPU with at least 20GB of VRAM (e.g., RTX 3090) and 32GB of system memory to run efficiently. These figures are estimates; actual requirements vary with workload, context length, and implementation, so users should verify them against their own hardware.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
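A back-of-the-envelope estimate of weight memory for each listed version can be sketched as follows. It uses the 14.7B total parameter count quoted earlier and nominal bit widths (16 for fp16, 4 for q4, and so on). This is an assumption: real quantized files usually store extra scale data and use slightly more bits per weight, and inference also needs memory for activations and the KV cache.

```python
TOTAL_PARAMS = 14.7e9  # total parameter count quoted in the description

# Nominal bits per weight (assumption; actual quantization formats add overhead).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(bits_per_weight: float, params: float = TOTAL_PARAMS) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: ~{weight_gb(bits):.1f} GB of weights")
```

By this estimate the q4 weights alone come to roughly 7 to 8 GB, so the 20GB VRAM figure above leaves headroom for the KV cache and runtime overhead, while fp16 weights would not fit on a single 24GB card.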

Conclusion

Qwen2.5 Coder 14B Base is a large language model with 14B parameters designed for advanced code generation, reasoning, and repair across multiple languages, released under the Apache License 2.0. It supports 128K token contexts, making it suitable for complex coding tasks and real-world applications.

References

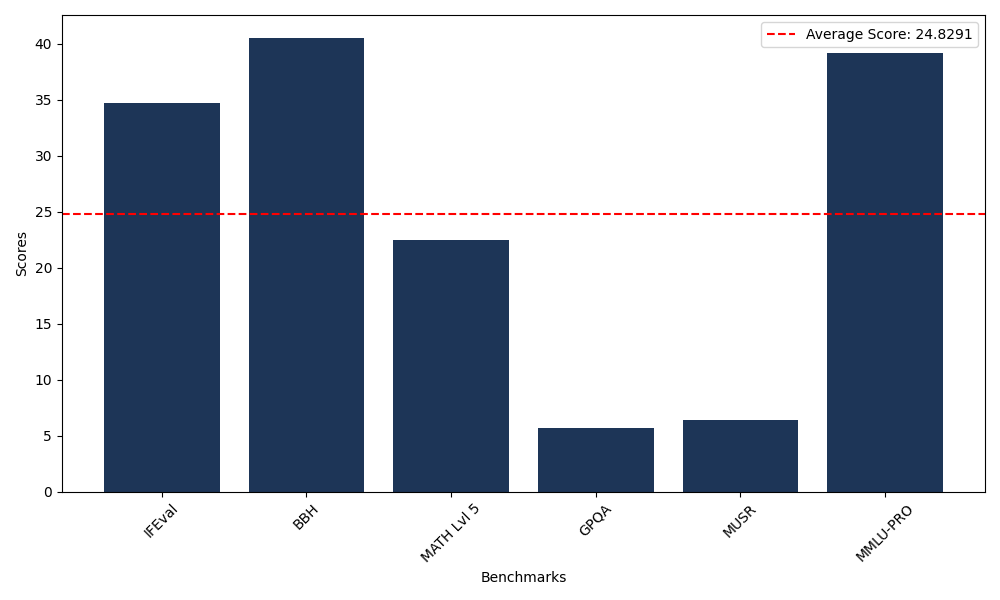

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 34.73 |
| BIG-Bench Hard (BBH) | 40.52 |
| MATH, Level 5 problems (MATH Lvl 5) | 22.51 |
| Graduate-Level Google-Proof Q&A (GPQA) | 5.70 |
| Multistep Soft Reasoning (MuSR) | 6.39 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 39.13 |