Qwen2.5 Coder 32B Base - Model Details

Qwen2.5 Coder 32B Base, developed by Alibaba Qwen, is a large language model with 32 billion parameters, released under the Apache License 2.0. It focuses on advanced code generation, reasoning, and repair across multiple languages.

Description of Qwen2.5 Coder 32B Base

Qwen2.5-Coder is the latest series of code-specific Qwen large language models, with improvements in code generation, code reasoning, and code fixing. It was trained on 5.5 trillion tokens, including source code, text-code grounding data, and synthetic data. It supports a context length of 131,072 tokens and is designed for real-world applications such as code agents. It also retains strong mathematics and general capabilities, which makes it effective for complex coding tasks across multiple programming languages.

Parameters & Context Length of Qwen2.5 Coder 32B Base

Qwen2.5 Coder 32B Base has 32 billion parameters. That places it in the large-scale category suited to complex tasks such as code generation and reasoning, and it also means the model needs substantial compute and memory. Its 131,072-token (128K) context window lets it process very long inputs, such as large codebases or lengthy documents. Memory use and processing time grow as the context fills. The design trades resource intensity for advanced capability.

- Name: Qwen2.5 Coder 32B Base

- Parameter Size: 32B

- Context Length: 128K (131,072 tokens)

- Implications: Powerful for complex tasks; both the parameter count and long contexts are resource-intensive.
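The memory cost of long contexts can be estimated from the key/value (KV) cache, which grows linearly with the number of tokens. The sketch below is a rough estimate, not a measured figure. It assumes architecture values that do not appear on this page: 64 layers, 8 key/value heads under grouped-query attention, and a head dimension of 128, as reported for Qwen2.5 32B models. It also assumes an fp16 cache.

```python
# Rough KV-cache size estimate for Qwen2.5 Coder 32B at a given context length.
# ASSUMED architecture values (reported for Qwen2.5 32B models, not on this page):
# 64 transformer layers, 8 key/value heads (grouped-query attention), head_dim 128.
NUM_LAYERS = 64
NUM_KV_HEADS = 8
HEAD_DIM = 128


def kv_cache_bytes(context_tokens: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `context_tokens` tokens.

    The leading factor 2 counts one key tensor and one value tensor per layer;
    bytes_per_value=2 corresponds to an fp16/bf16 cache.
    """
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value
    return per_token * context_tokens


if __name__ == "__main__":
    for tokens in (4_096, 32_768, 131_072):
        gib = kv_cache_bytes(tokens) / 2**30
        print(f"{tokens:>7} tokens -> {gib:5.1f} GiB of KV cache (fp16)")
```

Under these assumptions, each token costs 256 KiB of cache. A full 131,072-token window therefore needs about 32 GiB for the KV cache alone, before any model weights are loaded. Runtimes that quantize the cache to 8 bits (`bytes_per_value=1`) roughly halve this.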

Possible Intended Uses of Qwen2.5 Coder 32B Base

Qwen2.5 Coder 32B Base is designed for code agents, code generation and reasoning, and handling long texts, backed by 32 billion parameters and a 131,072-token context window. Possible uses include helping developers write and debug code, generating complex code snippets, and analyzing extensive documentation. It may also support tasks that require deep logical reasoning or the processing of lengthy text. These uses are possibilities rather than verified results, and each should be tested before the model is relied on in practice.

- Intended Uses: code agents, code generation and reasoning, handling long texts
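This is a base model, not an instruction-tuned one, so code generation is usually driven by plain completion prompts rather than chat messages. A common pattern for editing or repairing code is fill-in-the-middle (FIM). The model sees the code before and after a gap and generates the missing part. The sketch below only builds the prompt string. The special tokens are assumed from the FIM format in the Qwen2.5-Coder README and should be checked against the tokenizer in use. `build_fim_prompt` is a hypothetical helper name.

```python
# Build a fill-in-the-middle (FIM) prompt for a Qwen2.5-Coder base model.
# ASSUMPTION: these special tokens follow the FIM format documented in the
# Qwen2.5-Coder README; verify them against the tokenizer you actually load.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Ask the model to generate the code that belongs between prefix and suffix."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


# Code before and after the gap; the model should fill in the missing line.
prefix = "def mean(xs):\n    "
suffix = "\n    return total / len(xs)\n"
prompt = build_fim_prompt(prefix, suffix)
```

The resulting string is sent to a text-completion endpoint. Whatever the model generates after `<|fim_middle|>` is the candidate middle section. In this example, that would be a line that defines `total`.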

Possible Applications of Qwen2.5 Coder 32B Base

With 32 billion parameters and a 131,072-token context window, Qwen2.5 Coder 32B Base is a candidate for tasks that combine code generation, reasoning, and long-text handling. Possible applications include:

- Code agents that help developers create and optimize code.
- Generating complex snippets for specific tasks.
- Analyzing extensive documentation.
- Logical problem solving.

Its multi-language code support and extended context may also suit large, multi-file projects. Each application should be evaluated rigorously against its specific requirements before deployment.

- Possible applications: code agents, code generation and reasoning, handling long texts
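For code-agent or multi-file work, the long context window makes it possible to place several files from one repository in a single prompt. This sketch assumes the repository-level format described in the Qwen2.5-Coder README: a `<|repo_name|>` header followed by files separated by `<|file_sep|>`. The exact whitespace should be checked against that README. `build_repo_prompt`, `count_tokens`, and `reserve_for_output` are hypothetical names. The caller supplies the token counter, for example one based on the model's own tokenizer.

```python
# Pack several source files into one repository-level completion prompt.
# ASSUMPTION: <|repo_name|> and <|file_sep|> follow the repository-level format
# shown in the Qwen2.5-Coder README; check exact whitespace against that README.
from typing import Callable, Sequence, Tuple

CONTEXT_LIMIT = 131_072  # tokens, per the model description


def build_repo_prompt(
    repo_name: str,
    files: Sequence[Tuple[str, str]],
    count_tokens: Callable[[str], int],
    reserve_for_output: int = 2_048,
) -> str:
    """Concatenate (path, content) pairs; the last file is the one to continue.

    count_tokens is supplied by the caller (hypothetically, something like
    lambda s: len(tokenizer.encode(s))) so the prompt can be checked against
    the context window while leaving room for generated tokens.
    """
    parts = [f"<|repo_name|>{repo_name}"]
    for path, content in files:
        parts.append(f"<|file_sep|>{path}\n{content}")
    prompt = "\n".join(parts)
    if count_tokens(prompt) + reserve_for_output > CONTEXT_LIMIT:
        raise ValueError("prompt plus output budget exceeds the context window")
    return prompt
```

Reserving part of the window for output matters because prompt tokens and generated tokens share the same 131,072-token budget.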

Quantized Versions & Hardware Requirements of Qwen2.5 Coder 32B Base

The medium q4 version of Qwen2.5 Coder 32B Base requires a GPU with at least 24 GB of VRAM and 32 GB of system memory. That puts it within reach of a single high-end consumer GPU while balancing precision and performance. Quantization reduces computational demands while keeping accuracy reasonable, although actual performance varies with the workload. The model has 32 billion parameters and a 131,072-token context window, so even quantized versions require careful resource planning, especially when long contexts are used.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
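The q4 hardware figure above can be sanity-checked with simple arithmetic: weight memory is roughly parameters × bits per weight ÷ 8 bytes. The sketch assumes about 32.5 billion parameters, the published count behind the rounded "32B", and nominal bit widths. Real quantization formats store additional scaling data, so actual files are somewhat larger. `fits` and its `overhead_gib` allowance are hypothetical, not a published requirement.

```python
# Approximate weight memory for each listed quantization level.
# ASSUMPTIONS: ~32.5 billion parameters ("32B" is rounded) and nominal bits per
# weight; real formats store extra scale data, so these are lower bounds.
PARAMS = 32.5e9
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gib(bits_per_weight: float, params: float = PARAMS) -> float:
    """Weights only: params * bits / 8 bytes, expressed in GiB."""
    return params * bits_per_weight / 8 / 2**30


def fits(vram_gib: float, bits_per_weight: float, kv_cache_gib: float,
         overhead_gib: float = 1.5) -> bool:
    """Crude check: weights + KV cache + runtime overhead must fit in VRAM.

    overhead_gib is a hypothetical allowance for activations and buffers.
    """
    return weight_gib(bits_per_weight) + kv_cache_gib + overhead_gib <= vram_gib


for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_gib(bits):5.1f} GiB of weights")
```

At q4 the weights come to roughly 15 GiB, which leaves headroom within 24 GB of VRAM for a short context. At fp16 the weights alone (about 60 GiB) exceed a 24 GB card several times over. As the context grows, the KV cache must be added to these figures, which is why long contexts can push even q4 beyond a single GPU.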

Conclusion

Qwen2.5 Coder 32B Base, developed by Alibaba Qwen, is a 32-billion-parameter language model with a 131,072-token context window, designed for advanced code generation, reasoning, and long-text handling. It is released under the Apache License 2.0. Its combination of coding strength and long-context processing makes it suitable for a range of demanding coding and text-processing applications.

References

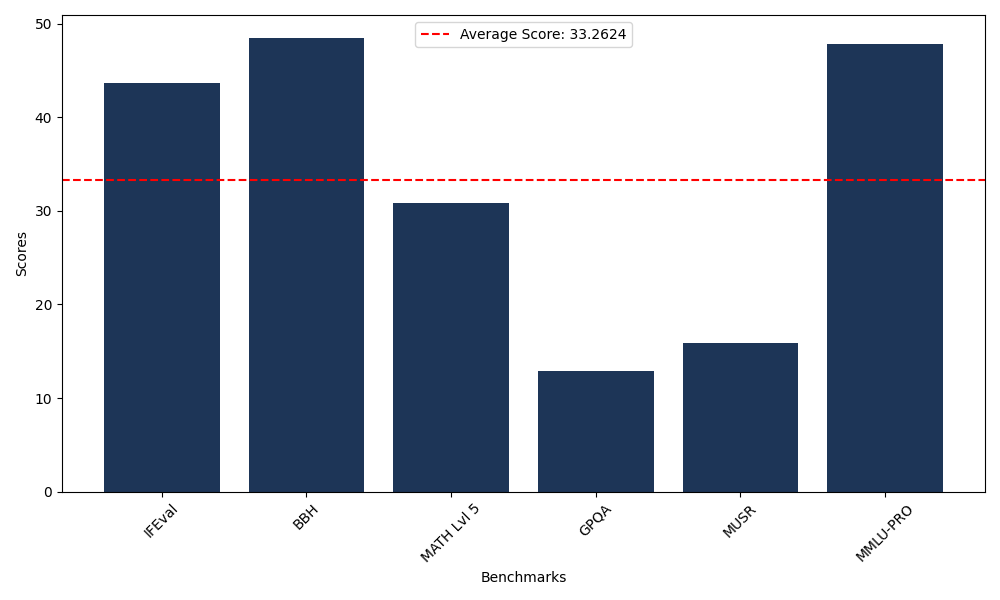

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 43.63 |
| BIG-Bench Hard (BBH) | 48.51 |
| MATH, Level 5 problems (MATH Lvl 5) | 30.89 |
| Graduate-Level Google-Proof Q&A (GPQA) | 12.86 |
| Multistep Soft Reasoning (MuSR) | 15.87 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 47.81 |
