Qwen2.5 Coder 32B Instruct - Model Details

Qwen2.5 Coder 32B Instruct is a large language model with 32B parameters, developed by Alibaba's Qwen team and released under the Apache License 2.0. It is built for advanced code generation, code reasoning, and code repair across multiple programming languages.

Description of Qwen2.5 Coder 32B Instruct

Qwen2.5-Coder is the latest series of code-specific Qwen large language models, designed to improve code generation, code reasoning, and code fixing. It was trained on 5.5 trillion tokens of diverse data, including source code, text-code grounding data, and synthetic data. It achieves state-of-the-art performance among open-source code LLMs, with coding abilities matching those of GPT-4o. The model supports a context length of up to 128K tokens (131,072 tokens). It is optimized for real-world applications such as code agents, strengthening coding ability while maintaining its mathematical and general competencies.

Parameters & Context Length of Qwen2.5 Coder 32B Instruct

Qwen2.5 Coder 32B Instruct has 32B parameters, which places it in the large-model (20B to 70B) category. This size supports robust performance on complex coding tasks, reasoning, and multiple programming languages, but it requires significant computational resources. Its 128K context length falls into the long-context (8K to 128K tokens) range. This lets it process long documents and large codebases, although long inputs demand more memory and processing power. This combination makes the model well suited to advanced applications like code agents, where both depth of understanding and handling of lengthy inputs are critical.

- Parameter Size: 32B

- Context Length: 128K (131,072 tokens)
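The following sketch makes the memory cost of a long context concrete. It estimates the key/value (KV) cache that a prompt would need at several lengths, up to the full 128K tokens. The architecture values (64 layers, 8 key/value heads, head dimension 128) are assumptions taken from the published Qwen2.5-32B configuration rather than from this page. The estimate also ignores weights and activations, so treat it as a rough illustration only.

```python
# Rough KV-cache size estimate for a decoder-only transformer with
# grouped-query attention. The architecture values below are ASSUMPTIONS
# taken from the published Qwen2.5-32B configuration; verify them against
# the model's config.json before relying on the numbers.
NUM_LAYERS = 64           # assumed num_hidden_layers
NUM_KV_HEADS = 8          # assumed num_key_value_heads (grouped-query attention)
HEAD_DIM = 128            # assumed hidden_size / num_attention_heads = 5120 / 40
CONTEXT_LENGTH = 131_072  # 128K tokens, as stated in the model description


def kv_cache_bytes(num_tokens: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens.

    The leading factor 2 accounts for one key and one value tensor per layer.
    bytes_per_value=2 corresponds to an fp16/bf16 cache.
    """
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value
    return num_tokens * per_token


if __name__ == "__main__":
    gib = 1024 ** 3
    for tokens in (8_192, 32_768, CONTEXT_LENGTH):
        print(f"{tokens:>7} tokens -> {kv_cache_bytes(tokens) / gib:5.1f} GiB")
```

Under these assumptions, each token costs 256 KiB of fp16 cache. A full 131,072-token context therefore needs about 32 GiB for the KV cache alone, which is why long inputs demand substantially more memory than short ones.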

Possible Intended Uses of Qwen2.5 Coder 32B Instruct

Qwen2.5 Coder 32B Instruct is designed for code generation, code reasoning, code fixing, and code agents, with possible applications in software development, automation, and programming assistance. Its 32B parameter size and 128K context length suggest it could support tasks such as generating complex code snippets, analyzing and debugging code, or building tools that interact with codebases. Its focus on code reasoning and multi-language support may also suit tasks like translating code between languages or optimizing existing code. Any of these uses would require thorough testing and validation against specific needs before deployment in real-world scenarios.

- code generation

- code reasoning

- code fixing

- code agents
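The following minimal sketch illustrates the code-fixing use case. It asks a locally served copy of the model to repair a failing snippet. It assumes the model is running under Ollama with the tag `qwen2.5-coder:32b`, on Ollama's default port 11434. Adjust the URL and tag to match your deployment. The prompt wording is hypothetical and is not a recommended template.

```python
import json
import urllib.request

# ASSUMPTIONS: the model is served locally by Ollama under the tag
# "qwen2.5-coder:32b", and Ollama listens on its default port 11434.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL_TAG = "qwen2.5-coder:32b"


def build_fix_request(code: str, error: str) -> dict:
    """Build a non-streaming chat request asking the model to fix `code`."""
    # Hypothetical prompt wording; tune it for your own tasks.
    prompt = (
        "The following Python code raises an error.\n\n"
        f"Code:\n{code}\n\nError:\n{error}\n\n"
        "Return the corrected code and briefly explain the fix."
    )
    return {
        "model": MODEL_TAG,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # request a single JSON response instead of a stream
    }


def send_chat(payload: dict, url: str = OLLAMA_CHAT_URL) -> str:
    """POST the payload to the chat endpoint and return the reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

With a server running, `send_chat(build_fix_request("print(1/0)", "ZeroDivisionError: division by zero"))` returns the model's reply as a string. Keeping request construction separate from the network call makes the prompt easy to inspect and test without a GPU.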

Possible Applications of Qwen2.5 Coder 32B Instruct

Possible applications of Qwen2.5 Coder 32B Instruct span code generation, code reasoning, code fixing, and code agents, though each requires careful exploration. Its 32B parameter size and 128K context length suggest it could support scenarios such as generating code snippets for developers, analyzing code logic for debugging, or automating code maintenance tasks. Its multi-language capabilities could also support tools for code translation or optimization. Each application must be thoroughly evaluated and tested before use.

- code generation

- code reasoning

- code fixing

- code agents
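Code agents and code-maintenance tools typically need to extract runnable code from the model's chat reply. The helper below is a hypothetical sketch and is not part of any Qwen tooling. It assumes the model wraps code in fenced Markdown blocks. This is common for instruction-tuned code models but not guaranteed, so production tools should also handle replies that contain no fences.

```python
import re
from typing import List, Optional

# Matches a fenced block: an opening fence with an optional language tag,
# the code body, then a closing fence. `{3} is used instead of three literal
# backticks so this snippet can itself live inside a Markdown code block.
_FENCE_RE = re.compile(r"`{3}([\w+-]*)[ \t]*\n(.*?)`{3}", re.DOTALL)


def extract_code_blocks(reply: str, language: Optional[str] = None) -> List[str]:
    """Return the bodies of fenced code blocks found in a model reply.

    If `language` is given, only blocks tagged with that language are kept
    (case-insensitive). Trailing newlines are stripped from each body.
    """
    blocks = []
    for tag, body in _FENCE_RE.findall(reply):
        if language is None or tag.lower() == language.lower():
            blocks.append(body.rstrip("\n"))
    return blocks
```

An agent loop could pass the extracted Python blocks to a sandboxed test runner and feed any failures back to the model. Sandboxing is essential, because generated code should never run unchecked.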

Quantized Versions & Hardware Requirements of Qwen2.5 Coder 32B Instruct

The q4 quantized version of Qwen2.5 Coder 32B Instruct requires a GPU with at least 24GB of VRAM for efficient operation, which balances precision and performance. It is suitable for systems with a single 24GB GPU, though 32GB or more of system memory and adequate cooling are also recommended. This version may suit code-related tasks where some loss of precision is acceptable, but thorough testing is needed to confirm it meets your requirements.

- Available quantizations: fp16, q2, q3, q4, q5, q6, q8
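Simple arithmetic offers a sanity check on the 24GB figure for q4. Weight memory is roughly the number of parameters × bits per weight ÷ 8. The sketch assumes exactly 32 billion parameters and the nominal bit width of each format. Real quantized files store extra scaling data and mix bit widths, so actual sizes are somewhat larger. The KV cache and runtime overhead also come on top of these figures.

```python
# Back-of-the-envelope estimate of the memory needed just to hold the model
# weights at each precision. ASSUMPTIONS: 32e9 parameters (the rounded figure
# used on this page) and the nominal bit width of each format. Real quantized
# files mix bit widths and store scales, so actual sizes run somewhat higher.
NUM_PARAMS = 32e9

NOMINAL_BITS = {
    "fp16": 16,
    "q8": 8,
    "q6": 6,
    "q5": 5,
    "q4": 4,
    "q3": 3,
    "q2": 2,
}


def weight_gb(bits: float, num_params: float = NUM_PARAMS) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: ~{weight_gb(bits):.0f} GB of weights")
```

At 4 bits, this gives roughly 16 GB of weights, which leaves several gigabytes of a 24GB card for the KV cache and runtime. At fp16, the weights come to about 64 GB, far more than a single 24GB card can hold.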

Conclusion

Qwen2.5 Coder 32B Instruct is a large language model with 32B parameters and a 128K context length, designed for advanced code generation, reasoning, and fixing across multiple programming languages. It is released under the Apache License 2.0 and is optimized for real-world applications like code agents, with potential for further exploration in coding tasks.

References

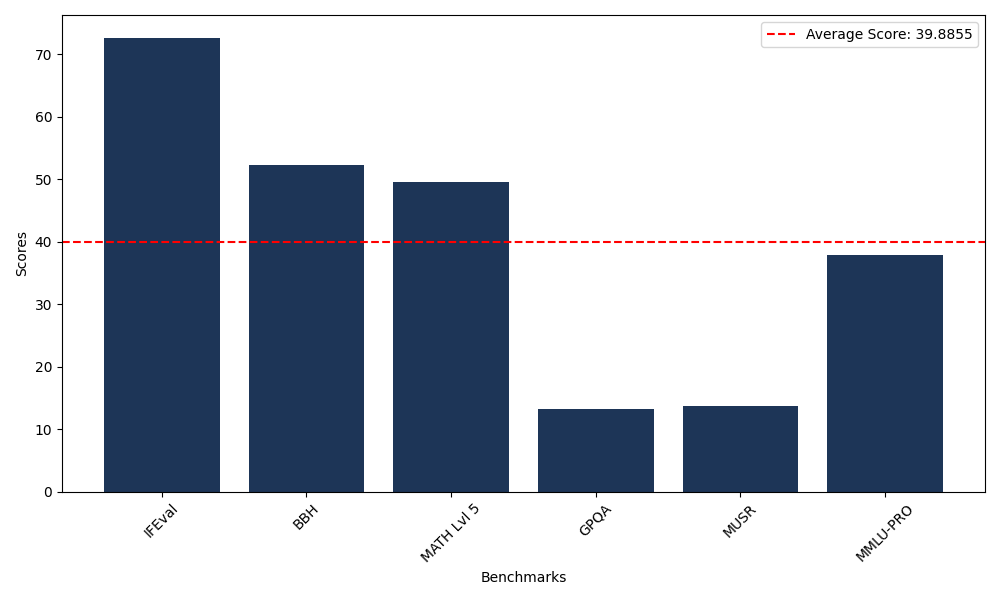

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 72.65 |
| BIG-Bench Hard (BBH) | 52.27 |
| MATH competition mathematics, Level 5 (MATH Lvl 5) | 49.55 |
| Graduate-Level Google-Proof Q&A (GPQA) | 13.20 |
| Multistep Soft Reasoning (MuSR) | 13.72 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 37.92 |
