Qwen2.5 Coder 3B Instruct - Model Details

Qwen2.5 Coder 3B Instruct is a large language model developed by Alibaba Qwen with 3 billion parameters. It is released under the Qwen Research License Agreement (Qwen-RESEARCH) and is designed for code generation, code reasoning, and code repair across multiple programming languages. The model is tuned for instruction-following tasks, which makes it a practical option for developers and researchers who need a compact coding model.

Description of Qwen2.5 Coder 3B Instruct

Qwen2.5 Coder 3B Instruct is part of the Qwen2.5 series, which is described as strong in knowledge, coding, mathematics, instruction following, long text generation, structured data understanding, and multilingual support. It accepts up to 32,768 tokens of input context and can generate up to 8,192 tokens, which lets it handle extended texts and multi-step tasks. The model is optimized for coding, reasoning, and multilingual applications, making it suitable for a range of technical and research scenarios.

Parameters & Context Length of Qwen2.5 Coder 3B Instruct

Qwen2.5 Coder 3B Instruct has 3 billion parameters, which places it in the small model category: it runs quickly and with modest resources. Its 32k context length lets it handle long texts, although long contexts need more memory and compute than short ones. This combination suits coding, reasoning, and multilingual applications where extended context is necessary but hardware is limited.

- Parameter_Size: 3b

- Context_Length: 32k
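The context figures can be turned into a simple token budget. The sketch below uses the 32,768-token input context and 8,192-token generation limit stated in the description. Treating the two as one shared window is an assumption made for illustration, and `max_new_tokens` is a hypothetical helper name, not part of any Qwen tooling.

```python
# Context-budget sketch. The two limits come from the model description;
# treating them as one shared window is an assumption for illustration.
INPUT_CONTEXT_TOKENS = 32_768   # stated input context
MAX_GENERATION_TOKENS = 8_192   # stated generation limit


def max_new_tokens(prompt_tokens: int) -> int:
    """Return how many tokens can still be generated for a given prompt size."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    remaining = INPUT_CONTEXT_TOKENS - prompt_tokens
    # Never exceed the generation cap, and never go below zero.
    return max(0, min(MAX_GENERATION_TOKENS, remaining))


if __name__ == "__main__":
    for prompt_size in (1_000, 30_000, 40_000):
        print(prompt_size, max_new_tokens(prompt_size))
```

A short prompt leaves the full generation limit available, while a prompt close to the window size leaves only the remainder. The actual behavior depends on the runtime used to serve the model.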

Possible Intended Uses of Qwen2.5 Coder 3B Instruct

Qwen2.5 Coder 3B Instruct is designed for text generation, code generation, and multilingual translation, with support for Japanese, English, Russian, Italian, French, Chinese, Korean, Portuguese, Thai, Arabic, Vietnamese, German, and Spanish. Its multilingual capability could help with creating content across languages, and its code generation could assist with software development or algorithmic problem-solving. In translation, it might help bridge language gaps in collaborative projects or educational materials. These uses still require thorough testing against specific requirements and real-world limitations.

- text generation

- code generation

- multilingual translation
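For the code generation use case, an instruction-tuned model expects its input as a sequence of chat turns. The sketch below renders a system and a user message into a ChatML-style prompt. That Qwen2.5 instruct models use ChatML markers is an assumption here, and `build_chatml_prompt` is a hypothetical helper. In practice the chat template shipped with the model's tokenizer is the authoritative source for the format.

```python
# Prompt-formatting sketch. ChatML markers are assumed here; the tokenizer's
# own chat template is the authoritative source for the real format.
from typing import Dict, List

IM_START = "<|im_start|>"
IM_END = "<|im_end|>"


def build_chatml_prompt(messages: List[Dict[str, str]]) -> str:
    """Render role/content messages as a ChatML string with an open assistant turn."""
    parts = []
    for message in messages:
        parts.append(f"{IM_START}{message['role']}\n{message['content']}{IM_END}\n")
    # Leave the assistant turn open so the model continues from here.
    parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    example = [
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": "Write a Python function that reverses a string."},
    ]
    print(build_chatml_prompt(example))
```

Building the string by hand only shows the shape of an instruct prompt. A serving library would normally apply the template automatically.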

Possible Applications of Qwen2.5 Coder 3B Instruct

Qwen2.5 Coder 3B Instruct has possible applications in multilingual content creation, where its support for Japanese, English, Russian, and other languages could help with cross-cultural communication or localization. Its code generation capabilities might assist developers on projects that need scripting or algorithmic solutions. In text generation, it could be used for drafting documents, creative writing, or summarizing information across languages. Its multilingual design might also support collaborative tools or educational resources. All of these applications require thorough evaluation to confirm they meet specific needs and to surface limitations.

- multilingual content creation

- code generation for development tasks

- text generation for documentation or creative work

- cross-language collaboration tools

Quantized Versions & Hardware Requirements of Qwen2.5 Coder 3B Instruct

Qwen2.5 Coder 3B Instruct with q4 quantization requires a GPU with at least 12 GB of VRAM for efficient operation, which puts it within reach of systems with moderate hardware. This version balances precision and performance and suits tasks like code generation or multilingual text processing. At least 32 GB of system RAM is recommended for smooth execution. Available quantized versions: fp16, q2, q3, q4, q5, q6, q8.
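To compare the listed quantization levels, the weight footprint can be approximated as parameters times bits per weight divided by eight. The sketch below applies that arithmetic to the rounded 3 billion parameter count. The bits-per-weight values are nominal assumptions taken from the level names, and `weight_memory_gb` is a hypothetical helper. Real quantized files carry extra metadata and often mix precisions.

```python
# Rough weight-memory estimate per quantization level. The bits-per-weight
# values are nominal assumptions; real quantized files carry extra metadata.
PARAMETERS = 3_000_000_000  # "3b" from the model description, rounded

NOMINAL_BITS = {
    "fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2,
}


def weight_memory_gb(parameters: int, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name}: ~{weight_memory_gb(PARAMETERS, bits):.2f} GB")
```

This estimate covers the weights only. The key-value cache for long contexts, activations, and runtime overhead add to it, so practical hardware recommendations sit well above the raw weight size.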

Conclusion

Qwen2.5 Coder 3B Instruct is a large language model with 3 billion parameters and a 32k context length, optimized for code generation, reasoning, and multilingual tasks across multiple programming and natural languages. It supports text generation, code generation, and cross-language translation, making it a versatile tool for developers and researchers who need an efficient, compact model.

References

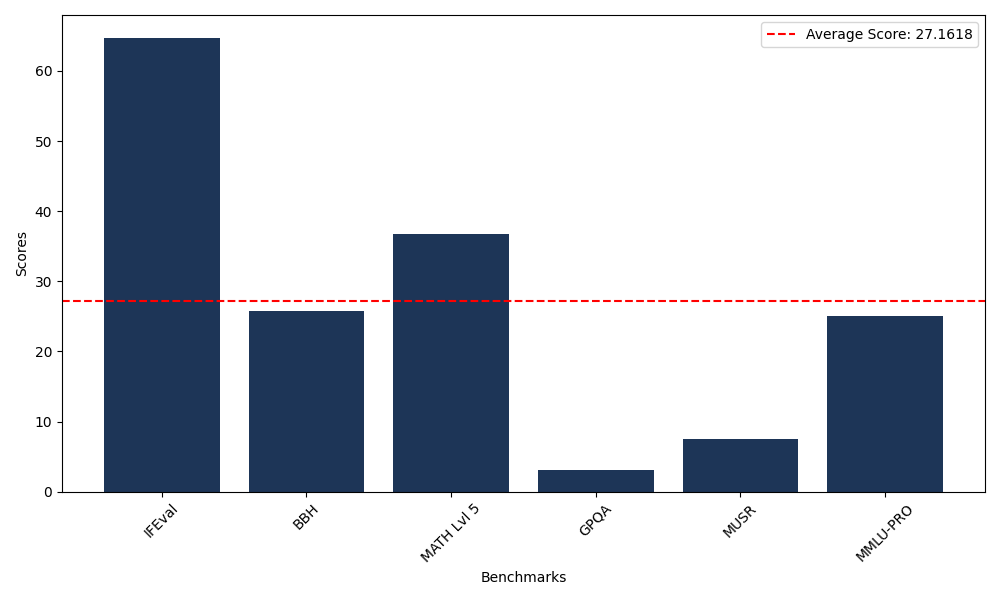

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 64.75 |
| BIG-Bench Hard (BBH) | 25.80 |
| Mathematical Reasoning Test (MATH Lvl 5) | 36.78 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.02 |
| Multistep Soft Reasoning (MuSR) | 7.57 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 25.05 |
