QwQ 32B - Model Details

QwQ 32B is a large language model developed by Alibaba's Qwen team, with 32 billion parameters. It is released under the Apache License 2.0 and is designed for advanced reasoning tasks.

Description of QwQ 32B

QwQ-32B-Preview is an experimental research model developed by the Qwen Team to push the boundaries of AI reasoning capabilities. It shows promising analytical abilities but has notable limitations: language mixing and code-switching, recursive reasoning loops, safety and ethical considerations, and performance and benchmark limitations. As a preview release, it reflects ongoing research and highlights areas for improvement.

Parameters & Context Length of QwQ 32B

QwQ-32B-Preview has 32 billion parameters, which places it in the large model category: this supports advanced reasoning but requires significant computational resources. Its 32k-token context length lets it handle extended inputs such as long documents, though longer inputs also increase memory and compute demands. The design trades capability against practical constraints, in keeping with its role as an experimental research tool.

- Parameter Size: 32b

- Context Length: 32k
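
As a rough illustration of what a 32k context budget means in practice, the sketch below checks whether a prompt is likely to fit while leaving room for the model's reply. It rests on two assumptions that are not in the source: that "32k" means 32 * 1024 tokens, and that text averages about 4 characters per token. The helper names `estimate_tokens` and `fits_in_context` are hypothetical; an exact count would need the model's own tokenizer.

```python
# Assumption: "32k" means 32 * 1024 tokens; the source gives no exact figure.
CONTEXT_TOKENS = 32 * 1024
# Assumption: a crude average of 4 characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate from character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(prompt: str, reserved_for_output: int = 4096) -> bool:
    """Check whether the prompt leaves the reserved number of output tokens free."""
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_TOKENS


if __name__ == "__main__":
    sample = "word " * 20_000  # 100,000 characters, about 25,000 estimated tokens
    print(estimate_tokens(sample), fits_in_context(sample))
```

Reserving output tokens matters for a reasoning model, since long chains of reasoning count against the same context window as the prompt.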

Possible Intended Uses of QwQ 32B

QwQ-32B-Preview is an experimental model built to explore advanced reasoning, with possible applications in research, code generation, and data analysis. Its 32 billion parameters and 32k context length suggest it can handle complex tasks, but these uses have not been validated. Research uses might involve analyzing large datasets or testing hypotheses, code generation might focus on creating or optimizing scripts, and data analysis might include identifying patterns in extensive text or numerical information. Each of these needs thorough investigation to confirm that the model is effective and fits the specific goal.

- research

- code generation

- data analysis

Possible Applications of QwQ 32B

QwQ-32B-Preview has possible applications in research, code generation, data analysis, and natural language processing tasks. These could involve exploring complex datasets, generating code snippets, analyzing trends, or handling extended text inputs. Each application should be evaluated carefully before deployment to confirm that it meets the specific need and performs effectively.

- research

- code generation

- data analysis

- natural language processing tasks

Quantized Versions & Hardware Requirements of QwQ 32B

The q4 version of QwQ-32B-Preview requires a GPU with at least 24GB VRAM for efficient operation, which puts it within reach of mid-range hardware setups. Quantizing the 32-billion-parameter model to q4 reduces memory demands compared to fp16, trading some precision for a smaller footprint, and may remain effective for tasks like research or data analysis. Limitations may still arise depending on the workload.

- fp16

- q4

- q8
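
The memory savings from quantization can be sketched with simple arithmetic. The example below estimates the memory needed for the weights alone at each listed precision. It assumes exactly 32e9 parameters (taken from the "32b" figure above) and nominal bit widths of 16, 8, and 4 bits per weight. Real quantized files usually carry some extra overhead, and inference also needs memory for the KV cache and activations, so treat these numbers as lower bounds rather than measured requirements.

```python
# Assumption: exactly 32e9 parameters, following the "32b" figure in the text.
PARAMS = 32_000_000_000
# Assumption: nominal bits per weight; real quantization formats add overhead.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(params: int, bits: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: {weight_memory_gb(PARAMS, bits):.0f} GB")
    # Prints:
    # fp16: 64 GB
    # q8: 32 GB
    # q4: 16 GB
```

Under these assumptions the q4 weights take about 16 GB, which leaves some headroom on a 24GB card for the cache and activations, consistent with the requirement stated above.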

Conclusion

QwQ-32B-Preview is a large language model with 32 billion parameters and a 32k context length, designed for advanced reasoning tasks. As an experimental research model, it has possible applications in areas like research and data analysis, but it requires further evaluation for specific use cases.

References

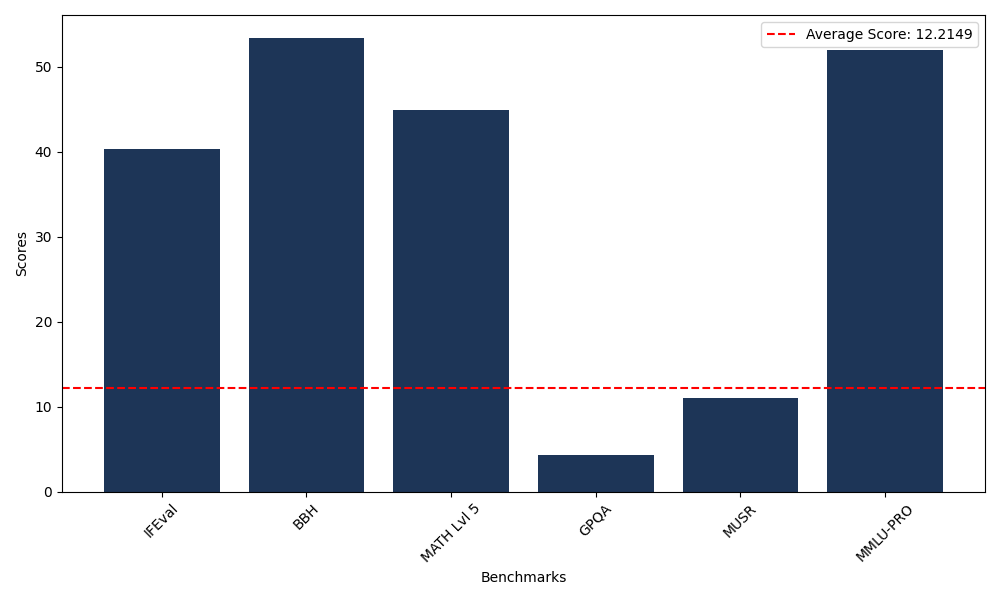

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 39.77 |
| BIG-Bench Hard (BBH) | 2.87 |
| MATH, Level 5 (MATH Lvl 5) | 16.09 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.34 |
| Multistep Soft Reasoning (MuSR) | 11.05 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 2.18 |
| Instruction-Following Evaluation (IFEval) | 40.35 |
| BIG-Bench Hard (BBH) | 53.39 |
| MATH, Level 5 (MATH Lvl 5) | 44.94 |
| Graduate-Level Google-Proof Q&A (GPQA) | 4.25 |
| Multistep Soft Reasoning (MuSR) | 9.81 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 51.98 |
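
The table lists each benchmark twice, and the source does not say which run or model variant each set of scores belongs to. As a small sketch, the averages of the two sets can be compared as follows. The grouping into `first_block` and `second_block` is an assumption based purely on row order, and both variable names are hypothetical.

```python
from statistics import mean

# Scores copied from the table in row order:
# IFEval, BBH, MATH Lvl 5, GPQA, MuSR, MMLU-PRO.
# Assumption: the first six rows form one set and the last six another.
first_block = [39.77, 2.87, 16.09, 1.34, 11.05, 2.18]
second_block = [40.35, 53.39, 44.94, 4.25, 9.81, 51.98]

first_avg = round(mean(first_block), 2)
second_avg = round(mean(second_block), 2)

if __name__ == "__main__":
    print(f"first block average:  {first_avg}")
    print(f"second block average: {second_avg}")
```

The gap between the two averages comes mostly from BBH, MATH Lvl 5, and MMLU-PRO, while the IFEval and MuSR scores are close in both sets.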
