R1 1776 671B - Model Details

R1 1776 671B is a large language model developed by Perplexity Enterprise with 671B parameters. It is released under the MIT License, which permits open access and flexible reuse. The model prioritizes reducing bias and censorship in multilingual contexts while maintaining strong reasoning capabilities across languages and tasks.

Description of R1 1776 671B

An open-source reasoning model that Perplexity post-trained from DeepSeek-R1 to reduce biased or censored responses while preserving the base model's reasoning performance.

Parameters & Context Length of R1 1776 671B

The R1 1776 671B model has 671B parameters, placing it among very large models, which handle complex tasks well but demand substantial computational resources. Its 4k context length is short: it suits concise tasks but limits the model’s ability to process extended texts. The high parameter count supports advanced reasoning and multilingual capabilities, while the short context may restrict performance on lengthy documents or detailed analysis.

- Parameter Size: 671B

- Context Length: 4k
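One practical consequence of a short context is that long inputs have to be split before they are sent to the model. The following is a minimal sketch of that idea, not the model's own tooling. It assumes "4k" means 4096 tokens, reserves a hypothetical 1024 tokens for the reply, and approximates token counts as four characters per token instead of using the model's real tokenizer. All names are illustrative.

```python
CONTEXT_TOKENS = 4096       # "4k" from this page, read as 4096 tokens (assumption)
RESERVED_FOR_OUTPUT = 1024  # hypothetical budget kept free for the model's reply
CHARS_PER_TOKEN = 4         # rough heuristic, not the model's tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def split_to_fit(text: str, budget_tokens: int) -> list[str]:
    """Greedily pack whitespace-separated words into chunks within the budget.

    A single word that alone exceeds the budget is emitted as its own chunk.
    """
    chunks, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}" if current else word
        if current and estimate_tokens(candidate) > budget_tokens:
            chunks.append(current)  # current chunk is full; start a new one
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


prompt_budget = CONTEXT_TOKENS - RESERVED_FOR_OUTPUT
document = "word " * 10000
pieces = split_to_fit(document, prompt_budget)
print(f"{len(pieces)} chunks, largest ~{max(map(estimate_tokens, pieces))} tokens")
```

For production use, the character heuristic would need to be replaced with counts from the tokenizer that ships with the model, since real token boundaries vary by language and content.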

Possible Intended Uses of R1 1776 671B

The R1 1776 671B model is designed for general reasoning and problem-solving, with chatbot applications and task automation with reflection as possible use cases. Its step-by-step reasoning suggests value in scenarios that require iterative refinement of outputs, such as educational tools for interactive learning, creative writing assistance, or collaborative workflows that need real-time feedback. These uses require careful evaluation against specific needs and constraints. The model’s 671B parameters support complex reasoning, but the compute they require, together with the 4k context length, may limit scalability in some environments.

- general reasoning and problem-solving

- chatbot applications

- task automation with reflection

Possible Applications of R1 1776 671B

The R1 1776 671B model has possible applications in multilingual content creation, where its focus on reducing bias and censorship could help generate diverse, culturally aware materials. Other scenarios include interactive educational tools that use its reasoning capabilities for task automation or collaborative problem-solving. Its ability to reflect on reasoning errors might also aid creative writing or iterative design processes. Each application must be validated against specific goals and constraints and thoroughly tested before deployment.

- multilingual content creation

- interactive educational tools

- collaborative problem-solving

- creative writing or iterative design processes

Quantized Versions & Hardware Requirements of R1 1776 671B

The medium-precision q4 version of R1 1776 671B needs a multi-GPU setup with adequate cooling. At about 4 bits per parameter, the 671B weights alone occupy roughly 335 GB, and practical q4 formats are somewhat larger, so the combined VRAM (or VRAM plus system memory when layers are offloaded) must exceed that figure with headroom for the KV cache and activations. A single high-end consumer or professional GPU such as the RTX 4090 (24 GB) or A100 (40 or 80 GB) cannot hold the model, so several such cards are required. Users should verify total VRAM and compatibility before deployment.

- Quantized Versions: fp16, q4, q8
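As a rough sanity check on memory needs, the weight footprint can be estimated from the parameter count and the number of bits stored per weight. The sketch below assumes nominal widths of 16, 8, and 4 bits for fp16, q8, and q4. Real quantization formats add per-block metadata, and the KV cache and activations need extra room, so actual requirements are higher. The function names and the 80 GB-per-GPU figure are illustrative assumptions.

```python
import math

PARAMS = 671e9  # parameter count stated on this page

# Assumed nominal bit widths; real formats store extra scaling metadata.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(params: float, bits: int) -> float:
    """Weight footprint in decimal gigabytes (1 GB = 1e9 bytes), weights only."""
    return params * bits / 8 / 1e9


def min_gpus(memory_gb: float, vram_per_gpu_gb: float) -> int:
    """Smallest number of GPUs whose combined VRAM covers memory_gb."""
    return math.ceil(memory_gb / vram_per_gpu_gb)


for name, bits in BITS_PER_WEIGHT.items():
    gb = weight_memory_gb(PARAMS, bits)
    print(f"{name}: ~{gb:.1f} GB of weights, at least {min_gpus(gb, 80)} x 80 GB GPUs")
```

Under these assumptions, the q4 weights come to about 335.5 GB, which is a lower bound on the memory needed to load the model, not a full deployment estimate.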

Conclusion

R1 1776 671B is a large language model developed by Perplexity Enterprise with 671B parameters, released under the MIT License. It focuses on mitigating bias and censorship in multilingual contexts while maintaining strong reasoning abilities.

References

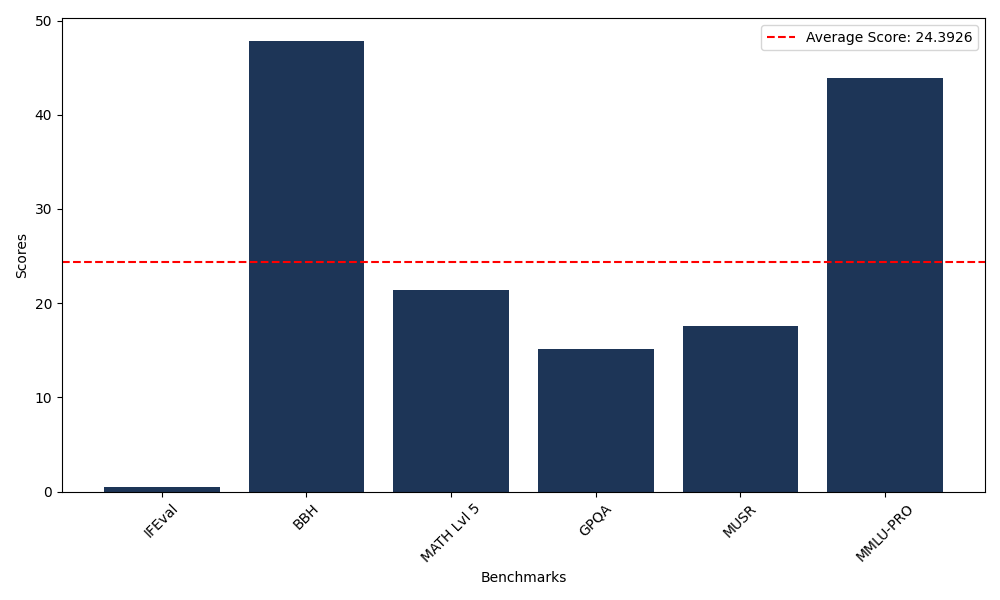

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 0.45 |
| Big Bench Hard (BBH) | 47.87 |
| Mathematical Reasoning Test (MATH Lvl 5) | 21.45 |
| Graduate-Level Google-Proof Q&A (GPQA) | 15.10 |
| Multistep Soft Reasoning (MuSR) | 17.54 |
| Massive Multitask Language Understanding (MMLU-PRO) | 43.95 |
