Smollm 135M Base - Model Details

Smollm 135M Base is a compact language model developed by Hugging Face Smol Models Research, with 135M parameters. It is released under the Apache License 2.0 and is designed to deliver efficient performance through carefully curated training data and a compact architecture, making it suitable for small to medium-scale applications.

Description of Smollm 135M Base

SmolLM is a series of small language models available in three sizes: 135M, 360M, and 1.7B parameters. The models were trained on Cosmo-Corpus, a high-quality dataset comprising Cosmopedia v2 (28B tokens of synthetic textbooks and stories), Python-Edu (4B tokens of educational Python samples), and FineWeb-Edu (220B tokens of deduplicated educational web content). According to Hugging Face, the models perform strongly on common-sense reasoning and world-knowledge benchmarks, outperforming other models in their size categories. Their design emphasizes efficiency and effectiveness for small to medium-scale applications.
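As a quick check on the corpus figures above, the following Python sketch computes each subset's share of the combined token count. It uses only the three token counts listed here; the resulting proportions describe the corpus as listed and are not necessarily the sampling mixture used during training.

```python
# Token counts (billions) for each Cosmo-Corpus subset, as listed above.
CORPUS_BILLIONS = {
    "Cosmopedia v2": 28,
    "Python-Edu": 4,
    "FineWeb-Edu": 220,
}


def corpus_shares(counts):
    """Return each subset's share of the combined token count, in percent."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


if __name__ == "__main__":
    print(f"Total: {sum(CORPUS_BILLIONS.values())}B tokens")
    for name, pct in corpus_shares(CORPUS_BILLIONS).items():
        print(f"{name:>14}: {pct:5.1f}%")
```

By this count the listed corpus totals 252B tokens, and FineWeb-Edu accounts for roughly 87% of it.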

Parameters & Context Length of Smollm 135M Base

Smollm 135M Base has 135M parameters, which places it in the small-model category and keeps inference fast and resource-efficient for simple tasks. Its 4k context length counts as short: it suits concise tasks but limits the model's ability to process extended texts in a single pass. Together, the small parameter count and short context reflect a design that prioritizes efficiency and accessibility, making the model a good fit where computational resources are constrained.

- Name: Smollm 135M Base

- Parameter Size: 135M

- Context Length: 4k

- Implications: A small parameter count enables fast, resource-efficient inference; the short context length suits brief tasks but limits handling of long texts.
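Because a 4k window cannot hold a long document in one pass, a common workaround is to split the input into overlapping windows and process each one separately. The sketch below assumes that "4k" means 4,096 tokens and that the text has already been tokenized into a list of IDs; the function name `chunk_tokens` and the `reserve` and `overlap` defaults are hypothetical, illustrative choices, not part of any SmolLM tooling.

```python
def chunk_tokens(token_ids, context_len=4096, reserve=256, overlap=128):
    """Split a token sequence into overlapping windows.

    Each window holds at most `context_len - reserve` tokens, leaving
    `reserve` positions free for generated output. Consecutive windows
    share `overlap` tokens so text cut at a boundary keeps some context.
    (context_len=4096 is an assumed reading of the "4k" figure.)
    """
    window = context_len - reserve
    if window <= overlap:
        raise ValueError("context_len - reserve must exceed overlap")
    if not token_ids:
        return []
    step = window - overlap
    chunks = []
    start = 0
    while True:
        chunks.append(token_ids[start:start + window])
        if start + window >= len(token_ids):
            break  # this window already reaches the end of the input
        start += step
    return chunks


if __name__ == "__main__":
    # Stand-in for a tokenized long document (10,000 dummy token IDs).
    chunks = chunk_tokens(list(range(10_000)))
    print([len(c) for c in chunks])
```

With these defaults the dummy 10,000-token input is split into three windows; each window's output can then be combined, for example by summarizing the per-window summaries.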

Possible Intended Uses of Smollm 135M Base

Smollm 135M Base, with 135M parameters and a 4k context length, suits tasks where efficiency and accessibility matter more than raw capability. Possible uses include drafting documents, where its small size allows quick generation of short, structured text, and coding assistance for simple programming tasks. In educational content creation, it might generate summaries, examples, or interactive materials, although the short context limits how much source material it can handle at once. Because this is a base model rather than an instruction-tuned one, these uses generally depend on careful prompting, and each should be evaluated against specific needs before adoption. The model's focus on small-scale performance suggests it could do well in resource-constrained environments, but further testing is needed to confirm its effectiveness in these areas.

- drafting documents

- coding assistance

- educational content creation
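Since this is a base checkpoint that continues text rather than following chat-style instructions, tasks such as drafting or educational summaries are usually framed as completion prompts that include a few worked examples. The helper below is a hypothetical sketch of that pattern: the `Topic:`/`Summary:` labels and the function name are arbitrary assumptions, and the resulting string would be passed to whatever inference runtime you use.

```python
def build_fewshot_prompt(examples, query, instruction=""):
    """Format (topic, summary) pairs as plain text that a base model can continue.

    The final block is left open after "Summary:" so the most natural
    continuation is a summary of `query`.
    """
    parts = [instruction.strip()] if instruction.strip() else []
    for topic, summary in examples:
        parts.append(f"Topic: {topic}\nSummary: {summary}")
    parts.append(f"Topic: {query}\nSummary:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_fewshot_prompt(
        examples=[
            ("Photosynthesis", "Plants use sunlight, water, and carbon dioxide to make sugar."),
            ("Evaporation", "Heat turns liquid water into water vapor."),
        ],
        query="Condensation",
        instruction="Write a one-sentence summary for students.",
    )
    print(prompt)
```

Keeping the examples short also leaves more of the 4k window for the model's output.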

Possible Applications of Smollm 135M Base

These applications share the same trade-off: the model's small size makes it fast and inexpensive to run, but its modest capacity and 4k context length limit output quality on long or complex inputs. Drafting concise documents, offering basic coding assistance, and generating summaries or interactive educational materials are plausible candidates. Each application must be thoroughly evaluated and tested before use.
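For coding assistance, a base model given a function signature and docstring will often keep writing past the function it was asked to complete. A common remedy is to cut the raw completion at a stop sequence. This sketch assumes Python output and uses illustrative stop strings; the function name is hypothetical, and the completion in the usage example is made up for demonstration, not actual model output.

```python
# Illustrative stop strings: a new top-level def/class or a main guard
# usually means the requested function is finished.
DEFAULT_STOPS = ("\ndef ", "\nclass ", "\nif __name__")


def truncate_at_stop(completion, stop_sequences=DEFAULT_STOPS):
    """Return `completion` cut just before the earliest stop sequence, if any."""
    cut = len(completion)
    for stop in stop_sequences:
        idx = completion.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return completion[:cut]


if __name__ == "__main__":
    prompt = 'def add(a, b):\n    """Return the sum of a and b."""\n'
    # Made-up raw completion that runs on into an unrequested second function.
    raw = "    return a + b\n\ndef sub(a, b):\n    return a - b\n"
    print(prompt + truncate_at_stop(raw))
```

Many inference runtimes can apply stop sequences during generation, which also saves compute; post-hoc truncation like this works regardless of the runtime.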

Quantized Versions & Hardware Requirements of Smollm 135M Base

Smollm 135M Base's medium q4 version offers a balance between precision and performance. At roughly 4 bits per weight, a 135M-parameter model's weights occupy well under 1 GB, so it sits comfortably in the sub-1B-parameter tier, where 4GB–8GB of VRAM is more than sufficient; a model this small can also run on a CPU. This makes it accessible on modest or mid-range hardware and suitable for lightweight tasks where computational efficiency is key. Each use case should still be tested against the specific hardware available.

- Smollm 135M Base: fp16, q2, q3, q4, q5, q6, q8
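To put the hardware figures in perspective, weight storage can be estimated as parameters * bits per weight / 8 bytes. The sketch below uses the 135M figure from this page and nominal bit widths for each listed format; it is an approximation that ignores per-block quantization scales, tensors kept at higher precision, the KV cache, activations, and runtime overhead, all of which add to real memory use.

```python
PARAMS = 135_000_000  # parameter count stated on this page

# Nominal bits per weight for each listed format. Real quantized files also
# store per-block scales (and may keep some tensors at higher precision),
# so actual sizes run somewhat above these figures.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_megabytes(params, bits_per_weight):
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return params * bits_per_weight / 8 / 1e6


if __name__ == "__main__":
    for fmt, bits in NOMINAL_BITS.items():
        print(f"{fmt:>4}: ~{weight_megabytes(PARAMS, bits):.0f} MB")
```

Even the fp16 weights come to about 270 MB by this estimate, which is why memory for the weights themselves is rarely the bottleneck at this model size.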

Conclusion

Smollm 135M Base is a compact language model with 135M parameters and a 4k context length, optimized for efficient performance in small to medium-scale tasks. It is available in several quantized versions, including q4, which balances precision and performance and runs comfortably on modest hardware.

References

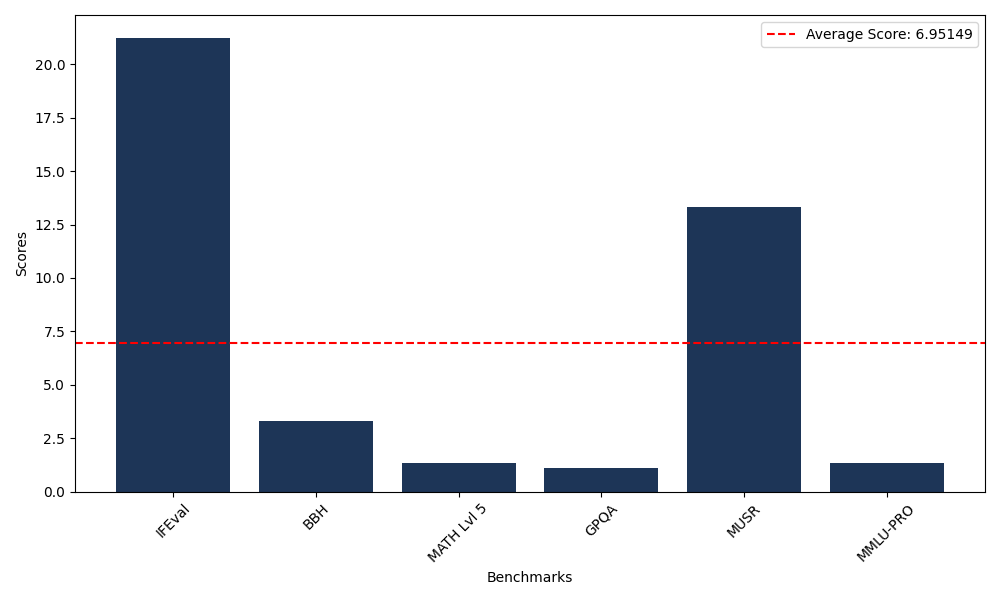

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 21.25 |
| BIG-Bench Hard (BBH) | 3.29 |
| MATH, Level 5 problems (MATH Lvl 5) | 1.36 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.12 |
| Multistep Soft Reasoning (MUSR) | 13.34 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 1.36 |
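These scores are very low in absolute terms. Assuming they follow the Open LLM Leaderboard v2 convention, in which each benchmark is rescaled so that random guessing maps to 0 and a perfect score to 100, the sketch below inverts that rescaling to recover an approximate raw accuracy. The random-guess baselines (10% for MMLU-PRO's ten answer options, 25% for GPQA's four) are also assumptions; BBH and MUSR are omitted because their baselines vary by subtask.

```python
def normalize(raw_pct, baseline_pct):
    """Rescale an accuracy so the random-guess baseline maps to 0 and 100% to 100.

    Scores below the baseline are clipped to 0 (assumed leaderboard-style rule).
    """
    return max(0.0, (raw_pct - baseline_pct) / (100.0 - baseline_pct) * 100.0)


def implied_raw(normalized_pct, baseline_pct):
    """Invert `normalize` for a score above the baseline."""
    return baseline_pct + normalized_pct * (100.0 - baseline_pct) / 100.0


if __name__ == "__main__":
    # Assumed random-guess baselines: 10 options for MMLU-PRO, 4 for GPQA.
    for name, score, baseline in [("MMLU-PRO", 1.36, 10.0), ("GPQA", 1.12, 25.0)]:
        raw = implied_raw(score, baseline)
        print(f"{name}: normalized {score} -> raw accuracy ~{raw:.2f}% (chance {baseline}%)")
```

Under these assumptions, both results land within roughly one percentage point of chance (about 11.2% and 25.8%), which is consistent with a 135M-parameter base model on these demanding benchmarks.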
