Smollm 135M Instruct - Model Details

Smollm 135M Instruct is a small language model developed by the Hugging Face Smol Models Research Enterprise community. It has 135 million parameters and is released under the Apache License 2.0. The project aims to get strong performance out of small to medium-sized models through careful data curation and architecture choices, and this model targets efficient language understanding and generation.

Description of Smollm 135M Instruct

Smollm 135M Instruct is part of the SmolLM series, which includes models with 135 million, 360 million, and 1.7 billion parameters. It is trained on the SmolLM-Corpus, a curated dataset of high-quality educational and synthetic data. The Instruct variant is fine-tuned using DPO (Direct Preference Optimization) to enhance response quality. It supports tasks like general knowledge, creative writing, and basic Python programming, but only in English. The model struggles with arithmetic and complex reasoning, so it is best suited to smaller-scale applications where efficiency and simplicity matter more than accuracy on hard problems.

Parameters & Context Length of Smollm 135M Instruct

Smollm 135M Instruct has 135 million parameters and a 4k context length, which places it among small models with short context windows. It runs quickly and with few resources on simple tasks, which makes it a good fit for lightweight applications. The trade-off is that the small parameter count and the 4k window limit how well it handles long or complex inputs, so it is less suited to tasks that need extensive context.

- Parameter Size: 135M

- Context Length: 4k
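
The practical effect of the context length can be sketched as a simple token budget: the prompt and the generated reply share the same window. The snippet below is only an illustration. It assumes that "4k" means 4,096 tokens, and the helper names `max_new_tokens` and `fits` are hypothetical, not part of any library.

```python
CONTEXT_LENGTH = 4 * 1024  # assumption: "4k" is read as 4,096 tokens


def max_new_tokens(prompt_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many tokens can still be generated after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Never report a negative budget when the prompt alone overflows the window.
    return max(context_length - prompt_tokens, 0)


def fits(prompt_tokens: int, reply_tokens: int, context_length: int = CONTEXT_LENGTH) -> bool:
    """Check whether a prompt plus the desired reply fits in the window."""
    return prompt_tokens + reply_tokens <= context_length


if __name__ == "__main__":
    print(max_new_tokens(3000))  # remaining budget after a 3,000-token prompt
    print(fits(3000, 1500))      # whether a 1,500-token reply would still fit
```

Under that assumption, a 3,000-token prompt leaves room for 1,096 generated tokens, so a 1,500-token reply would not fit.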

Possible Intended Uses of Smollm 135M Instruct

Smollm 135M Instruct may be useful for general knowledge assistance, creative writing tasks, and basic Python programming support, for example answering straightforward informational questions, generating text for creative projects, or offering guidance on simple coding challenges. These uses would need further testing to confirm their effectiveness, because performance may vary with the complexity of the task. Suitability depends on the specific requirements of the task and on the model’s inherent limitations.

- general knowledge assistance

- creative writing tasks

- basic Python programming support
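
One way to try the uses listed above is through the Hugging Face `transformers` library. The following is a minimal sketch, not an official recipe. The checkpoint name `HuggingFaceTB/SmolLM-135M-Instruct` is an assumption, since this page does not name a repository, and `build_messages` and `generate_reply` are hypothetical helper names. It also assumes `transformers` and PyTorch are installed.

```python
MODEL_ID = "HuggingFaceTB/SmolLM-135M-Instruct"  # assumption: not named on this page


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Wrap a single user prompt in the chat-message format."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt: str, max_new_tokens: int = 128) -> str:
    """Generate one reply; downloads the checkpoint on first use."""
    # Imported lazily so build_messages works without the library installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Render the conversation with the checkpoint's own chat template.
    prompt = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated text is returned.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Write a Python function that reverses a string.")` would exercise the basic Python support use case. Given the limitations described earlier, the output should be checked rather than trusted.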

Possible Applications of Smollm 135M Instruct

Smollm 135M Instruct could be applied to general knowledge assistance, creative writing tasks, and basic Python programming support, such as handling straightforward information queries, generating text for creative projects, or providing guidance on simple coding challenges. Whether it fits a given role needs further investigation against the specific requirements. Effectiveness may vary with the complexity of the task, so each potential use case should be evaluated thoroughly before deployment.

- general knowledge assistance

- creative writing tasks

- basic Python programming support

Quantized Versions & Hardware Requirements of Smollm 135M Instruct

Smollm 135M Instruct’s medium q4 version balances precision against performance. The listing recommends a GPU with at least 8GB VRAM and a system with 32GB RAM for smooth operation, but this is a comfortable ceiling rather than a hard minimum: at 4 bits per weight, 135 million parameters amount to nominally about 68 MB of weights before quantization overhead, so the model also runs on far more modest hardware. The q4 quantization reduces memory usage while maintaining reasonable accuracy, making it a possible choice for users with limited resources.

- fp16, q2, q3, q4, q5, q6, q8
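
To put the listed formats in perspective, the size of the weights alone can be estimated from the parameter count. This is a rough sketch under one stated assumption: each format uses its nominal number of bits per weight. Real quantized files are somewhat larger, because they store scaling metadata and often keep some tensors at higher precision, and running the model also needs memory for activations and the KV cache.

```python
PARAMS = 135_000_000  # parameter count from the model details

# Assumption: nominal bits per weight for each listed format.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_megabytes(fmt: str, params: int = PARAMS) -> float:
    """Approximate size of the weights alone, in megabytes (10**6 bytes)."""
    return params * BITS_PER_WEIGHT[fmt] / 8 / 1_000_000


if __name__ == "__main__":
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt:>4}: {weight_megabytes(fmt):7.1f} MB")
```

By this estimate the weights take about 270 MB in fp16 and about 67.5 MB in q4.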

Conclusion

Smollm 135M Instruct is a small language model developed by the Hugging Face Smol Models Research Enterprise community, featuring 135 million parameters and released under the Apache License 2.0. It is optimized for efficient performance in small to medium-scale tasks, trained on a curated dataset, and part of the SmolLM series designed for balanced accuracy and resource usage.

References

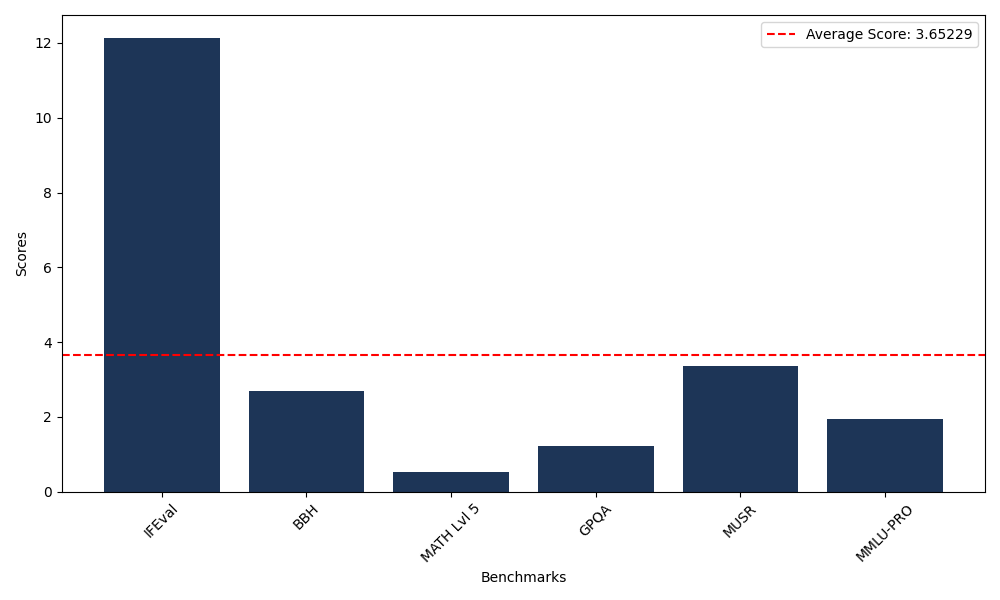

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 12.14 |
| Big Bench Hard (BBH) | 2.69 |
| Mathematical Reasoning Test (MATH Lvl 5) | 0.53 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.23 |
| Multistep Soft Reasoning (MuSR) | 3.37 |
| Massive Multitask Language Understanding (MMLU-PRO) | 1.96 |
