Smollm 360M Instruct - Model Details

Smollm 360M Instruct is an instruction-tuned language model developed by Hugging Face Smol Models Research. It has 360 million parameters and is released under the Apache License 2.0. The model relies on careful data curation and architecture choices to deliver strong performance for its size in small to medium-sized applications.

Description of Smollm 360M Instruct

SmolLM 360M is a compact language model designed to handle standard prompts and everyday conversations efficiently. It is part of the SmolLM series, which includes models with 135M, 360M, and 1.7B parameters. Trained on the SmolLM-Corpus, a curated dataset of high-quality educational and synthetic data, the model is then fine-tuned on specialized datasets such as WebInstructSub and StarCoder2-Self-OSS-Instruct. DPO (Direct Preference Optimization) and tailored training recipes further improve its alignment and overall capabilities. The 360M version balances size and performance, making it suitable for applications that need compact yet capable language understanding.
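To make the DPO step more concrete, the following is a minimal sketch of the standard DPO loss for a single preference pair. It is not the training code used for this model. The function name `dpo_loss`, its arguments, and the default `beta=0.1` are illustrative assumptions, and the inputs are assumed to be summed log-probabilities of a full response under the trained policy and a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair.

    All names and the default beta are illustrative assumptions,
    not values taken from the SmolLM training recipe.
    """
    # Implicit rewards: how much the policy has shifted from the reference.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward

    # loss = -log(sigmoid(margin)) = log(1 + exp(-margin)),
    # computed in a numerically stable way for either sign of margin.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Policy identical to the reference: margin is 0, loss is log(2).
    print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
    # Policy favors the chosen response more than the reference does.
    print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

The loss falls below log(2) as the policy raises the chosen response and lowers the rejected one relative to the reference, which is the behavior preference optimization is meant to encourage.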

Parameters & Context Length of Smollm 360M Instruct

Smollm 360M Instruct has 360 million parameters and a 4,000-token context length. Its parameter count places it in the small model category: it runs quickly with modest resources and suits tasks of moderate complexity. The 4,000-token context falls in the short range, which works well for concise interactions but limits the model's ability to process very long texts.

- Parameter Size: 360M

- Context Length: 4k tokens
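A short context window means the prompt and the generated reply share one token budget. The sketch below shows that arithmetic under the 4,000-token figure stated on this page. The constant `CONTEXT_LENGTH` and both helper names are hypothetical, and the token counts are assumed to come from whatever tokenizer the runtime uses.

```python
# Context length as stated on this page; treat it as an assumption and
# check the configuration of the runtime you actually use.
CONTEXT_LENGTH = 4000


def fits_in_context(prompt_tokens, max_new_tokens,
                    context_length=CONTEXT_LENGTH):
    """Return True if prompt plus reply fit inside the context window."""
    return prompt_tokens + max_new_tokens <= context_length


def max_prompt_tokens(max_new_tokens, context_length=CONTEXT_LENGTH):
    """Largest prompt that still leaves room for max_new_tokens of output."""
    return max(0, context_length - max_new_tokens)


if __name__ == "__main__":
    print(fits_in_context(3500, 500))   # prompt and reply exactly fill the window
    print(fits_in_context(3501, 500))   # one token too many
    print(max_prompt_tokens(512))       # budget left for the prompt
```

Reserving the reply budget first and truncating the prompt to what remains is one simple way to avoid silently cutting off generated text.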

Possible Intended Uses of Smollm 360M Instruct

With 360 million parameters and a 4,000-token context length, Smollm 360M Instruct is a possible tool for tasks that need efficient processing and only moderate complexity. It could be explored for general knowledge questions, where it might provide concise answers. It may also help with creative writing, offering suggestions or generating text from prompts. It could additionally be tested for basic Python programming assistance, such as simple coding queries or explanations. These are possible uses only, and each needs further investigation to confirm its effectiveness and suitability for a specific scenario.

- General knowledge questions

- Creative writing tasks

- Basic Python programming assistance

Possible Applications of Smollm 360M Instruct

The same three areas are the most likely candidates for concrete applications of Smollm 360M Instruct: answering general knowledge questions, supporting creative writing, and assisting with basic Python programming. Each application must be thoroughly evaluated and tested before deployment to confirm its reliability and alignment with the intended goals.

- General knowledge questions

- Creative writing tasks

- Basic Python programming assistance

Quantized Versions & Hardware Requirements of Smollm 360M Instruct

The q4 quantization of Smollm 360M Instruct offers a possible balance between precision and performance. A GPU with at least 8GB of VRAM is listed for efficient operation, which puts this version within reach of consumer-grade graphics cards. The model is available in fp16, q2, q3, q4, q5, q6, and q8 versions, each making a different trade-off between model size, speed, and accuracy, so users can pick the option that fits their hardware constraints and application needs.

- fp16

- q2

- q3

- q4

- q5

- q6

- q8
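The size side of this trade-off can be approximated with simple arithmetic: parameters times bits per weight. The sketch below is a rough lower bound and not a measurement. The bit widths in `NOMINAL_BITS` are assumed from the version names, and real quantized files add overhead for scales and metadata, while inference also needs memory for activations and the key-value cache.

```python
PARAMS = 360_000_000  # parameter count stated on this page

# Nominal bits per weight, assumed from the version names above.
# Actual quantization formats store extra scale data per block.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def estimate_weight_mb(bits, params=PARAMS):
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return params * bits / 8 / 1_000_000


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: about {estimate_weight_mb(bits):.0f} MB of weights")
```

Under these assumptions the weights alone range from roughly 90 MB at q2 to 720 MB at fp16, so the choice between versions is mostly about accuracy and speed on the target hardware.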

Conclusion

Smollm 360M Instruct is a compact language model with 360 million parameters and a 4,000-token context length, designed for efficient performance in tasks such as general knowledge questions, creative writing, and basic programming. It is part of the SmolLM series and is available in multiple quantized versions that trade precision against hardware requirements, making it suitable for deployment on systems with moderate computational resources.

References

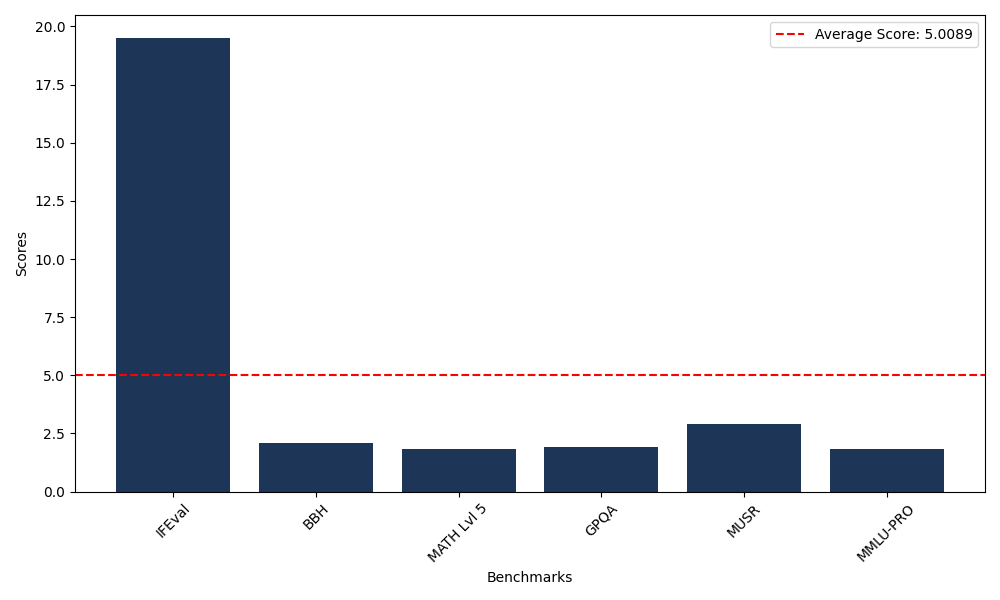

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 19.52 |
| BIG-Bench Hard (BBH) | 2.08 |
| Competition Mathematics, Level 5 (MATH Lvl 5) | 1.81 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.90 |
| Multistep Soft Reasoning (MuSR) | 2.90 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 1.85 |
