SmolLM2 1.7B Instruct - Model Details

SmolLM2 1.7B Instruct is a language model with 1.7B parameters developed by Hugging Face TB Research. It is designed to be compact enough for on-device execution. The model is released under the Apache License 2.0 (Apache-2.0), which permits both research and commercial use. As an instruct variant, it is tuned to follow instructions in interactive use.

Description of SmolLM2 1.7B Instruct

SmolLM2 is a family of compact language models available in three sizes: 135M, 360M, and 1.7B parameters. The 1.7B variant was trained on 11 trillion tokens from diverse datasets and is described as strong in instruction following, knowledge, reasoning, and mathematics. It supports tasks such as text rewriting, summarization, and function calling, and its compact size allows efficient on-device execution. The model targets interactive applications and assistive tools in resource-constrained environments, where it offers a balance between performance and efficiency.

Parameters & Context Length of SmolLM2 1.7B Instruct

SmolLM2 1.7B Instruct has 1.7B parameters, which places it among small models that favor fast, resource-efficient inference on tasks of moderate complexity. Its 4k context length is short, so it suits concise interactions better than long-document processing. The design emphasizes efficient on-device execution, which lets it handle tasks like text rewriting and summarization with low computational demands.

- Name: SmolLM2 1.7B Instruct

- Parameter Size: 1.7B

- Context Length: 4k

- Implications: Efficient for on-device tasks, limited to short to moderate context lengths.
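
To make the short-context implication concrete, here is a minimal sketch of splitting a long input into pieces that fit a 4k window before sending each piece to the model. The values are assumptions rather than figures from the model card: "4k" is taken as 4096 tokens, token counts are approximated at about 4 characters per token, and 512 tokens are reserved for the prompt template and the reply. For exact counts, the model's own tokenizer should be used instead of the heuristic.

```python
# Assumptions (not from the model card): "4k" means 4096 tokens, text averages
# ~4 characters per token, and 512 tokens are kept free for prompt and reply.
CONTEXT_TOKENS = 4096
CHARS_PER_TOKEN = 4      # rough heuristic; a real tokenizer gives exact counts
RESERVED_TOKENS = 512    # hypothetical headroom


def estimate_tokens(text: str) -> int:
    """Estimate the token count by ceiling-dividing the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def split_to_fit(text: str, budget_tokens: int = CONTEXT_TOKENS - RESERVED_TOKENS) -> list[str]:
    """Split text on whitespace into chunks whose estimated size fits the budget.

    A single word longer than the budget is kept whole in its own chunk.
    """
    max_chars = budget_tokens * CHARS_PER_TOKEN
    chunks, current, current_len = [], [], 0
    for word in text.split():
        added = len(word) + (1 if current else 0)  # +1 for the joining space
        if current and current_len + added > max_chars:
            chunks.append(" ".join(current))
            current, current_len = [word], len(word)
        else:
            current.append(word)
            current_len += added
    if current:
        chunks.append(" ".join(current))
    return chunks
```

Each chunk can then be summarized or rewritten separately, and the partial results combined in a final pass.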

Possible Intended Uses of SmolLM2 1.7B Instruct

With 1.7B parameters and a 4k context length, SmolLM2 1.7B Instruct is designed for efficient on-device execution. Possible uses include text rewriting, summarization, and function calling, all tasks that call for concise, adaptable language processing. The model’s compactness suggests it could serve scenarios where computational constraints are the priority, though further investigation is needed to confirm viability. Uses in other domains would require careful evaluation.

- Intended Uses: text rewriting

- Intended Uses: summarization

- Intended Uses: function calling
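
As an illustration of what function calling could look like on the application side, the sketch below parses a tool call from the model's text output and runs the matching Python function. The JSON shape (`{"name": ..., "arguments": {...}}`), the `get_weather` tool, and the `dispatch_tool_call` helper are all hypothetical; the model card does not specify an output format, so the prompt would need to request this shape explicitly.

```python
import json


# Hypothetical tool; the name and signature are illustrative only.
def get_weather(city: str) -> str:
    return f"Weather lookup for {city} is not implemented in this sketch."


TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output: str) -> str:
    """Run the tool named in a JSON tool call, or return the text unchanged.

    Assumes the model was prompted to reply with
    {"name": "<tool>", "arguments": {...}} when it wants a tool.
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output  # plain-text answer, no tool requested
    name = call.get("name") if isinstance(call, dict) else None
    if not isinstance(name, str) or name not in TOOLS:
        return model_output  # not a recognized tool call
    arguments = call.get("arguments", {})
    if not isinstance(arguments, dict):
        return f"Invalid arguments for {name}"
    try:
        return TOOLS[name](**arguments)
    except TypeError:
        # Small models may emit wrong or missing argument names.
        return f"Invalid arguments for {name}"
```

Returning the raw text for anything that is not a recognized call keeps ordinary answers working when the model decides no tool is needed.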

Possible Applications of SmolLM2 1.7B Instruct

Possible applications of SmolLM2 1.7B Instruct follow from its 1.7B parameters, 4k context length, and efficient on-device execution. Beyond text rewriting, summarization, and function calling, these could extend to interactive task automation or content adaptation, though further investigation is needed to confirm suitability. Environments with limited computational resources are the most natural fit, while other contexts would require careful evaluation. Each possible application should be assessed against its specific requirements and constraints.

- Possible Applications: text rewriting

- Possible Applications: summarization

- Possible Applications: function calling

Quantized Versions & Hardware Requirements of SmolLM2 1.7B Instruct

SmolLM2 1.7B Instruct with medium q4 quantization balances performance and precision. The stated requirement is a GPU with at least 8GB VRAM and a system with 32GB RAM. This version suits devices with moderate resources and runs tasks like text rewriting and summarization efficiently. The q4 version needs less memory than higher-precision variants, which makes it usable on a wider range of setups. Users should verify compatibility with their own hardware.

- Available quantizations: fp16, q2, q3, q4, q5, q6, q8
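
The effect of quantization on memory can be approximated with simple arithmetic: parameters times bits per weight, divided by 8 to get bytes. The sketch below applies that to the listed variants. The bits-per-weight values are nominal assumptions, since real quantized files mix precisions and carry metadata, and the result covers weights only, excluding the KV cache, activations, and runtime overhead. It should be read as a rough lower bound, not as a hardware requirement.

```python
PARAMETERS = 1.7e9  # parameter count from the model card

# Nominal bits per weight for each listed variant (assumed, not measured).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(quantization: str, parameters: float = PARAMETERS) -> float:
    """Approximate weight storage in GB (10^9 bytes) for a given quantization."""
    return parameters * BITS_PER_WEIGHT[quantization] / 8 / 1e9


for name in BITS_PER_WEIGHT:
    print(f"{name}: ~{weight_memory_gb(name):.2f} GB")
```

Under these assumptions the q4 weights come to roughly 0.85 GB and the fp16 weights to roughly 3.4 GB, which shows why lower-bit variants fit on more modest hardware.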

Conclusion

SmolLM2 1.7B Instruct is a compact language model with 1.7B parameters and a 4k context length, released under the Apache License 2.0 and optimized for efficient on-device execution. It balances performance and resource efficiency, which makes it suitable for tasks like text rewriting and summarization.

References

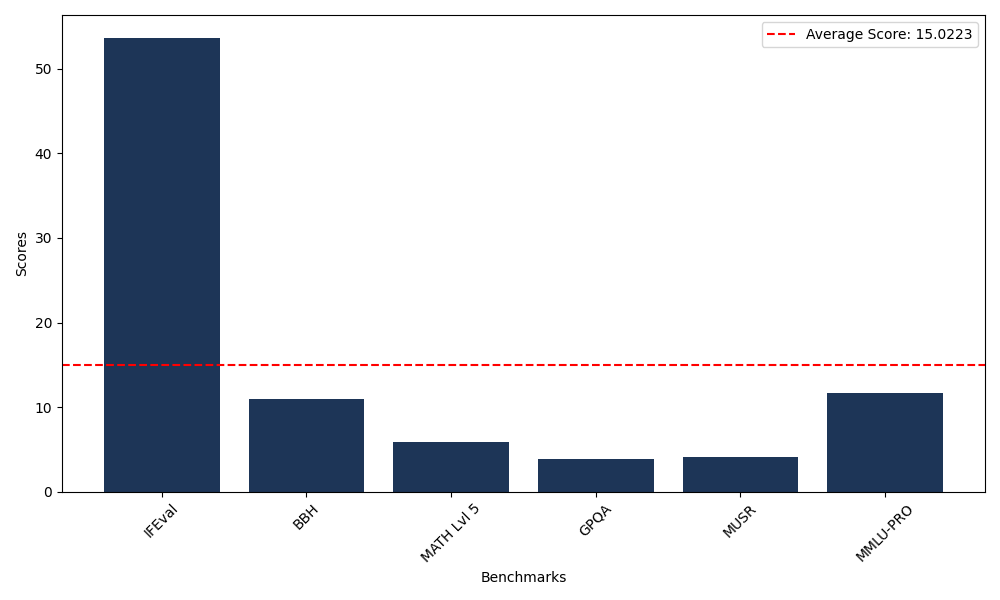

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 53.68 |
| Big Bench Hard (BBH) | 10.92 |
| Mathematics Aptitude Test of Heuristics, Level 5 (MATH Lvl 5) | 5.82 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.91 |
| Multistep Soft Reasoning (MuSR) | 4.10 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 11.71 |
