Solar 10.7B

Solar 10.7B is a large language model developed by Upstage, an AI company. With 10.7 billion parameters, it introduces a technique called Depth Up-Scaling (DUS) for scaling large language models efficiently. The model is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which permits non-commercial use only.

Description of Solar 10.7B

Solar 10.7B is a large language model with 10.7 billion parameters that Upstage reports as performing strongly for its size on natural language processing tasks. It is built with depth up-scaling (DUS): Mistral 7B weights are integrated into the upscaled layers, and the whole model is then pre-trained further. According to Upstage, this lets it outperform models with up to 30 billion parameters and even surpass Mixtral 8x7B. The instruction-tuned variant, SOLAR-10.7B-Instruct-v1.0, shows significant improvements from fine-tuning, which suggests the base model adapts well to diverse applications.
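
To make the depth up-scaling step concrete, the following is a minimal sketch of the layer arithmetic only. It assumes the configuration Upstage has described for DUS (a 32-layer base model, with 8 layers trimmed from each copy at the seam, giving 48 layers). Those numbers, the function name `depth_up_scale`, and the use of integer indices in place of real transformer layers are illustrative assumptions and are not stated in this article.

```python
def depth_up_scale(base_layers, m):
    """Return the layer order of a depth up-scaled model (sketch).

    base_layers: list of layer identifiers from the base model (n layers).
    m: number of layers trimmed from each copy where the two copies meet.
    """
    n = len(base_layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < n")
    first_copy = base_layers[: n - m]   # keep the first n - m layers
    second_copy = base_layers[m:]       # keep the last n - m layers
    return first_copy + second_copy     # 2 * (n - m) layers in total


# Assumed configuration: 32 base layers, 8 trimmed per copy.
base = list(range(32))
scaled = depth_up_scale(base, m=8)
print(len(scaled))      # 48
print(scaled[22:26])    # [22, 23, 8, 9]
```

At the seam, layer 23 is followed directly by a copy of layer 8. That discontinuity is one way to see why the upscaled model is pre-trained further before use.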

Parameters & Context Length of Solar 10.7B

With 10.7 billion parameters, Solar 10.7B sits in the mid-scale category, which balances performance against resource cost for tasks of moderate complexity. Its 4,000-token context length is in the short range: it suits short inputs and outputs but is less effective for very long texts, which must be truncated or split to fit.

- Name: Solar 10.7B

- Parameter_Size: 10.7b

- Context_Length: 4k

- Implications: Mid-scale parameters for balanced performance; short context length for basic tasks.
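
As a rough illustration of what the short context means in practice, the sketch below budgets tokens between prompt and generated output, and splits a longer token sequence into overlapping windows that fit. The 4,000-token limit is the figure quoted in this article; the helper names `max_prompt_tokens` and `split_into_windows` and the overlap value are hypothetical, and plain integers stand in for real token IDs.

```python
CONTEXT_LENGTH = 4000  # tokens, as quoted in this article


def max_prompt_tokens(max_new_tokens, context_length=CONTEXT_LENGTH):
    """Tokens left for the prompt after reserving room for generation."""
    if not 0 <= max_new_tokens <= context_length:
        raise ValueError("max_new_tokens must be within the context length")
    return context_length - max_new_tokens


def split_into_windows(tokens, window, overlap=0):
    """Split a token list into windows of at most `window` tokens.

    Consecutive windows share `overlap` tokens so that context is not
    cut abruptly at the boundary.
    """
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # the last window already reaches the end
    return chunks


budget = max_prompt_tokens(max_new_tokens=500)   # 3500 tokens for the prompt
document = list(range(10_000))                   # stand-in for 10,000 token IDs
chunks = split_into_windows(document, window=budget, overlap=100)
print(budget, len(chunks), [len(c) for c in chunks])
```

With these assumed values, a 10,000-token document needs three separate passes, which is the kind of overhead a longer-context model would avoid.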

Possible Intended Uses of Solar 10.7B

Solar 10.7B could be used for a range of natural language processing (NLP) tasks, such as text generation, translation, or summarization. It may also serve as a base for fine-tuning on specific domains, where additional training customizes its behavior. Chat is another potential use, though it would likely require fine-tuning to adapt the model to conversational scenarios. Each of these uses needs thorough testing against its specific requirements, and the 4,000-token context may limit the model’s suitability for very long texts.

- Intended_Uses: nlp tasks

- Intended_Uses: fine-tuning

- Intended_Uses: chatting (requires fine-tuning)

Possible Applications of Solar 10.7B

Possible applications of Solar 10.7B overlap with its intended uses: NLP tasks such as text generation, translation, or summarization; fine-tuning for specialized domains; and chat, which would likely require fine-tuning to meet conversational needs. It might also support content creation or data analysis, where its mid-scale parameter count offers a balance of efficiency and capability. None of these applications is guaranteed to work well, and each requires thorough evaluation against its specific goals.

- Possible applications: natural language processing tasks

- Possible applications: fine-tuning

- Possible applications: chatting (requires fine-tuning)

- Possible applications: content creation or data analysis

Quantized Versions & Hardware Requirements of Solar 10.7B

The medium q4 quantization of Solar 10.7B is estimated to need a GPU with at least 16GB of VRAM and a system with 32GB of RAM to run efficiently. This quantization trades some precision for a smaller memory footprint, which allows deployment on standard GPUs without excessive resource demands. These figures are estimates and may vary with workload and optimization. The available versions are:

- fp16

- q2

- q3

- q4

- q5

- q6

- q8
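
For a sense of how these versions differ in size, the sketch below estimates the memory taken by the weights alone as parameters × bits per weight ÷ 8. The nominal bit widths (for example, 4 bits for q4) are an assumption: real quantized files store extra scaling data, so actual sizes are somewhat larger, and the KV cache and activations need memory on top of the weights.

```python
PARAMS = 10.7e9  # parameter count quoted in this article

# Nominal bits per weight for each listed version (assumed, not measured).
NOMINAL_BITS = {
    "fp16": 16,
    "q8": 8,
    "q6": 6,
    "q5": 5,
    "q4": 4,
    "q3": 3,
    "q2": 2,
}


def weight_size_gb(bits_per_weight, params=PARAMS):
    """Approximate size of the weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


estimates = {name: round(weight_size_gb(bits), 2) for name, bits in NOMINAL_BITS.items()}
for name, size in estimates.items():
    print(f"{name:>4}: ~{size} GB of weights")
```

Under these assumptions the q4 weights come to roughly 5 GB against about 21 GB for fp16, which is consistent with q4 fitting on a 16GB GPU with room left for the KV cache and runtime overhead.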

Conclusion

Solar 10.7B is a 10.7-billion-parameter large language model with a 4,000-token context length. It uses depth up-scaling to scale efficiently and is reported to outperform some larger models. It balances performance and resource usage, making it suitable for tasks of moderate complexity and a candidate for fine-tuning and specialized applications.

References

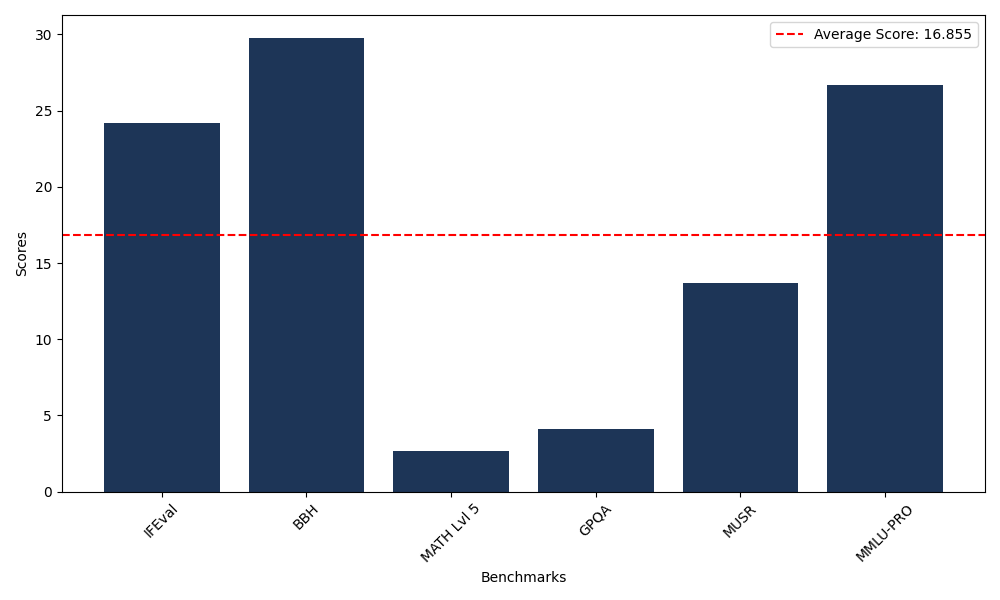

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 24.21 |
| BIG-Bench Hard (BBH) | 29.79 |
| MATH, Level 5 problems (MATH Lvl 5) | 2.64 |
| Graduate-Level Google-Proof Q&A (GPQA) | 4.14 |
| Multistep Soft Reasoning (MuSR) | 13.68 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 26.67 |