Solar 10.7B Instruct - Model Details

Solar 10.7B Instruct is a 10.7B-parameter large language model developed by Upstage. It is licensed under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license. The model introduces Depth Up-Scaling (DUS), a technique for scaling up language models efficiently.

Description of Solar 10.7B Instruct

Solar 10.7B Instruct is a 10.7B-parameter large language model developed by Upstage and designed for efficient scaling through Depth Up-Scaling (DUS). It combines architectural modifications with continued pretraining and, according to Upstage, outperforms models with up to 30B parameters. The model is fine-tuned for single-turn conversations and is well suited to tasks such as text generation, translation, and code generation. It is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which permits non-commercial use and keeps the model openly available for research and development.
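Depth Up-Scaling, as described in Upstage's SOLAR paper, starts from a 32-layer base model and makes two copies of it. The final 8 layers are dropped from one copy and the first 8 from the other, and the two are concatenated into a 48-layer model that then undergoes continued pretraining. The toy sketch below reproduces only the layer-stacking step, with placeholder strings standing in for real transformer layers; the function name `depth_up_scale` is hypothetical, and this is not Upstage's implementation.

```python
import copy


def depth_up_scale(layers, m):
    """Stack two copies of `layers`, trimming m layers at the seam (toy DUS sketch)."""
    n = len(layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < len(layers)")
    # First copy: keep layers 0 .. n-m-1 (drop the final m layers).
    first = list(layers[: n - m])
    # Second copy: drop the first m layers. Deep-copying mirrors the fact that
    # the duplicated layers get their own weights, which can diverge in training.
    second = [copy.deepcopy(layer) for layer in layers[m:]]
    return first + second


# Placeholder "layers" standing in for the 32 transformer blocks of the base model.
base = [f"layer_{i}" for i in range(32)]
scaled = depth_up_scale(base, m=8)

print(len(scaled))             # → 48
print(scaled[23], scaled[24])  # → layer_23 layer_8
```

The seam sits at index 24, where layer 23 of the first copy is followed by layer 8 of the second; the continued pretraining step is what lets the up-scaled model recover from this discontinuity.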

Parameters & Context Length of Solar 10.7B Instruct

With 10.7B parameters, Solar 10.7B Instruct sits in the mid-scale range of open-source LLMs, balancing capability against resource requirements for tasks of moderate complexity. This size is enough to handle a broad range of NLP tasks without excessive computational cost. Its 4K context length suits short to moderately long inputs, such as focused conversations, but limits its ability to process very long documents in a single pass. Overall, the design prioritizes practicality over sheer scale.

- Name: Solar 10.7B Instruct

- Parameter Size: 10.7B

- Context Length: 4K

- Implications: Mid-scale parameters for balanced performance; 4K context for efficient short-to-moderate tasks.
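To make the 4K limit concrete, the sketch below trims a tokenized prompt so that the prompt and the generated tokens together fit in the context window. It assumes "4K" means 4,096 tokens and takes token IDs as a plain list; in practice the IDs would come from the model's own tokenizer, and the helper `fit_prompt` is hypothetical.

```python
CONTEXT_LENGTH = 4096  # assumption: "4K" = 4,096 tokens


def fit_prompt(prompt_tokens, max_new_tokens, context_length=CONTEXT_LENGTH):
    """Return the most recent prompt tokens that leave room for max_new_tokens."""
    if not 0 <= max_new_tokens < context_length:
        raise ValueError("max_new_tokens must be in [0, context_length)")
    budget = context_length - max_new_tokens
    if len(prompt_tokens) <= budget:
        return list(prompt_tokens)
    # Drop the oldest tokens first so the end of the prompt is preserved.
    return list(prompt_tokens[-budget:])


tokens = list(range(5000))          # stand-in for real token IDs
fitted = fit_prompt(tokens, max_new_tokens=512)
print(len(fitted), fitted[0])       # → 3584 1416
```

Dropping the oldest tokens is the simplest policy, but it can discard an instruction at the start of the prompt; for conversations, dropping whole earlier turns while keeping the current request intact is usually the safer choice.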

Possible Intended Uses of Solar 10.7B Instruct

With 10.7B parameters and a 4K context length, Solar 10.7B Instruct is a candidate for text generation, translation, and code generation. These are possible applications rather than confirmed ones; examples include creating dynamic content, supporting cross-language communication, and assisting with programming tasks. How well the model performs in each case will depend on implementation details, training data, and user requirements, so any such use should be tested thoroughly before deployment.

- text generation

- translation

- code generation
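Because the model is fine-tuned for single-turn conversations, each task above is typically sent as a single user turn. The sketch below assumes the `### User:` / `### Assistant:` prompt layout shown on Upstage's Hugging Face model card; treat that layout as an assumption and confirm it against the tokenizer's chat template (for example via `apply_chat_template` in Hugging Face Transformers) before relying on it. The helper name `build_prompt` is hypothetical, and adding the beginning-of-sequence token is left to the tokenizer.

```python
def build_prompt(user_message: str) -> str:
    """Format one user turn in the assumed SOLAR instruct layout."""
    # Assumed layout: "### User:\n<message>\n\n### Assistant:\n".
    # The tokenizer is expected to prepend the BOS token itself.
    return f"### User:\n{user_message}\n\n### Assistant:\n"


tasks = [
    "Write a two-sentence product description for a reusable water bottle.",  # text generation
    "Translate into German: 'The meeting has been moved to Friday.'",          # translation
    "Write a Python function that checks whether a string is a palindrome.",   # code generation
]

for task in tasks:
    print(build_prompt(task))
```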

Possible Applications of Solar 10.7B Instruct

With 10.7B parameters and a 4K context length, Solar 10.7B Instruct could support applications such as dynamic text generation, multilingual translation, code development, and creative content creation. Concrete examples might include automated documentation, language-learning tools, or collaborative coding environments, which could draw on the model's language fluency and reasoning ability. None of these applications is validated here, so each one should be evaluated rigorously against its specific requirements before implementation.

- text generation

- translation

- code generation

- creative content creation
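One way to prototype these applications locally is through Ollama's REST API. The sketch below assumes an Ollama server at its default address (`http://localhost:11434`) and a model pulled under the tag `solar`; the tag is an assumption, so check `ollama list` for the actual name. It uses only the Python standard library and calls the `/api/generate` endpoint with streaming disabled.

```python
import json
import urllib.request

# Assumptions: Ollama's default local address and a model pulled as "solar".
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "solar"  # hypothetical tag; check `ollama list`


def make_request(prompt, model=MODEL_TAG, url=OLLAMA_URL):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model=MODEL_TAG):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(make_request(prompt, model)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With `/api/generate`, Ollama applies the model's prompt template on the server side, so plain text can be passed as the prompt. For example, `generate("Write a docstring for a function that reverses a string.")` would exercise the automated-documentation use case.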

Quantized Versions & Hardware Requirements of Solar 10.7B Instruct

In its medium q4 version, Solar 10.7B Instruct requires a GPU with at least 20GB of VRAM (e.g., an RTX 3090) and at least 32GB of system memory to run efficiently while balancing precision and performance. This configuration should support smooth execution of tasks such as text generation and translation, although actual requirements vary with workload. Prospective users should check their GPU's VRAM and system memory to confirm compatibility.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
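A back-of-the-envelope estimate shows how quantization changes memory needs: weight storage is roughly parameters × bits per weight ÷ 8 bytes. The sketch uses nominal bit widths for each listed version. Actual quantized files are somewhat larger because they also store scaling factors, and running the model adds the KV cache, activations, and framework overhead, so these figures are lower bounds for the weights alone rather than a replacement for the hardware requirements stated above.

```python
PARAMS = 10.7e9  # parameter count stated above

# Nominal bits per weight for each listed version (real formats add some overhead).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(params, bits_per_weight):
    """Approximate storage for the weights alone, in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_memory_gb(PARAMS, bits):.1f} GB of weights")
```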

Conclusion

Solar 10.7B Instruct is a large language model with 10.7B parameters and a 4K context length, designed for efficient scaling through Depth Up-Scaling and optimized for tasks like text generation, translation, and code generation. It operates under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, making it accessible for non-commercial research and development.

References

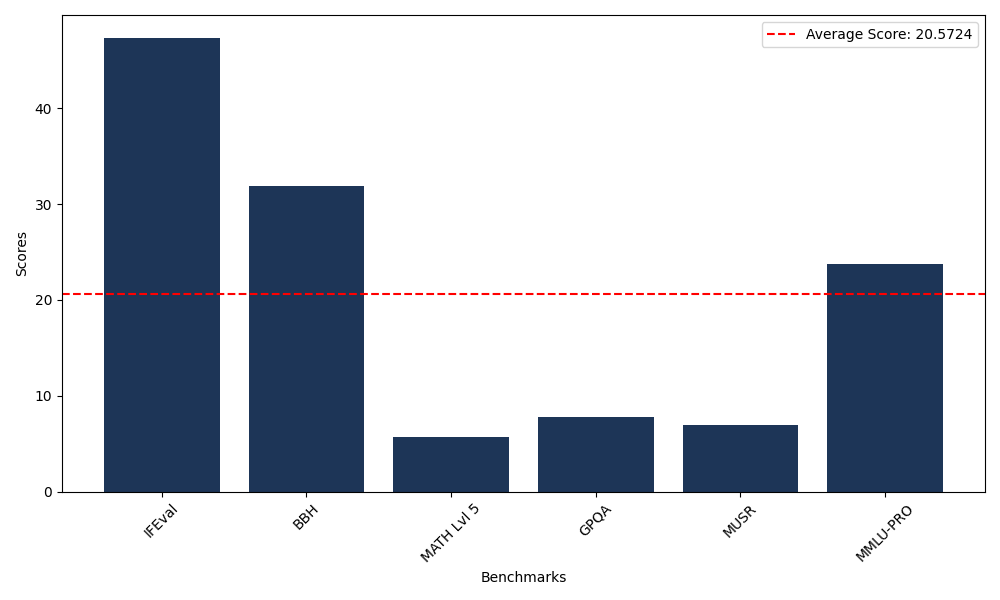

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 47.37 |
| BIG-Bench Hard (BBH) | 31.87 |
| MATH, Level 5 problems (MATH Lvl 5) | 5.66 |
| Graduate-Level Google-Proof Q&A (GPQA) | 7.83 |
| Multistep Soft Reasoning (MuSR) | 6.94 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 23.76 |
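For a single summary figure, the six benchmark scores can be averaged. The sketch below computes a plain unweighted mean. It is not necessarily how any leaderboard aggregates these benchmarks, and the short dictionary keys are only labels.

```python
# Scores copied from the benchmark table above.
SCORES = {
    "IFEval": 47.37,
    "BBH": 31.87,
    "MATH Lvl 5": 5.66,
    "GPQA": 7.83,
    "MuSR": 6.94,
    "MMLU-Pro": 23.76,
}


def unweighted_mean(scores):
    """Average of the score values, each benchmark weighted equally."""
    return sum(scores.values()) / len(scores)


print(f"Unweighted mean: {unweighted_mean(SCORES):.2f}")  # → Unweighted mean: 20.57
```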