Solar Pro 22B Instruct - Model Details

Solar Pro 22B Instruct is a large language model developed by Upstage. With 22 billion parameters, it is designed to run efficiently on a single GPU. The model is released under the MIT License, which permits use, modification, and redistribution by developers and researchers. It is instruction-tuned, which targets it at practical, prompt-driven tasks.

Description of Solar Pro 22B Instruct

Solar Pro Preview is a large language model with 22 billion parameters designed to run efficiently on a single GPU. It offers performance that rivals models with significantly larger parameter counts, such as Llama 3.1 with 70 billion parameters. It uses an enhanced depth up-scaling method to scale a Phi-3-medium model (14 billion parameters) up to 22 billion parameters and is optimized for GPUs with 80GB of VRAM. Improvements to the training strategy and dataset have led to strong results on benchmarks such as MMLU-Pro and IFEval. As a pre-release version, it has limited language coverage and a maximum context length of 4,000 tokens; the official release, planned for November 2024, is expected to add expanded language support and longer context windows.
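The depth up-scaling idea mentioned above can be illustrated on a toy list of layers. This is a minimal sketch of the original depth up-scaling recipe published for SOLAR 10.7B (duplicate the layer stack, drop the last m layers from one copy and the first m layers from the other, then concatenate). The enhanced variant used for Solar Pro is not described here, and the function name `depth_up_scale` and the layer counts (32 layers, m = 8) are illustrative assumptions borrowed from that earlier recipe, not Solar Pro's actual configuration.

```python
def depth_up_scale(layers, m):
    """Toy version of the original depth up-scaling recipe.

    Takes a list standing in for a model's layer stack and returns a deeper
    stack: the first n - m layers followed by the last n - m layers.
    """
    n = len(layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < number of layers")
    top = layers[: n - m]   # first copy, with its last m layers removed
    bottom = layers[m:]     # second copy, with its first m layers removed
    return top + bottom


# Assumption: a 32-layer base and m = 8, the figures from the SOLAR 10.7B recipe.
base = list(range(32))
scaled = depth_up_scale(base, 8)
print(len(scaled))  # 48
```

In a real model the concatenated stack is then further pretrained so the duplicated layers can specialize; this sketch only shows the layer bookkeeping.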

Parameters & Context Length of Solar Pro 22B Instruct

With 22 billion parameters, Solar Pro 22B Instruct falls in the large model category (20B to 70B): capable on complex tasks, but demanding in compute and memory. Its 4k context length sits in the short context range (up to 4K tokens), so it suits concise prompts and responses but cannot take in long documents in a single pass.

- Name: Solar Pro 22B Instruct

- Parameter Size: 22b (Large Models: 20B to 70B) - Implications: Powerful for complex tasks, resource-intensive.

- Context Length: 4k (Short Contexts: up to 4K Tokens) - Implications: Suitable for short tasks, limited for long texts.
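One practical consequence of the short context is that longer inputs have to be split before they are sent to the model. The following is a minimal sketch of that idea, under stated assumptions: the limit is taken as 4,000 tokens, the 512 tokens reserved for the reply is an arbitrary illustrative value, and the input is already a list of tokens (a real deployment would use the model's own tokenizer to produce it). The name `split_for_context` is hypothetical.

```python
CONTEXT_LENGTH = 4000  # assumption: "4k" taken as 4,000 tokens


def split_for_context(tokens, reserve_for_output=512, context_length=CONTEXT_LENGTH):
    """Split a token list into chunks that leave room for the model's reply."""
    budget = context_length - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than context_length")
    # Consecutive, non-overlapping slices of at most `budget` tokens each.
    return [tokens[i : i + budget] for i in range(0, len(tokens), budget)]


# Stand-in for a tokenized document of 10,000 tokens.
document = ["tok"] * 10_000
chunks = split_for_context(document)
print([len(c) for c in chunks])  # [3488, 3488, 3024]
```

Non-overlapping chunks are the simplest choice; tasks such as summarization may work better with some overlap between chunks, at the cost of more calls.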

Possible Intended Uses of Solar Pro 22B Instruct

Solar Pro 22B Instruct is a 22 billion parameter model designed for tasks that require advanced language understanding and generation. Its 4k context length is enough for short to moderately complex queries. Possible uses include answering questions with detailed explanations, generating creative or technical text, and assisting with drafting, summarizing, or analyzing information. These could extend to educational support, content creation, or interactive problem-solving, but further research is needed to confirm how well the model performs in each. Its suitability for a specific task would depend on factors like data quality, user needs, and computational constraints.

- Name: Solar Pro 22B Instruct

- Purpose: Answering questions, generating text, assisting with tasks

- Parameter Size: 22b

- Context Length: 4k

Possible Applications of Solar Pro 22B Instruct

Solar Pro 22B Instruct is a large language model with 22 billion parameters and a 4k context length. Possible applications include generating detailed text, answering complex questions, assisting with task automation, and supporting interactive learning. In practice this could mean creating content for creative projects, explaining technical topics, streamlining repetitive tasks, or improving user interactions through natural language. The model's capacity to handle nuanced queries and produce coherent responses supports these uses, but each possible application must be evaluated and tested against its specific requirements before deployment.

- Name: Solar Pro 22B Instruct

- Possible Applications: answering questions, generating text, assisting with tasks, supporting interactive learning

- Parameter Size: 22b

- Context Length: 4k

Quantized Versions & Hardware Requirements of Solar Pro 22B Instruct

The medium-precision q4 version of Solar Pro 22B Instruct balances precision and performance and calls for a GPU with at least 16GB of VRAM. This puts it within reach of systems with moderate hardware, though further testing is needed to confirm compatibility on a given setup. The model is available as fp16 and as q2, q3, q4, q5, q6, and q8 quantizations, each trading off speed, memory usage, and accuracy.

- Name: Solar Pro 22B Instruct

- Quantized Versions: fp16, q2, q3, q4, q5, q6, q8

- Parameter Size: 22b

- Context Length: 4k
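The memory side of these trade-offs can be estimated with simple arithmetic: the weights take roughly (parameters × bits per weight ÷ 8) bytes. The sketch below applies that formula to each listed version. It assumes the nominal bit width in each label (for example, 4 bits for q4); real quantization formats store extra scaling data and use somewhat more, and the estimate excludes the KV cache and activations. The names `NOMINAL_BITS` and `weight_gb` are illustrative.

```python
# Rough weight-memory estimate per version of a 22 billion parameter model.
PARAMS = 22e9  # 22 billion parameters

# Assumption: nominal bits per weight, read directly from each label.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(bits_per_weight, params=PARAMS):
    """Approximate size of the weights alone, in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_gb(bits):.1f} GB of weights")
```

Under these assumptions the q4 weights come to about 11 GB, which is consistent with the 16GB VRAM figure once working memory is added, and the fp16 weights come to about 44 GB, which fits on a single 80GB GPU.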

Conclusion

Solar Pro 22B Instruct is a large language model with 22 billion parameters and a 4k context length, optimized for single-GPU operation and built with an enhanced depth up-scaling method. It shows strong results on benchmarks like MMLU-Pro and IFEval, but it remains a pre-release version with limited language coverage and context length. The official release is planned for November 2024.

References

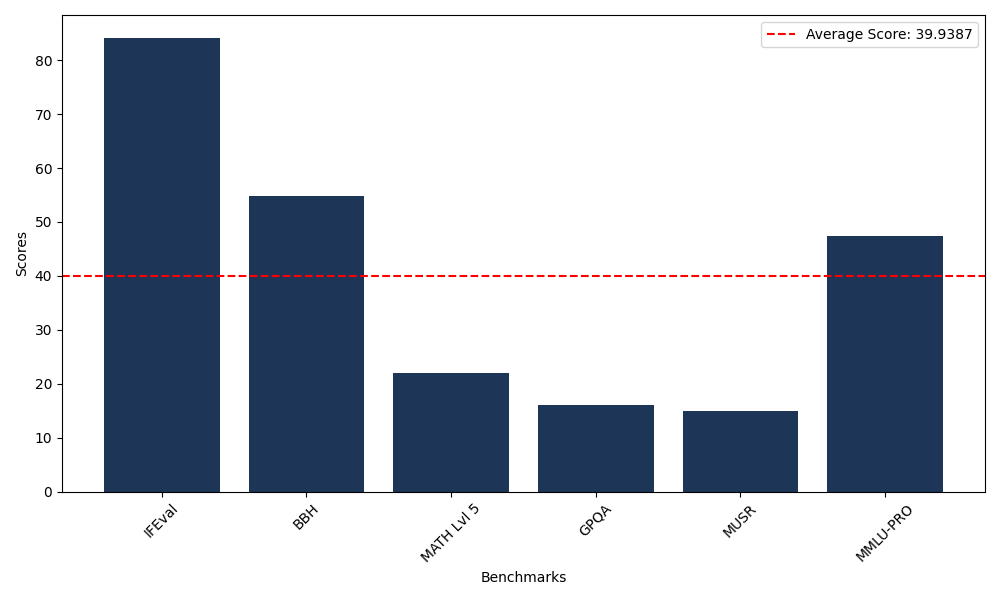

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 84.16 |
| Big Bench Hard (BBH) | 54.82 |
| Mathematical Reasoning Test (MATH Lvl 5) | 22.05 |
| Graduate-Level Google-Proof Q&A (GPQA) | 16.11 |
| Multistep Soft Reasoning (MuSR) | 15.01 |
| Massive Multitask Language Understanding (MMLU-Pro) | 47.48 |
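For a single summary figure, the six scores can be averaged. The snippet below is a minimal sketch that computes an unweighted mean; treating all benchmarks as equally weighted is an assumption made here for illustration, and the names `SCORES` and `mean_score` are hypothetical.

```python
# Scores copied from the benchmark table.
SCORES = {
    "IFEval": 84.16,
    "BBH": 54.82,
    "MATH Lvl 5": 22.05,
    "GPQA": 16.11,
    "MuSR": 15.01,
    "MMLU-Pro": 47.48,
}


def mean_score(scores):
    """Unweighted arithmetic mean of the benchmark scores."""
    return sum(scores.values()) / len(scores)


print(round(mean_score(SCORES), 2))  # 39.94
```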
