Stable Beluga 70B - Model Details

Stable Beluga 70B is a large language model developed by Stability AI. With 70B parameters, it is designed for complex tasks that call for stronger reasoning. It is released under the Stable Beluga Non-Commercial Community License Agreement, which restricts use to non-commercial purposes. The model is built on Llama 2 and emphasizes performance on logical and analytical tasks.

Description of Stable Beluga 70B

Stable Beluga 2 is a 70B-parameter auto-regressive language model developed by Stability AI using the Hugging Face Transformers library. It is a fine-tuned version of Llama 2, trained on an Orca-style dataset to improve reasoning and analytical capabilities. The model is distributed under the Stable Beluga Non-Commercial Community License Agreement, which restricts its use to non-commercial purposes.

Parameters & Context Length of Stable Beluga 70B

Stable Beluga 70B is a 70B-parameter model with a 4k context length, which places it among very large models with short contexts. The parameter count supports advanced reasoning and complex task handling but requires significant computational resources, so the model suits specialized deployments rather than lightweight setups. A 4k context is enough for moderately long texts but limits the model on extended documents or sustained reasoning over large inputs. The trade-off is depth of capability in exchange for a high hardware cost and a bounded input size.

- Parameter Size: 70B – Very Large Models (70B+)

- Context Length: 4k – Short Contexts (up to 4K Tokens)
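The context limit above can be made concrete with a small budgeting sketch. It assumes that "4k" means 4096 tokens (the Llama 2 window) and that the prompt and the generated reply share that window. The function names are hypothetical, and the token IDs are plain integers rather than the output of any particular tokenizer.

```python
CONTEXT_LENGTH = 4096  # assumption: "4k" means 4096 tokens, as in Llama 2


def max_prompt_tokens(max_new_tokens, context_length=CONTEXT_LENGTH):
    """Return how many tokens remain for the prompt after reserving the reply."""
    if max_new_tokens < 0 or max_new_tokens > context_length:
        raise ValueError("max_new_tokens must be between 0 and context_length")
    return context_length - max_new_tokens


def truncate_to_budget(token_ids, max_new_tokens, context_length=CONTEXT_LENGTH):
    """Keep only the most recent tokens so that prompt plus reply fit the window."""
    budget = max_prompt_tokens(max_new_tokens, context_length)
    if budget == 0:
        return []
    # Negative slicing keeps the tail; a short input is returned unchanged.
    return token_ids[-budget:]


if __name__ == "__main__":
    long_prompt = list(range(5000))  # stand-in for 5000 token IDs
    kept = truncate_to_budget(long_prompt, max_new_tokens=512)
    print(len(kept), "prompt tokens kept out of", len(long_prompt))
```

Dropping the oldest tokens is only one possible policy. Summarizing or chunking the input may work better for long documents, which is where a short context is most limiting.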

Possible Intended Uses of Stable Beluga 70B

Stable Beluga 70B is a large language model with 70B parameters and a 4k context length, designed for tasks that require advanced reasoning and text generation. Possible uses include answering questions, generating text, and providing coding assistance, and may extend to creative writing, technical documentation, or algorithmic problem-solving. The model's scale and training data suggest it can support complex tasks, but its effectiveness in a given domain depends on data quality, user needs, and technical constraints, so any of these uses needs rigorous testing before deployment. The non-commercial license also limits who can adopt the model for them.

- answering questions

- generating text

- coding assistance

Possible Applications of Stable Beluga 70B

Stable Beluga 70B is a large-scale language model with 70B parameters and a 4k context length. Possible applications include answering complex questions, generating creative or technical text, and assisting with coding tasks. It might also support research workflows, automate content creation, or enhance educational tools, and in non-sensitive domains it could be applied to collaborative writing or data analysis. Its usefulness for deep reasoning or structured output remains to be explored, and each implementation should be tested thoroughly against its specific goals, since results depend on context and requirements.

- answering questions

- generating text

- coding assistance

Quantized Versions & Hardware Requirements of Stable Beluga 70B

At medium (q4) quantization, Stable Beluga 70B still requires substantial hardware: VRAM needs may exceed 48GB for optimal performance, depending on implementation and workload. This version balances precision against efficiency but may still call for a high-end GPU or several GPUs on large workloads. At least 32GB of system memory and adequate cooling are also likely prerequisites.

- fp16, q2, q3, q4, q5, q6, q8
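A rough, weights-only estimate shows why even the quantized versions are demanding. The sketch below assumes each label maps to a nominal number of bits per weight (q4 as 4 bits, and so on) and that the model has exactly 70 billion parameters. Real quantized formats store extra per-block metadata, and the KV cache, activations, and runtime overhead are not counted, so actual requirements will be higher than these figures.

```python
N_PARAMS = 70e9  # assumption: exactly 70 billion parameters

# Assumption: nominal bits per weight for each listed version.
NOMINAL_BITS = {
    "fp16": 16,
    "q8": 8,
    "q6": 6,
    "q5": 5,
    "q4": 4,
    "q3": 3,
    "q2": 2,
}


def weight_memory_gb(n_params, bits_per_weight):
    """Return the size of the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name}: ~{weight_memory_gb(N_PARAMS, bits):.1f} GB for weights")
```

Under these assumptions the q4 weights alone come to about 35 GB, which is consistent with the text's figure of more than 48GB once cache and overhead are included.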

Conclusion

Stable Beluga 70B is a 70B-parameter large language model developed by Stability AI, designed for complex reasoning tasks and built on Llama 2. Its non-commercial license makes it available for research and other non-commercial work, and deploying it requires significant computational resources.

References

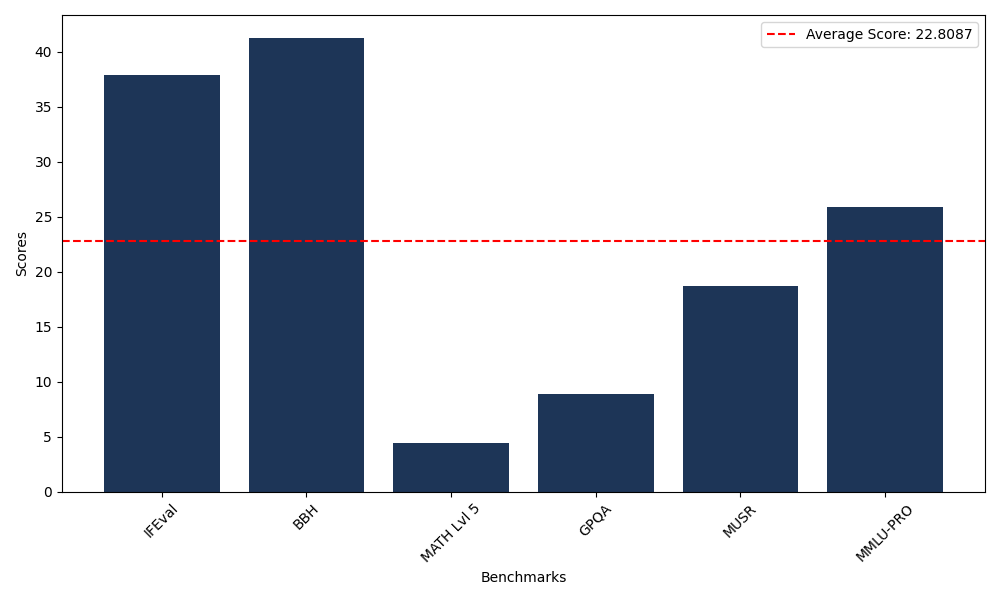

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 37.87 |
| BIG-Bench Hard (BBH) | 41.26 |
| MATH, Level 5 problems (MATH Lvl 5) | 4.38 |
| Graduate-Level Google-Proof Q&A (GPQA) | 8.84 |
| Multistep Soft Reasoning (MuSR) | 18.65 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 25.85 |
