StableLM Zephyr 3B - Model Details

StableLM Zephyr 3B is a language model developed by Stability AI. At 3 billion parameters, it is compact enough to target efficient performance on edge devices. The model is released under the Stability AI Non-Commercial Research Community License Agreement, which lets researchers and developers use it for non-commercial purposes subject to the license's usage guidelines. Its small footprint is aimed at accessibility and adaptability in resource-constrained environments.

Description of StableLM Zephyr 3B

StableLM Zephyr 3B is a 3 billion parameter instruction-tuned language model inspired by HuggingFaceH4's Zephyr 7B training pipeline. It was trained on a mix of publicly available and synthetic datasets using Direct Preference Optimization (DPO), which tunes the model directly on pairs of preferred and rejected responses to improve alignment with user preferences. The model scored 6.64 on MT-Bench and reached a 76.00% win rate on the Alpaca benchmark (AlpacaEval). It is intended as a foundational base model for application-specific fine-tuning.
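To make the DPO step mentioned above more concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It is not the training code used for this model: the function name `dpo_loss` is hypothetical, the inputs are assumed to be summed sequence log-probabilities from the policy and from a frozen reference model, and `beta=0.1` is only an illustrative default.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of sequence log-probabilities."""
    # How much more the policy favors each response than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # loss = -log(sigmoid(margin)) = log(1 + exp(-margin)), computed stably.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

if __name__ == "__main__":
    # Policy identical to the reference: the margin is zero.
    print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
    # Policy prefers the chosen response more than the reference does.
    print(dpo_loss(-5.0, -10.0, -10.0, -10.0))
```

The loss falls as the policy raises the chosen response's likelihood relative to the rejected one, measured against the reference model, which is why no separate reward model is needed.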

Parameters & Context Length of StableLM Zephyr 3B

StableLM Zephyr 3B has 3B parameters and a 4k context length. The parameter count places it in the small-model category: inference is fast and resource demands are low, which suits edge devices and applications that value speed over maximum capability. The 4k context covers short to moderate inputs, but it can limit performance on long documents compared with models that offer longer contexts, because the prompt and the generated output must share the same window.

- Name: StableLM Zephyr 3B

- Parameter Size: 3B

- Context Length: 4k

- Implications: Efficient for edge devices, limited to short/medium tasks, resource-friendly for simple applications.
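The practical effect of a fixed context length can be sketched with some simple token bookkeeping. The example below assumes "4k" means 4096 tokens and that the prompt has already been tokenized into a list of IDs; the helper names are hypothetical and not part of any library.

```python
CONTEXT_LENGTH = 4096  # assumption: "4k" is taken to mean 4096 tokens

def max_new_tokens(prompt_tokens, context_length=CONTEXT_LENGTH):
    """Tokens left for generation once the prompt is in the window."""
    return max(0, context_length - prompt_tokens)

def truncate_left(token_ids, reserve_for_output, context_length=CONTEXT_LENGTH):
    """Keep the most recent tokens so prompt plus reserved output fits."""
    budget = context_length - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than the context length")
    # Slicing from the end keeps the whole list when it is already short enough.
    return token_ids[-budget:]

if __name__ == "__main__":
    print(max_new_tokens(3000))                  # room left after a 3000-token prompt
    long_prompt = list(range(5000))              # stand-in for 5000 token IDs
    print(len(truncate_left(long_prompt, 512)))  # prompt size after truncation
```

Dropping the oldest tokens is only one possible policy; summarizing or chunking the input are alternatives when early context matters.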

Possible Intended Uses of StableLM Zephyr 3B

StableLM Zephyr 3B is designed for efficient performance on edge devices, and its 4k context length supports moderate-length tasks. Possible uses include text generation for creative writing or content creation, code generation to help developers draft or debug code, and language understanding tasks such as summarization or translation. These uses could benefit from the model’s lightweight design, but each should be evaluated before deployment. The model targets general-purpose tasks; its effectiveness in specialized domains has not been validated and will depend on input complexity and application requirements.

- text generation

- code generation

- language understanding

Possible Applications of StableLM Zephyr 3B

Possible applications of StableLM Zephyr 3B include text generation for creative or educational content, code generation to support software development workflows, language understanding for multilingual tasks, and conversational interfaces for general-purpose interactions. These applications could draw on the model’s efficiency and adaptability, but their viability has yet to be confirmed. The model is best matched to lightweight, non-critical tasks, and results in any specific scenario depend on rigorous testing, the context, input complexity, and user requirements.

- text generation

- code generation

- language understanding

- conversational interfaces

Quantized Versions & Hardware Requirements of StableLM Zephyr 3B

For the medium q4 version of StableLM Zephyr 3B, the recommended setup is a GPU with at least 12GB of VRAM and a system with 32GB of RAM, which keeps it within reach of mid-range hardware. Quantization stores the weights at lower precision, trading some accuracy for a smaller memory footprint and faster inference. Actual performance depends on the workload, so users should verify compatibility with their own hardware. Adequate cooling and a stable power supply also help under sustained load. The available quantizations are:

- q2, q3, q32, q4, q5, q6, q8
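As a rough guide to how the quantization levels differ, the sketch below estimates weight storage as parameters times bits per weight. It rests on several assumptions: a nominal 3,000,000,000 parameters, and a nominal bit width taken from each tag name (real quantization formats store extra scale data, so files are somewhat larger). The `q32` tag is left out because the source does not define it, and the estimate covers weights only, not the KV cache, activations, or runtime overhead. The hardware figures quoted above come from the source and are not derived from this estimate.

```python
N_PARAMS = 3_000_000_000  # assumption: nominal "3B"; the exact count may differ

# Assumption: nominal bits per weight inferred from the tag names.
NOMINAL_BITS = {"q2": 2, "q3": 3, "q4": 4, "q5": 5, "q6": 6, "q8": 8}

def weight_bytes(n_params, bits_per_weight):
    """Bytes needed to store the weights alone at a given bit width."""
    return n_params * bits_per_weight / 8

def estimate_table(n_params=N_PARAMS, bits_by_tag=NOMINAL_BITS):
    """Map each quantization tag to its estimated weight size in GiB."""
    return {tag: weight_bytes(n_params, bits) / 2**30
            for tag, bits in bits_by_tag.items()}

if __name__ == "__main__":
    for tag, gib in estimate_table().items():
        print(f"{tag}: ~{gib:.2f} GiB of weights")
```

Lower bit widths shrink the footprint roughly linearly, at the cost of precision.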

Conclusion

StableLM Zephyr 3B is a 3B parameter language model with a 4k context length, optimized for efficient performance on edge devices and suited to lightweight applications. It serves as a foundational base model for tasks such as text generation, code generation, and language understanding, and can be fine-tuned for specific use cases.

References

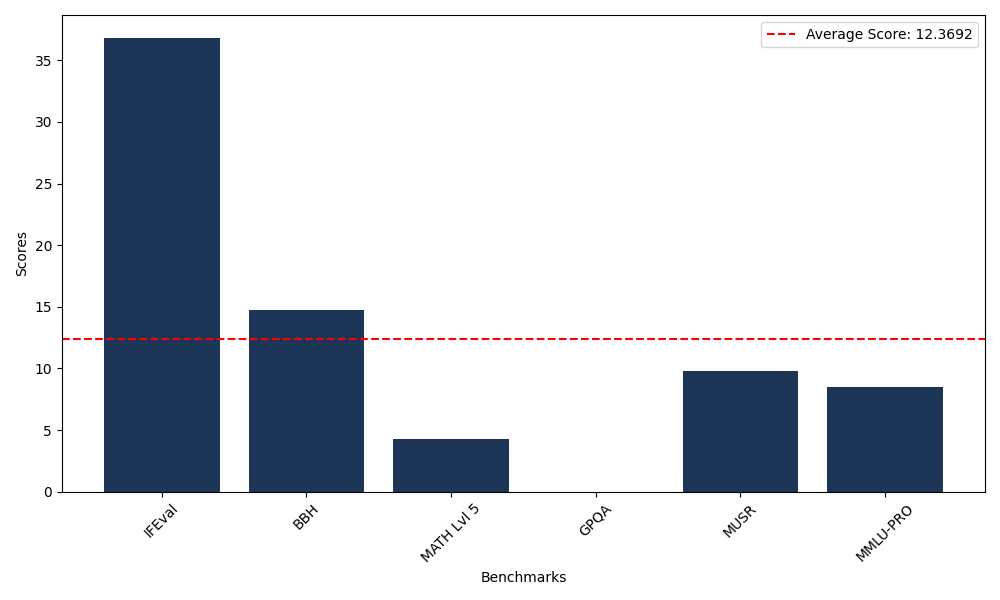

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 36.83 |
| Big Bench Hard (BBH) | 14.76 |
| Mathematical Reasoning Test (MATH Lvl 5) | 4.31 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 9.79 |
| Massive Multitask Language Understanding (MMLU-PRO) | 8.53 |
