Stablelm2 12B - Model Details

Stablelm2 12B is a large language model developed by Stability AI. It has roughly 12 billion parameters and is released under the Stability AI Non-Commercial Research Community License Agreement (STABILITY-AI-NCRCLA), which permits non-commercial and research use. The model is designed to offer multilingual capabilities across a range of languages and applications.

Description of Stablelm2 12B

Stablelm2 12B is a 12.1 billion parameter decoder-only language model pre-trained for two epochs on 2 trillion tokens of diverse multilingual and code datasets. Developed by Stability AI, it serves as a base model for application-specific fine-tuning. The model uses Rotary Position Embeddings and FlashAttention-2 to improve efficiency and performance. Its training data includes Falcon RefinedWeb, RedPajama-Data, The Pile, and StarCoder, covering both natural language and programming tasks.
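To make the Rotary Position Embeddings mentioned above more concrete, here is a minimal, generic sketch of the idea in plain Python. It is not Stability AI's implementation: the function names `apply_rope` and `dot`, the pairing of adjacent vector elements, and the base of 10000 are assumptions chosen for illustration. The sketch rotates each pair of values by an angle proportional to the token position, so the dot product of a rotated query and key depends only on the distance between their positions.

```python
import math


def apply_rope(vec, position, base=10000.0):
    """Rotate adjacent pairs of `vec` by position-dependent angles.

    Hypothetical helper for illustration; real models may pair
    dimensions differently or rotate only part of each head.
    """
    if len(vec) % 2 != 0:
        raise ValueError("vector length must be even")
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        # Lower-indexed pairs rotate faster than higher-indexed pairs.
        theta = position * base ** (-i / dim)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return out


def dot(a, b):
    """Plain dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


query = [1.0, 2.0, 3.0, 4.0]
key = [0.5, -1.0, 2.0, 0.25]

# Both pairs of positions are 2 apart, so the two scores should agree
# up to floating-point error.
score_near = dot(apply_rope(query, 5), apply_rope(key, 3))
score_far = dot(apply_rope(query, 12), apply_rope(key, 10))
print(score_near, score_far)
```

The two printed scores match up to rounding, which is the relative-position property that makes this encoding attractive for attention layers.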

Parameters & Context Length of Stablelm2 12B

Stablelm2 12B has roughly 12 billion parameters, placing it in the mid-scale range of openly available LLMs and offering a balance between performance and resource use for tasks of moderate complexity. Its 4k-token context length is short: it suits concise tasks but limits how much text the model can process at once. This configuration favors efficiency over very long sequences, in line with the model's role as a base for specialized applications.

- Parameter Size: 12b

- Context Length: 4k
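One practical consequence of the short context is that longer inputs have to be split before they reach the model. The sketch below shows one simple way to do that, assuming "4k" means 4096 tokens and that the input has already been converted to a list of token IDs by some tokenizer. The function name `split_into_windows` and its `overlap` parameter are hypothetical, not part of any Stablelm2 tooling.

```python
def split_into_windows(token_ids, max_tokens=4096, overlap=0):
    """Split a token sequence into windows of at most `max_tokens` tokens.

    `overlap` tokens are repeated at the start of each following window
    so that context is not cut off abruptly at a boundary.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")

    step = max_tokens - overlap
    windows = []
    for start in range(0, len(token_ids), step):
        windows.append(token_ids[start:start + max_tokens])
        # Stop once a window has reached the end of the input.
        if start + max_tokens >= len(token_ids):
            break
    return windows


# Stand-in for real token IDs produced by a tokenizer.
tokens = list(range(10_000))
windows = split_into_windows(tokens, max_tokens=4096, overlap=96)
print([len(w) for w in windows])
```

In practice the window size would usually be set below the full context length, since the tokens the model generates also count toward the limit.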

Possible Intended Uses of Stablelm2 12B

Stablelm2 12B could support a range of applications, including general-purpose text generation, code generation and analysis, and fine-tuning for domain-specific tasks. Its 12 billion parameters and 4k context length suggest it may suit scenarios of moderate complexity where efficiency matters. As a base model intended for customization, it could be adapted to tasks such as content creation, programming assistance, or specialized data processing, but each use would need evaluation against specific requirements before its effectiveness can be assumed.

- text generation for general-purpose applications

- code generation and analysis

- fine-tuning for domain-specific tasks

Possible Applications of Stablelm2 12B

Possible applications of Stablelm2 12B include general-purpose text generation, code analysis and development, domain-specific fine-tuning, and multilingual content creation. Its 12 billion parameters and 4k context length suggest suitability for creative writing, programming assistance, or tailored data processing at moderate complexity. Because it is a base model, it could also be adapted to new areas, but every use case needs thorough validation and testing before deployment.

- text generation for general-purpose tasks

- code analysis and development

- domain-specific fine-tuning

- multilingual content creation

Quantized Versions & Hardware Requirements of Stablelm2 12B

The medium q4 version of Stablelm2 12B requires a GPU with at least 20GB of VRAM (e.g., RTX 3090) for efficient operation, which makes it suitable for systems with moderate to high-end graphics cards. q4 quantization lowers memory demands compared with full-precision weights, but actual requirements vary with workload and implementation, so users should verify compatibility on their own hardware before relying on this figure.

q2, q3, q32, q4, q5, q6, q8
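As a rough illustration of why quantization reduces memory demands, the sketch below estimates the storage needed for the weights alone as parameters × bits per weight ÷ 8. The 12.1 billion parameter count comes from the description above; the mapping from each tag to a nominal bit width is an assumption, since real quantization formats store extra scaling data and use somewhat more bits per weight. The `q32` tag is left out because the source does not say what it denotes. The result is a lower bound only: it excludes the KV cache, activations, and runtime overhead, so it should not be read as a VRAM requirement.

```python
PARAMS = 12.1e9  # parameter count stated in the model description

# Nominal bits per weight for each tag (an assumption for illustration;
# actual quantized files carry additional per-block metadata).
NOMINAL_BITS = {"q2": 2, "q3": 3, "q4": 4, "q5": 5, "q6": 6, "q8": 8}


def weight_memory_gb(num_params, bits_per_weight):
    """Return the size of the weights alone in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


for tag, bits in NOMINAL_BITS.items():
    print(f"{tag}: about {weight_memory_gb(PARAMS, bits):.2f} GB of weights")

# 16-bit weights for comparison with an unquantized half-precision copy.
print(f"16-bit: about {weight_memory_gb(PARAMS, 16):.2f} GB of weights")
```

The estimate scales linearly with bit width, so halving the bits per weight halves the weight storage, while the other memory costs stay roughly the same.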

Conclusion

Stablelm2 12B is a 12-billion-parameter language model developed by Stability AI, with a 4k context length and with Rotary Position Embeddings and FlashAttention-2 for efficient performance. It is released under a non-commercial research license and is intended as a base for application-specific fine-tuning, text generation, and code analysis.

References

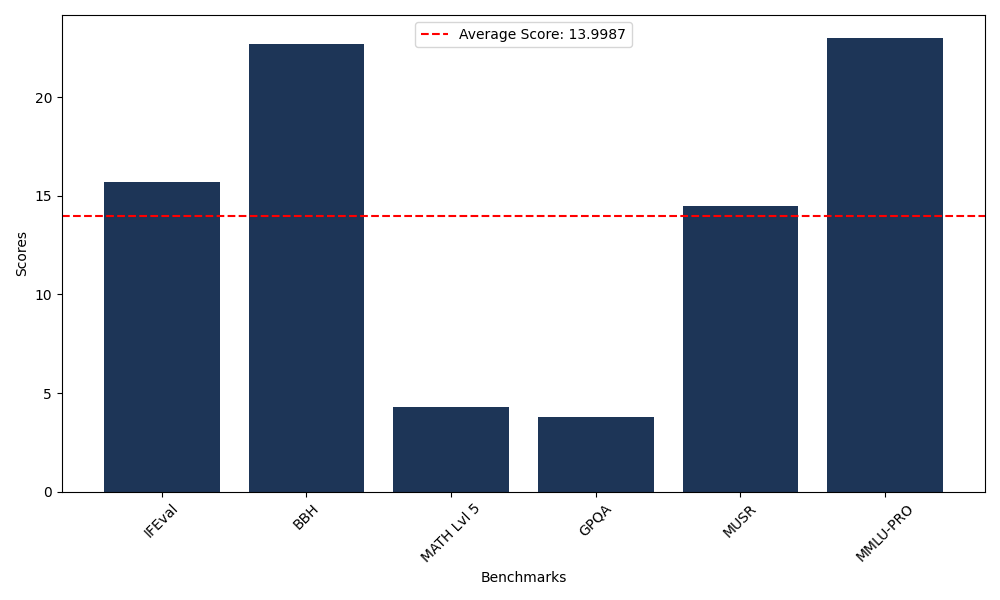

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 15.69 |
| BIG-Bench Hard (BBH) | 22.69 |
| MATH, Level 5 problems (MATH Lvl 5) | 4.31 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.80 |
| Multistep Soft Reasoning (MuSR) | 14.49 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 23.02 |
