Starcoder 15B Base - Model Details

Starcoder 15B Base is a large language model developed by the BigCode project, a non-profit organization. With 15B parameters, it targets coding tasks and covers a wide range of programming languages. The model is released under the BigCode OpenRAIL-M v1 License Agreement and is aimed at developers and researchers working on code-related applications.

Description of Starcoder 15B Base

StarCoder2-15B is a 15-billion-parameter model trained on 600+ programming languages from The Stack v2 dataset, with opt-out requests excluded. It uses Grouped Query Attention, a 16,384-token context window, and sliding window attention over 4,096 tokens. The model was trained with the Fill-in-the-Middle objective on 4+ trillion tokens, using the NVIDIA NeMo Framework on the NVIDIA Eos Supercomputer with DGX H100 systems.
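The sliding window attention mentioned above can be pictured as a causal mask in which each token sees only a fixed number of recent positions. The following is a minimal sketch of that idea, not the model's actual implementation: the function name is hypothetical, and it assumes the window counts the current token plus the tokens immediately before it (implementations differ on this detail). A window of 3 is used so the pattern is readable; the model's stated window is 4,096.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j], True when query position i may attend to key position j.

    Assumption: the window includes the current token, so position i sees
    positions i - window + 1 through i (clipped at 0), and never the future.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Tiny illustrative sizes; the model itself is described with a
# 4,096-token window inside a 16,384-token context.
mask = sliding_window_causal_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each row has at most `window` allowed positions, which is why the cost of attention per token stops growing once the sequence is longer than the window.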

Parameters & Context Length of Starcoder 15B Base

At 15B parameters, Starcoder 15B Base sits in the mid-scale range of open-source LLMs, trading some capability for lower resource requirements than larger models. Its 16k context length lets it process long code sequences and stay coherent across them, at the cost of more memory and compute as inputs grow. Together, these make it suited to non-trivial coding tasks while remaining practical for research and development.

- Parameter Size: 15B

- Context Length: 16k tokens

Possible Intended Uses of Starcoder 15B Base

Starcoder 15B Base is designed for coding tasks. Possible uses include generating and completing code, translating between programming languages, and fine-tuning for specific coding tasks. These could help developers automate repetitive work, improve cross-language compatibility, or tailor the model to niche programming needs. How well it performs in each case depends on dataset quality, task complexity, and available compute, and has not been established here. Limitations or unintended behaviors may surface without rigorous testing.

- code generation and completion

- code translation between programming languages

- model fine-tuning for specific coding tasks
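Because the model is described as trained with a Fill-in-the-Middle objective, a completion request can be phrased as infilling: the code before and after a gap is supplied, and the model is asked to produce the middle. The sketch below only builds such a prompt string. The three sentinel strings are an assumption based on the StarCoder family's tokenizer and should be checked against the tokenizer actually shipped with the model; `build_fim_prompt` is a hypothetical helper, not part of any library.

```python
# Assumed sentinel tokens; verify them against the model's tokenizer
# before relying on this layout.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Arrange prefix and suffix so the model continues with the missing middle."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


# Ask for the body of the return statement, given the code around it.
prompt = build_fim_prompt(
    prefix="def add(a, b):\n    return ",
    suffix="\n\nprint(add(1, 2))\n",
)
print(prompt)
```

The text generated after the final sentinel is the candidate infill, which the caller would splice between the original prefix and suffix.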

Possible Applications of Starcoder 15B Base

Given its emphasis on programming, Starcoder 15B Base has possible applications in code generation and completion, translation between programming languages, and fine-tuning for specialized coding workflows. These might help developers streamline repetitive tasks or improve interoperability across languages. Their effectiveness depends on input quality, task complexity, and computational constraints, so each application should be tested rigorously before deployment to confirm it is reliable and fits the specific need.


Quantized Versions & Hardware Requirements of Starcoder 15B Base

The q4 version of Starcoder 15B Base balances precision against performance. It calls for a GPU with at least 20GB of VRAM (e.g., an RTX 3090) and a system with 32GB or more of RAM. Exact hardware needs vary with the workload and with the model variant chosen.

Available versions: fp16, q2, q3, q4, q5, q6, q8
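To compare the listed versions, a rough lower bound on weight storage is parameters × bits per weight ÷ 8. The sketch below applies that arithmetic under two assumptions: the parameter count is taken as exactly 15 billion, and each version name is read as its nominal bit width. Real quantized files carry extra scale and offset data, so actual sizes are somewhat larger, and the figures exclude the KV cache and activations.

```python
PARAMS = 15e9  # approximate parameter count stated for the model

# Nominal bits per weight for each listed version (an assumption: actual
# quantization formats add per-block metadata on top of this).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(params: float, bits: float) -> float:
    """Lower-bound size of the weights alone, in decimal gigabytes."""
    return params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>4}: ~{weight_memory_gb(PARAMS, bits):.1f} GB")
```

By this estimate the q4 weights alone need about 7.5 GB, well below the 20GB VRAM figure given above. The gap presumably leaves room for format overhead, the KV cache at long context lengths, and runtime buffers.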

Conclusion

Starcoder 15B Base is a 15-billion-parameter model developed by the BigCode project, a non-profit organization, and released under the BigCode OpenRAIL-M v1 License Agreement. It focuses on coding tasks, combining broad programming-language coverage with a 16k context length for code generation and analysis.

References

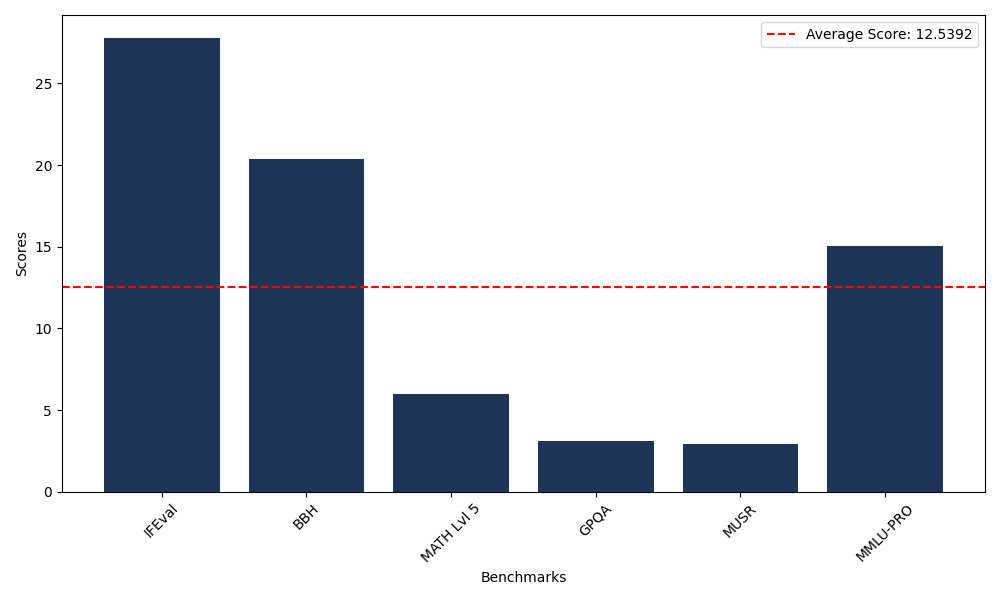

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 27.80 |
| Big Bench Hard (BBH) | 20.37 |
| Mathematical Reasoning Test (MATH Lvl 5) | 5.97 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.13 |
| Multistep Soft Reasoning (MuSR) | 2.93 |
| Massive Multitask Language Understanding (MMLU-PRO) | 15.03 |
