Starcoder 3B Base - Model Details

Starcoder 3B Base is a 3B-parameter language model developed by the BigCode project and licensed under the BigCode OpenRAIL-M v1 License Agreement. It targets coding tasks across a range of programming languages.

Description of Starcoder 3B Base

StarCoder2-3B is a 3B-parameter model trained on 17 programming languages from The Stack v2, with opt-out requests excluded. It uses Grouped Query Attention, a context window of 16,384 tokens, and sliding window attention of 4,096 tokens. The model was trained with the Fill-in-the-Middle objective on more than 3 trillion tokens.
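To make the Fill-in-the-Middle objective concrete, the sketch below assembles a prompt in prefix-suffix-middle order, which is the common layout for FIM-trained code models. The sentinel strings `<fim_prefix>`, `<fim_suffix>`, and `<fim_middle>` are assumptions that should be checked against the model's tokenizer, and `build_fim_prompt` is a hypothetical helper, not part of any library.

```python
# Sentinel strings are assumed; confirm them against the tokenizer's
# special tokens before relying on this layout.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Place the code before and after the gap in prefix-suffix-middle order.

    The model is then expected to generate the missing middle after the
    final sentinel.
    """
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


# The gap sits after "return " and before the call site.
prompt = build_fim_prompt(
    prefix="def add(a, b):\n    return ",
    suffix="\n\nprint(add(1, 2))\n",
)
print(prompt)
```

The resulting string would be tokenized and passed to the model as an ordinary prompt; whatever the model generates after the last sentinel is the proposed infill.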

Parameters & Context Length of Starcoder 3B Base

StarCoder2-3B is a 3B-parameter model with a context length of 16k tokens, designed to balance efficiency and capability. At 3B parameters it sits in the small to mid-scale range: light enough to run on modest hardware, yet large enough to handle varied programming tasks. Its 16k-token context counts as long, so it can take in larger files or several related files at once, although longer inputs cost more memory and compute than shorter ones.

- Parameter Size: 3B

- Context Length: 16k
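The relationship between the 16,384-token context window and the 4,096-token sliding window can be illustrated with a little arithmetic. The sketch below assumes causal attention in which the window includes the current token; implementations differ on that detail, so the exact boundaries are an assumption. `attended_span` and `fits_context` are hypothetical helpers written for this illustration.

```python
CONTEXT_WINDOW = 16_384  # maximum tokens per sequence, from the text
SLIDING_WINDOW = 4_096   # sliding window attention size, from the text


def attended_span(position: int, window: int = SLIDING_WINDOW) -> range:
    """Positions a token at 0-based `position` can attend to directly.

    Assumes causal attention where the window counts the token itself.
    """
    if position < 0:
        raise ValueError("position must be non-negative")
    start = max(0, position - window + 1)
    return range(start, position + 1)


def fits_context(prompt_tokens: int, new_tokens: int,
                 context: int = CONTEXT_WINDOW) -> bool:
    """Check that the prompt plus the requested generation fits the context."""
    return prompt_tokens + new_tokens <= context


early = attended_span(100)             # whole history is still visible
late = attended_span(CONTEXT_WINDOW - 1)  # only the most recent window
print(len(early), len(late), late.start)
print(fits_context(16_000, 384), fits_context(16_000, 385))
```

Under these assumptions, a token near the end of a full-length input attends directly to only the most recent 4,096 positions; information from earlier tokens can still reach it indirectly through stacked layers.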

Possible Intended Uses of Starcoder 3B Base

StarCoder2-3B is designed for coding tasks, with potential applications in code generation, code completion, and code translation. It may help developers write, refine, or convert code across languages, and could support automating repetitive coding workflows or multi-language development. Its context length and parameter size suggest it can handle moderately complex coding scenarios, but none of these uses is guaranteed: each should be tested against the needs and technical constraints of the project at hand.

- code generation

- code completion

- code translation

Possible Applications of Starcoder 3B Base

Beyond code generation, completion, and translation, StarCoder2-3B might also assist with generating documentation or analyzing code structure, though these uses would need further exploration to confirm their viability. As with the intended uses above, developers working on multi-language projects or repetitive coding tasks should evaluate each application against their own requirements before relying on it.

- code generation

- code completion

- code translation

- code documentation assistance

Quantized Versions & Hardware Requirements of Starcoder 3B Base

StarCoder2-3B with q4 quantization, a mid-precision option, trades some accuracy for lower memory use. The recommended setup for optimal operation is a GPU with at least 12GB of VRAM and a system with 32GB of RAM, which places it within reach of mid-range hardware. The q4 variant uses less memory than higher-precision versions such as fp16 while keeping reasonable output quality. Adequate GPU cooling and a power supply sized for the hardware are also worth checking. The available variants are:

- fp16, q2, q3, q4, q5, q6, q8
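A rough way to compare the listed variants is to estimate weight storage from the parameter count and the nominal bits per weight. The sketch below assumes exactly 3 billion parameters and takes each variant's name at face value (q4 as 4 bits, and so on); real quantization formats store extra scaling data, and inference also needs memory for the KV cache and activations, so these numbers are a lower bound on weights alone and do not replace the hardware recommendation above.

```python
PARAMS = 3_000_000_000  # "3B" from the text, rounded; the exact count may differ

# Nominal bits per weight for each listed variant (assumption: actual
# formats add metadata, so real files are somewhat larger).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gigabytes(params: int, bits: int) -> float:
    """Approximate weight storage in GB, using 1 GB = 1e9 bytes."""
    return params * bits / 8 / 1e9


estimates = {
    name: weight_gigabytes(PARAMS, bits) for name, bits in NOMINAL_BITS.items()
}

for name, gb in estimates.items():
    print(f"{name:>4}: ~{gb:.2f} GB of weights")
```

Under these assumptions the fp16 weights come to about 6 GB and the q4 weights to about 1.5 GB, which shows why lower-bit variants are attractive on constrained hardware.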

Conclusion

StarCoder2-3B is a 3B-parameter model with a 16k-token context length, built for coding tasks such as code generation, completion, and translation. Its compact size makes it a reasonable candidate for developers who need to balance capability against resource usage, though it should be evaluated on the specific application before adoption.

References

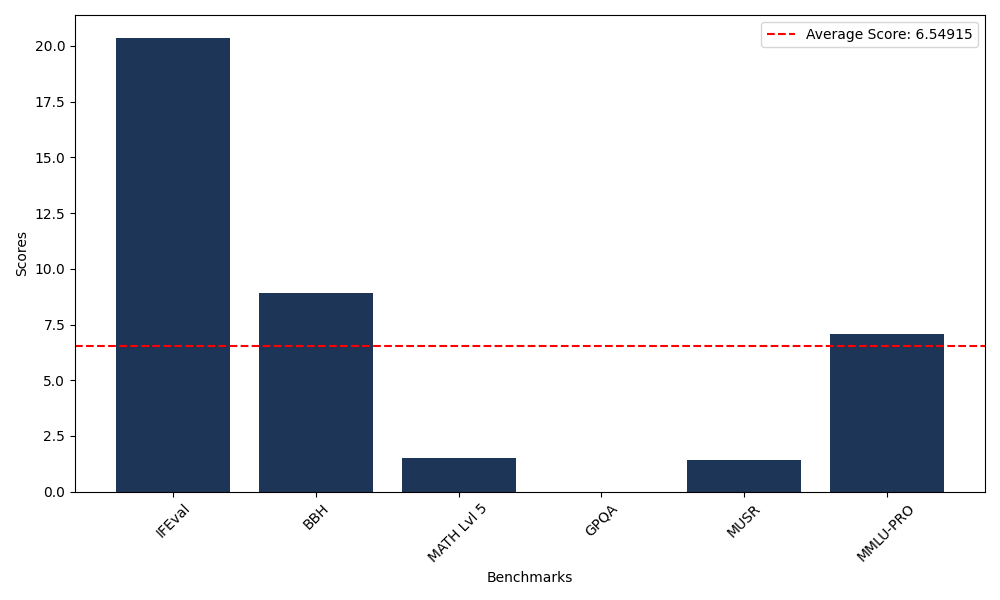

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 20.37 |
| Big Bench Hard (BBH) | 8.91 |
| Mathematical Reasoning Test (MATH Lvl 5) | 1.51 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 1.43 |
| Massive Multitask Language Understanding (MMLU-PRO) | 7.07 |
