Starcoder2 15B - Model Details

Starcoder2 15B is a large language model with 15B parameters, developed by the non-profit BigCode project. It is released under the BigCode OpenRAIL-M v1 License Agreement (BigCode-Open-RAIL-M-v1), and its training process is documented openly. The Starcoder2 family comes in three sizes (3B, 7B, and 15B), aiming for strong performance while prioritizing openness and accessibility in AI development.

Description of Starcoder2 15B

Starcoder2 15B is a 15B-parameter large language model for programming tasks, trained on 600+ programming languages from The Stack v2 dataset. It uses Grouped Query Attention (GQA) to improve inference efficiency and has a context window of 16,384 tokens with a sliding attention window of 4,096 tokens, so each token attends to at most the previous 4,096 positions while the model as a whole can process long inputs. The model was trained on more than 4 trillion tokens, a scale intended to support robust performance across diverse coding scenarios. Its architecture and training scale make it a useful tool for developers and AI researchers.
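The two attention ideas mentioned above can be sketched without any framework. This is a minimal illustration, not Starcoder2's actual implementation: the function names are hypothetical, and the toy sizes (a 6-token sequence, a 3-token window, 8 query heads sharing 2 key/value heads) are chosen for readability rather than taken from the model's configuration.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j] == True when query position i may attend to key position j.

    Causal: j <= i (no looking ahead).
    Sliding window: only the most recent `window` positions, including i itself,
    are visible, i.e. i - j < window.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def kv_head_for_query_head(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Map a query head to the key/value head it shares under grouped-query attention.

    Consecutive groups of num_q_heads // num_kv_heads query heads share one KV head,
    which shrinks the KV cache by that same factor.
    """
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("num_q_heads must be a multiple of num_kv_heads")
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size


if __name__ == "__main__":
    # Toy window for readability; the real model uses a 4,096-token window.
    mask = sliding_window_causal_mask(seq_len=6, window=3)
    for row in mask:
        print("".join("x" if allowed else "." for allowed in row))

    # Hypothetical head counts, not Starcoder2 15B's actual configuration.
    print([kv_head_for_query_head(h, num_q_heads=8, num_kv_heads=2) for h in range(8)])
```

In this scheme a single layer never reads further back than `window` positions, so information from earlier in a long input reaches later tokens only by being relayed through the stacked layers.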

Parameters & Context Length of Starcoder2 15B

Starcoder2 15B has 15B parameters, which places it in the mid-scale range of open-source LLMs: large enough for moderately complex tasks while remaining more resource-efficient than the largest models. Its 16k-token context length falls into the long-context range, letting it process extended inputs such as large source files, at the cost of more memory and compute than shorter contexts require. Together, these make it well suited to programming tasks that need both model capacity and a wide view of the surrounding code.

- Parameter Size: 15B

- Context Length: 16k (16,384 tokens)
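Because the prompt and the generated tokens share one 16,384-token window, a practical step is to check the token budget and split longer inputs into overlapping chunks. The sketch below assumes the input has already been converted to token ids by the model's tokenizer (not shown); the helper names and the 256-token overlap are illustrative choices, not part of any official API.

```python
CONTEXT_LENGTH = 16_384  # Starcoder2 15B context window, in tokens


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Prompt tokens and generated tokens must fit in the same window."""
    return prompt_tokens + max_new_tokens <= context_length


def chunk_token_ids(token_ids: list[int], max_new_tokens: int, overlap: int = 256,
                    context_length: int = CONTEXT_LENGTH) -> list[list[int]]:
    """Split token ids into chunks that each leave room for max_new_tokens.

    Consecutive chunks share `overlap` tokens so that code near a chunk
    boundary is seen with some surrounding context.
    """
    budget = context_length - max_new_tokens  # tokens available for input
    if budget <= overlap:
        raise ValueError("max_new_tokens + overlap leaves no room for input")
    if not token_ids:
        return []
    step = budget - overlap
    chunks = []
    start = 0
    while True:
        chunks.append(token_ids[start:start + budget])
        if start + budget >= len(token_ids):
            break  # this chunk reached the end of the input
        start += step
    return chunks


if __name__ == "__main__":
    fake_ids = list(range(40_000))  # stand-in for real tokenizer output
    print(fits_in_context(len(fake_ids), max_new_tokens=1_024))
    chunks = chunk_token_ids(fake_ids, max_new_tokens=1_024)
    print([len(c) for c in chunks])
```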

Possible Intended Uses of Starcoder2 15B

With 15B parameters and a 16k context length, Starcoder2 15B could serve several programming applications. In code generation, it could draft code snippets or entire programs, though the output would need to be validated for correctness and relevance. In code completion, it could suggest the next lines of code or propose fixes for errors, but its effectiveness in dynamic or niche programming environments has yet to be tested. In code translation, it could convert code between languages, though differences in syntax and domain-specific conventions would need to be addressed. These uses show the model's flexibility, but each calls for careful evaluation before deployment.

- code generation

- code completion

- code translation
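For code completion, StarCoder-family models are commonly prompted in fill-in-the-middle (FIM) form: the code before and after the cursor is supplied, and the model generates the missing middle. The sketch below only builds such a prompt string. It assumes the `<fim_prefix>`, `<fim_suffix>`, and `<fim_middle>` special tokens used by the original StarCoder, which should be confirmed against the Starcoder2 tokenizer before use; tokenization and generation are left out.

```python
# Assumption: Starcoder2's tokenizer uses the same FIM special tokens as the
# original StarCoder. Verify against the tokenizer's special tokens first.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Prefix-suffix-middle ordering: the model is expected to generate
    the missing code right after the <fim_middle> token."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


# Code around a hypothetical editor cursor placed inside the function body.
code_before_cursor = "def mean(xs: list[float]) -> float:\n    "
code_after_cursor = "\n\nprint(mean([1.0, 2.0, 3.0]))\n"

prompt = build_fim_prompt(code_before_cursor, code_after_cursor)
print(prompt)
```

Supplying the suffix lets the model see how the completed code will be used, which plain left-to-right completion cannot do.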

Possible Applications of Starcoder2 15B

Beyond these core tasks, Starcoder2 15B could support broader applications. In development tools, it might power context-aware code generation and completion, though its accuracy in specialized or fast-evolving programming environments would need verification. As a translation aid, it could help port code between languages, provided that syntax variations and domain-specific requirements are carefully checked. Educational tools and collaborative coding platforms are further possible applications, but they would require rigorous validation to ensure reliability. In every case, the model's versatility should be weighed against a thorough assessment before implementation.

- code generation

- code completion

- code translation
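Starcoder2 15B is a base model rather than an instruction-tuned one, so translation is typically framed as a completion task: show the source code, optionally preceded by a few already-translated examples, and let the model continue in the target language. The prompt layout below (`### <language>` headers) and the helper name are hypothetical conventions chosen for illustration, not a documented format, and any translated output should still be compiled and tested.

```python
from typing import Optional


def build_translation_prompt(source_code: str, source_lang: str, target_lang: str,
                             examples: Optional[list[tuple[str, str]]] = None) -> str:
    """Build a completion-style translation prompt for a base code model.

    Each example is a (source, target) pair shown before the real query.
    The prompt ends with an empty target-language section for the model to fill.
    """
    parts = []
    for src, tgt in examples or []:
        parts.append(f"### {source_lang}\n{src.strip()}\n\n### {target_lang}\n{tgt.strip()}\n")
    parts.append(f"### {source_lang}\n{source_code.strip()}\n\n### {target_lang}\n")
    return "\n".join(parts)


# One hypothetical few-shot example, then the code to translate.
examples = [
    ("def add(a, b):\n    return a + b",
     "function add(a, b) {\n  return a + b;\n}"),
]
prompt = build_translation_prompt("def square(x):\n    return x * x",
                                  "Python", "JavaScript", examples)
print(prompt)
```

The few-shot example shows the model the expected output format; in practice generation would also need a stopping rule, such as cutting the output at the next `###` header.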

Quantized Versions & Hardware Requirements of Starcoder2 15B

Starcoder2 15B with medium q4 quantization offers a good balance between precision and performance. It requires a GPU with at least 16GB of VRAM, a multi-core CPU, and 32GB of system memory for smooth operation. This makes it feasible to run on mid-range hardware, but users should check their graphics card's specifications and test each intended application on their own setup.

fp16, q2, q3, q4, q5, q6, q8
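A rough lower bound on the memory each quantization level needs follows from parameter count × bits per weight ÷ 8. The sketch below uses nominal bit widths for the listed formats, which is a simplifying assumption: real quantized files also store scaling data, and inference needs extra memory for the KV cache and activations, so actual requirements (such as the 16GB VRAM figure above) are higher than these weight-only estimates.

```python
# Nominal bits per weight (assumption). Real quantized formats store extra
# scale/offset data, so actual file sizes are somewhat larger.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 15e9  # 15B parameters
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: ~{weight_memory_gb(params, bits):.1f} GB of weights")
```

For example, fp16 works out to 15e9 × 16 / 8 = 30 GB of weights, and nominal 4-bit to 7.5 GB, which is why quantization is what brings the model within reach of a single mid-range GPU.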

Conclusion

Starcoder2 15B is a large language model with 15B parameters and a 16k context length, developed by the non-profit BigCode project under the BigCode OpenRAIL-M v1 License Agreement, with an emphasis on transparency and open-source accessibility. It is designed for code generation, code completion, and code translation, though its effectiveness in specific scenarios requires thorough evaluation.

References

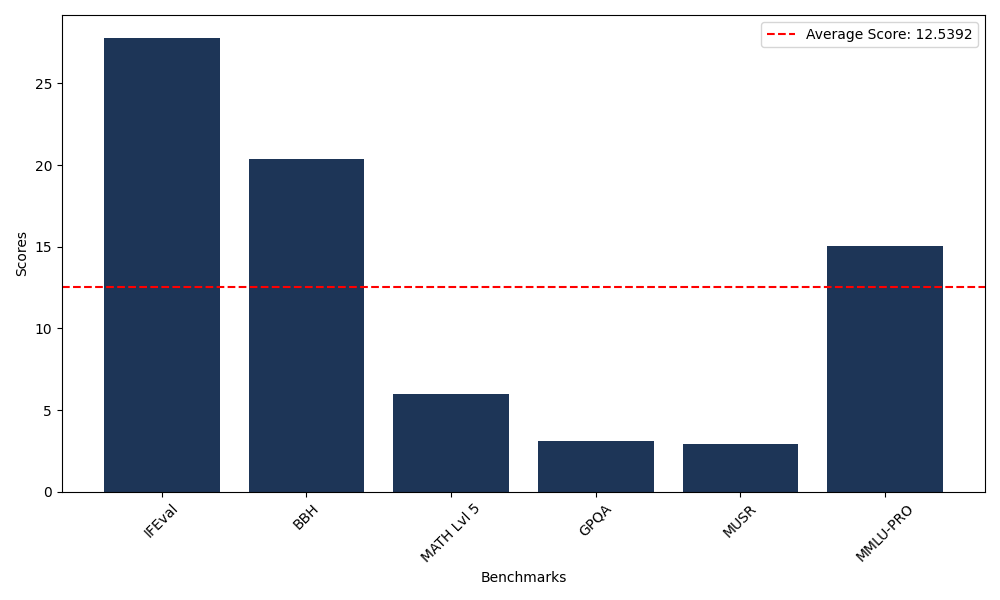

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 27.80 |
| BIG-Bench Hard (BBH) | 20.37 |
| MATH competition mathematics, Level 5 (MATH Lvl 5) | 5.97 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.13 |
| Multistep Soft Reasoning (MuSR) | 2.93 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 15.03 |
