Starling Lm 7B - Model Details

Starling Lm 7B is a large language model developed by Berkeley-Nest, a non-profit organization. It has 7 billion parameters and is released under the Apache License 2.0. The model is designed to improve chatbot helpfulness and harmlessness, building on research into reinforcement learning from AI feedback (RLAIF).

Description of Starling Lm 7B

Starling-7B-alpha is an open large language model trained with Reinforcement Learning from AI Feedback (RLAIF). Its training relies on berkeley-nest/Nectar, a ranking dataset labeled by GPT-4, together with a reward-training and policy-tuning pipeline. The model is fine-tuned from OpenChat 3.5 using a learned reward model and a policy optimization method, and it performs strongly on MT-Bench, where among the models compared it trails only GPT-4 and GPT-4 Turbo. As with the rest of the project, the emphasis is on making chatbots more helpful and harmless.
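The reward-training step turns ranked responses into a training signal for the reward model. The sketch below illustrates one common way to do this, assuming a Plackett-Luce likelihood over a ranked list of K responses (it reduces to the Bradley-Terry pairwise loss when K = 2). The function name and inputs are hypothetical, and the actual Starling reward objective may differ in detail.

```python
import math
from typing import Sequence


def plackett_luce_nll(rewards_in_rank_order: Sequence[float]) -> float:
    """Negative log-likelihood of an observed ranking under a Plackett-Luce model.

    rewards_in_rank_order[0] is the reward-model score of the best-ranked response,
    rewards_in_rank_order[-1] the score of the worst-ranked one.
    """
    nll = 0.0
    # The last position is forced (probability 1), so it contributes nothing.
    for i in range(len(rewards_in_rank_order) - 1):
        remaining = rewards_in_rank_order[i:]
        # Stable log-sum-exp over the responses not yet placed in the ranking.
        m = max(remaining)
        log_denom = m + math.log(sum(math.exp(r - m) for r in remaining))
        # Log-probability that response i is picked first among the remaining ones.
        nll -= rewards_in_rank_order[i] - log_denom
    return nll


if __name__ == "__main__":
    # Hypothetical reward scores for three responses, listed best-ranked first.
    print(plackett_luce_nll([2.1, 1.4, 0.3]))
```

Minimizing this loss pushes the reward model to score higher-ranked responses above lower-ranked ones; the trained reward model then guides policy optimization.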

Parameters & Context Length of Starling Lm 7B

Starling Lm 7B has 7B parameters and a 4k-token context length, which places it in the small-scale category by parameter count and the short-context category by input length. The 7B size keeps it efficient and accessible on resource-constrained hardware, at the cost of reduced capacity for highly complex tasks. The 4k window comfortably holds dialogue turns and concise queries but not very long texts, so the model is better suited to conversation than to extended document analysis. These choices trade some capability for practical deployability.

- Parameter Size: 7B

- Context Length: 4k
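To make the 4k limit concrete, the sketch below budgets tokens for a chat session. It assumes the window is 4,096 tokens shared between the prompt (including earlier turns) and the generated reply. Token counts would come from the model's tokenizer, which is not shown, and the helper names are hypothetical.

```python
from typing import List

CONTEXT_LENGTH = 4096  # assumption: "4k" means 4,096 tokens


def max_new_tokens(prompt_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Tokens left for generation once the prompt occupies part of the window."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_length - prompt_tokens, 0)


def truncate_history(turn_lengths: List[int], reserve_for_reply: int,
                     context_length: int = CONTEXT_LENGTH) -> List[int]:
    """Drop the oldest turns until the remaining ones plus the reply budget fit."""
    kept = list(turn_lengths)
    while kept and sum(kept) + reserve_for_reply > context_length:
        kept.pop(0)  # discard the oldest turn first
    return kept


if __name__ == "__main__":
    print(max_new_tokens(3000))
    print(truncate_history([1500, 1500, 1500], reserve_for_reply=512))
```

Dropping the oldest turns first is only one policy; summarizing earlier turns is a common alternative when long-range context matters.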

Possible Intended Uses of Starling Lm 7B

Starling Lm 7B is designed for chat-based interactions, code generation, and research and development. Possible uses include powering conversational agents with more natural dialogue, helping developers write or debug code, and supporting exploratory research through text generation and analysis. Each of these applications needs careful evaluation against technical, ethical, and practical requirements. The model’s RLAIF training for helpfulness and harmlessness suggests it may suit tasks involving user engagement or iterative problem-solving, though its effectiveness in these areas has yet to be confirmed.

- chat-based interactions

- code generation

- research and development
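For chat and coding use, prompts must follow the model's conversation template. The sketch below assumes the OpenChat 3.5-style template that Starling-7B-alpha is reported to follow (`GPT4 Correct User` / `GPT4 Correct Assistant` turns, a `Code User` / `Code Assistant` variant for coding, and the `<|end_of_turn|>` separator); verify the exact strings against the official model card before relying on them. The function name is hypothetical.

```python
from typing import List, Tuple

END_OF_TURN = "<|end_of_turn|>"


def build_prompt(history: List[Tuple[str, str]], user_message: str,
                 coding: bool = False) -> str:
    """Render a conversation into one prompt string (assumed OpenChat 3.5-style template).

    history holds (user, assistant) pairs from earlier turns.
    """
    if coding:
        user_tag, assistant_tag = "Code User", "Code Assistant"
    else:
        user_tag, assistant_tag = "GPT4 Correct User", "GPT4 Correct Assistant"
    parts = []
    for user, assistant in history:
        parts.append(f"{user_tag}: {user}{END_OF_TURN}")
        parts.append(f"{assistant_tag}: {assistant}{END_OF_TURN}")
    parts.append(f"{user_tag}: {user_message}{END_OF_TURN}")
    parts.append(f"{assistant_tag}:")  # the model continues generating from here
    return "".join(parts)


if __name__ == "__main__":
    print(build_prompt([("Hello", "Hi")], "How are you today?"))
    print(build_prompt([], "Implement quicksort using C++", coding=True))
```

Building the string in one place keeps multi-turn history and the coding variant consistent; generation would normally stop when the model emits `<|end_of_turn|>`.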

Possible Applications of Starling Lm 7B

With 7B parameters and a 4k context length, Starling Lm 7B could also serve in areas such as educational tools, content creation, and collaborative problem-solving. Examples include drafting text, generating code snippets, and supporting exploratory research tasks. Its RLAIF focus on helpfulness and harmlessness suggests it may be well suited to tasks built around iterative feedback, but applications in other domains would need rigorous testing, and every use case should be assessed for reliability and appropriateness before deployment.

- chat-based interactions

- code generation

- research and development

- content creation

Quantized Versions & Hardware Requirements of Starling Lm 7B

Starling Lm 7B's medium q4 version requires a GPU with at least 16GB VRAM and a multi-core CPU, which puts it within reach of mid-range hardware; at least 32GB of system memory is recommended for stability. Such systems can handle tasks like chat and code generation, but actual performance and memory use should be tested on the specific hardware.

- Available quantizations: fp16, q2, q3, q4, q5, q6, q8
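A rough memory estimate helps when matching a quantized version to a GPU. The sketch below assumes each label's number is its nominal bits per weight and uses the nominal 7B parameter count; real quantized files add per-block scales and run somewhat larger. The key/value cache figures assume a Mistral-7B-style architecture (32 layers, 8 key/value heads, head dimension 128), which is an assumption based on the OpenChat 3.5 lineage rather than something stated here. Both numbers ignore runtime overhead, activations, and framework buffers.

```python
PARAMS = 7.0e9  # nominal "7B"; the exact parameter count is somewhat higher

# Nominal bits per weight (assumption); real quantized formats add scale overhead.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(quant: str, params: float = PARAMS) -> float:
    """Approximate weight storage in decimal gigabytes."""
    return params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def kv_cache_gb(seq_len: int = 4096, layers: int = 32, kv_heads: int = 8,
                head_dim: int = 128, bytes_per_value: int = 2) -> float:
    """fp16 key/value cache for one full-length sequence (architecture values assumed)."""
    # Factor 2 covers keys and values.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value / 1e9


if __name__ == "__main__":
    for quant in BITS_PER_WEIGHT:
        print(f"{quant:>4}: ~{weight_gb(quant):.1f} GB weights "
              f"+ ~{kv_cache_gb():.2f} GB KV cache")
```

Treat these as lower bounds for budgeting; the hardware figures given above leave room for the overheads the sketch omits.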

Conclusion

Starling Lm 7B is a 7B-parameter open-source language model developed by Berkeley-Nest under the Apache License 2.0 and trained with RLAIF to improve chatbot helpfulness and harmlessness. Its range of quantized versions, from fp16 down to q2, lets it run on a variety of hardware, making it accessible for diverse applications.

References

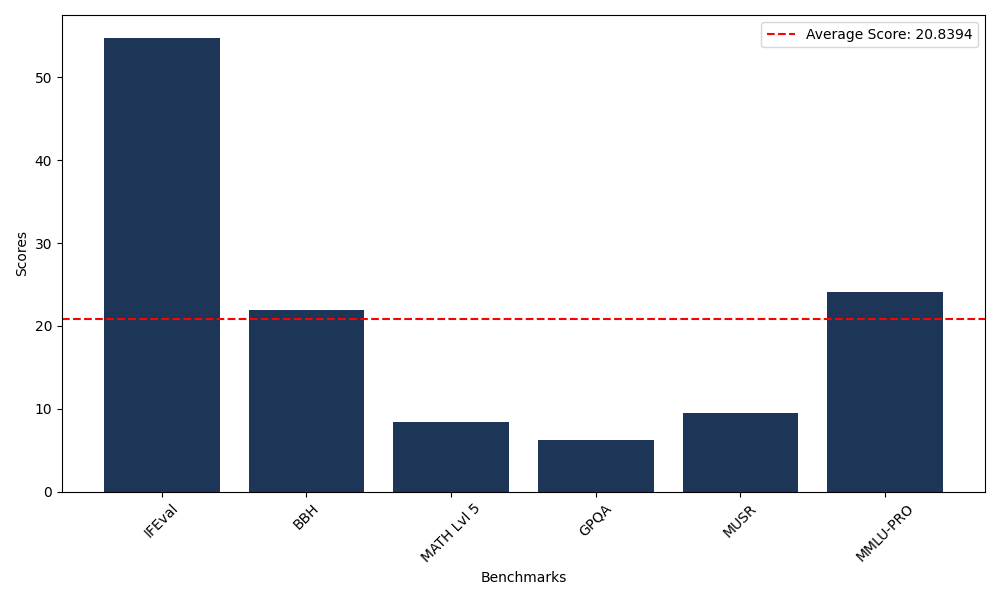

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 54.80 |
| BIG-Bench Hard (BBH) | 21.95 |
| MATH benchmark, Level 5 problems (MATH Lvl 5) | 8.38 |
| Graduate-Level Google-Proof Q&A (GPQA) | 6.26 |
| Multistep Soft Reasoning (MuSR) | 9.50 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 24.13 |
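One simple way to summarize the benchmark table is an unweighted mean of the six scores. The sketch below computes it directly from the values listed; it is only an illustrative summary and is not necessarily how any leaderboard computes its own average.

```python
# Scores copied from the benchmark table.
scores = {
    "IFEval": 54.80,
    "BBH": 21.95,
    "MATH Lvl 5": 8.38,
    "GPQA": 6.26,
    "MuSR": 9.50,
    "MMLU-PRO": 24.13,
}

mean_score = sum(scores.values()) / len(scores)
weakest = min(scores, key=scores.get)

print(f"Unweighted mean over {len(scores)} benchmarks: {mean_score:.2f}")
print(f"Lowest score: {weakest} ({scores[weakest]:.2f})")
```

The mean is dominated by the IFEval result, so per-benchmark scores remain more informative than the single number.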
