Tulu3 8B - Model Details

Tulu3 8B is a large language model developed by the Allen Institute for Artificial Intelligence (Ai2), a nonprofit organization. It has 8 billion parameters and is released under the Llama 3.1 Community License Agreement. Its design emphasizes advanced post-training techniques aimed at improving accuracy and efficiency across a range of applications.

Description of Tulu3 8B

Tülu 3 is an instruction-following model family designed for state-of-the-art performance across diverse tasks, including MATH, GSM8K, and IFEval. It comes with a post-training package of fully open-source data, code, and recipes that serves as a comprehensive guide to modern post-training techniques. The model is trained on a mix of publicly available, synthetic, and human-created datasets and is released under the Llama 3.1 Community License Agreement. Developed by the Allen Institute for Artificial Intelligence (Ai2), a nonprofit organization, Tülu 3 is part of a broader effort to train fully open-source models such as OLMo. This page covers the 8B-parameter variant.

Parameters & Context Length of Tulu3 8B

Tulu3 8B is a mid-scale model with 8B parameters, offering a balance between performance and resource efficiency that suits tasks of moderate complexity. Its 8k context length lets it handle long texts, which helps in applications that need extended context, although longer inputs demand more memory and compute.

- Name: Tulu3 8B

- Parameter_Size: 8b

- Context_Length: 8k

- Implications: Mid-scale parameters for balanced performance, 8k context for long texts but resource-intensive.
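The resource cost of a long context can be made concrete with a rough estimate of the key/value cache, which grows linearly with the number of tokens held in context. The sketch below is illustrative only: the architecture values (32 layers, 8 key/value heads, head dimension 128) are assumptions taken from the Llama 3.1 8B base architecture and are not stated on this page, "8k" is assumed to mean 8192 tokens, and cache entries are assumed to be stored as 16-bit values.

```python
def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_value=2):
    """Rough KV-cache size for one sequence.

    All default architecture values are assumptions (Llama 3.1 8B style),
    not figures given in the model card.
    """
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


for tokens in (1024, 4096, 8192):
    gib = kv_cache_bytes(tokens) / 2**30
    print(f"{tokens:>5} tokens -> {gib:.3f} GiB of KV cache")
```

Under these assumptions, filling the full context costs roughly eight times the cache memory of a 1024-token prompt, on top of the memory needed for the weights themselves.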

Possible Intended Uses of Tulu3 8B

With 8B parameters and an 8k context length, Tulu3 8B is a candidate for research, educational use, and task completion such as math or coding. It could be applied to exploring complex problems, supporting learning environments, or automating specific workflows. Its open-source nature and focus on post-training techniques suggest it can be adapted to other scenarios as well, but how well it performs depends on the context and requirements of the task, so each use should be evaluated against its specific goals and constraints.

- Name: Tulu3 8B

- Intended_Uses: research, educational use, task completion (e.g., math, coding)

- Parameter_Size: 8b

- Context_Length: 8k

Possible Applications of Tulu3 8B

Possible applications of Tulu3 8B fall into research, educational use, and task completion such as math or coding. It may be useful in scenarios that require complex reasoning, such as analyzing datasets, generating educational content, or assisting with programming challenges. The model's open-source nature and focus on post-training techniques make it adaptable, but each application needs rigorous testing against its own requirements and constraints before deployment.

- Name: Tulu3 8B

- Possible Applications: research, educational use, task completion (e.g., math, coding)

- Parameter_Size: 8b

- Context_Length: 8k

Quantized Versions & Hardware Requirements of Tulu3 8B

The q4 quantization of Tulu3 8B calls for a GPU with at least 16GB VRAM for efficient operation, along with a system with at least 32GB RAM, adequate cooling, and a reliable power supply. It trades some precision for a smaller footprint, which makes it suitable for users with mid-range hardware. Actual requirements may vary with workload and optimization.

- Name: Tulu3 8B

- Quantized_Versions: fp16, q4, q8

- Parameter_Size: 8b

- Context_Length: 8k
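As a rough guide to how the listed quantized versions differ, the memory needed for the weights alone can be estimated as parameter count times bits per weight. The sketch below assumes exactly 8 billion parameters and nominal bit widths of 16, 8, and 4 for fp16, q8, and q4; real quantized formats store extra scaling metadata, so actual files are somewhat larger, and the names `PARAMS`, `BITS_PER_WEIGHT`, and `weight_gb` are illustrative only.

```python
PARAMS = 8e9  # "8b" from this page, taken as exactly 8 billion (assumption)

# Nominal bits per weight (assumption); real quantized formats add overhead.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_gb(params, bits):
    """Approximate weight storage in decimal gigabytes."""
    return params * bits / 8 / 1e9


estimates = {name: weight_gb(PARAMS, bits) for name, bits in BITS_PER_WEIGHT.items()}
for name, gb in estimates.items():
    print(f"{name}: ~{gb:.0f} GB for weights alone")
```

These figures cover weights only. Context cache, activations, and runtime overhead come on top, which is one reason a hardware recommendation can sit well above the raw weight size.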

Conclusion

Tulu3 8B is a large language model developed by the Allen Institute for Artificial Intelligence (Ai2), with 8B parameters and an 8k context length. It is built with advanced post-training techniques, ships with open-source data, code, and recipes, and is released under the Llama 3.1 Community License Agreement. It aims to support diverse applications while emphasizing transparency and adaptability in AI research and development.

References

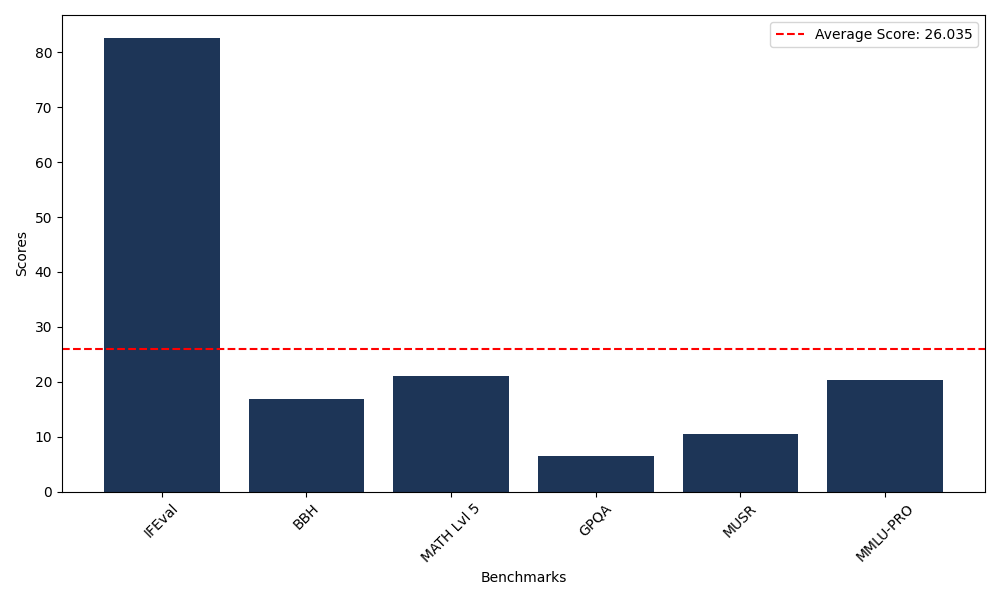

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 82.67 |
| BIG-Bench Hard (BBH) | 16.67 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 19.64 |
| Graduate-Level Google-Proof Q&A (GPQA) | 6.49 |
| Multistep Soft Reasoning (MuSR) | 10.45 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 20.30 |
| Instruction Following Evaluation (IFEval) | 82.55 |
| BIG-Bench Hard (BBH) | 16.86 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 21.15 |
| Graduate-Level Google-Proof Q&A (GPQA) | 6.26 |
| Multistep Soft Reasoning (MuSR) | 10.52 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 20.23 |
