Vicuna 7B - Model Details

Vicuna 7B is an open-source chat assistant model developed by the Large Model Systems Organization (LMSYS), a community-driven research initiative. With 7 billion parameters, it is reported to approach ChatGPT-level quality while remaining freely available under the Llama 2 Community License. The model is designed to handle multi-turn conversations with responsive, accurate replies, which makes it a versatile tool for a range of applications. Because it is community-maintained, it can be improved and adapted as user needs evolve.

Description of Vicuna 7B

Vicuna 7B is a chat assistant model developed by LMSYS, trained by fine-tuning Llama 2 on user-shared conversations collected from ShareGPT. It is an auto-regressive language model based on the transformer architecture, designed to offer conversational capabilities comparable to commercial systems. The model is released under the Llama 2 Community License Agreement, which permits open access and use subject to its terms. Llama 2 gives it a strong foundation, and its fine-tuning on chat data makes it suitable for a wide range of dialogue-based applications.
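Because the model was fine-tuned on multi-turn conversations, prompts are normally rendered in a fixed chat format before generation. The sketch below assumes the "vicuna_v1.1" template from LMSYS's FastChat project as we understand it (system prompt, `USER:`/`ASSISTANT:` roles, a space after user turns, and `</s>` closing each assistant turn); the function name `build_vicuna_prompt` is hypothetical, and the exact strings should be checked against FastChat's `conversation.py` before use.

```python
# Sketch of a Vicuna-style chat prompt builder.
# ASSUMPTION: system prompt and separators mirror FastChat's "vicuna_v1.1"
# template as we understand it; verify against FastChat before relying on it.

SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_vicuna_prompt(turns, system=SYSTEM_PROMPT):
    """Render (user_message, assistant_reply) pairs into one prompt string.

    Pass None as the final assistant reply to leave the prompt open,
    so the model continues generating after "ASSISTANT:".
    """
    prompt = system + " "
    for user_msg, assistant_msg in turns:
        # User turns are followed by a single space separator.
        prompt += f"USER: {user_msg} "
        if assistant_msg is None:
            # Open slot for the model to complete.
            prompt += "ASSISTANT:"
        else:
            # Completed assistant turns end with the end-of-sequence token.
            prompt += f"ASSISTANT: {assistant_msg}</s>"
    return prompt


if __name__ == "__main__":
    history = [("Hi", "Hello! How can I help?"), ("What is Vicuna?", None)]
    print(build_vicuna_prompt(history))
```

Rendering the whole history on every turn keeps the model's view consistent with the format it saw during fine-tuning, at the cost of re-sending earlier turns each time.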

Parameters & Context Length of Vicuna 7B

Vicuna 7B has 7 billion parameters and a context length of 4k tokens. At 7B parameters it falls in the small-model category, which keeps inference fast and resource-efficient and suits simpler tasks. The 4k-token context window covers short to moderate conversations but can be limiting for long documents or extended chat histories. Together, these traits make the model usable for many applications without significant computational resources.

- Parameter Size: 7B

- Context Length: 4k
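With a 4k-token window, a long chat eventually has to drop older turns so that the history plus the reply still fits. The sketch below keeps the most recent turns within a budget of `context_length - max_new_tokens`. It assumes "4k" means 4,096 tokens (as in Llama 2), treats each turn as an already-rendered string, and uses a hypothetical `count_tokens` based on whitespace splitting; a real deployment would count with the model's own tokenizer, which usually yields more tokens than words, and would also subtract the system prompt's cost.

```python
# Sketch: trim chat history to fit a fixed context window.
# ASSUMPTIONS: 4k = 4,096 tokens; count_tokens is a crude hypothetical
# stand-in for the model's real tokenizer.

CONTEXT_LENGTH = 4096


def count_tokens(text):
    """Hypothetical token counter: whitespace word count (underestimates real tokens)."""
    return len(text.split())


def fit_history(turns, max_new_tokens=512, context_length=CONTEXT_LENGTH, count=count_tokens):
    """Return the newest contiguous turns whose total cost fits the budget.

    The budget reserves max_new_tokens for the model's reply.
    """
    budget = context_length - max_new_tokens
    kept = []
    used = 0
    # Walk from the newest turn backwards so recent context survives.
    for turn in reversed(turns):
        cost = count(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = ["word " * 2000, "word " * 1500, "word " * 1000]
    print(len(fit_history(history)))  # number of turns that still fit
```

Dropping whole turns from the oldest end is the simplest policy; summarizing old turns instead would preserve more information but requires an extra model call.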

Possible Intended Uses of Vicuna 7B

Vicuna 7B is designed for tasks that require conversational understanding and text generation. Possible uses include research on large language models, where its open availability could support work on training methods or model behavior; chatbot development, such as prototyping dialogue systems or improving response quality; and natural language processing studies, such as text analysis or language understanding. These uses remain speculative: the model's suitability for any specific task would need to be confirmed through rigorous testing.

- research on large language models

- development of chatbots

- natural language processing studies

Possible Applications of Vicuna 7B

Vicuna 7B's modest size and 4k-token context window make it a candidate for several applications. In research on large language models, its open availability could support experiments with training techniques or analyses of model behavior. In chatbot development, it could serve as a base for testing dialogue systems or improving response accuracy. In natural language processing studies, it could be applied to tasks such as text analysis or language understanding. None of these applications is validated here; each must be thoroughly evaluated and tested before use.

- research on large language models

- development of chatbots

- natural language processing studies
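One lightweight way to start the evaluation these applications call for is a smoke-test harness that sends fixed prompts to the model and checks replies for expected content. The sketch below is illustrative only: `generate` is a hypothetical callable standing in for whatever inference interface is used, and keyword matching is a crude proxy that cannot replace proper benchmarks or human review.

```python
# Sketch of a keyword-based smoke-test harness for a chat model.
# ASSUMPTION: `generate` is a hypothetical prompt -> reply function.
from typing import Callable, List, Tuple


def run_smoke_tests(generate: Callable[[str], str], cases: List[Tuple[str, List[str]]]) -> float:
    """Return the fraction of cases whose reply contains every expected keyword."""
    if not cases:
        return 0.0
    passed = 0
    for prompt, keywords in cases:
        reply = generate(prompt).lower()
        # Case-insensitive substring check for each required keyword.
        if all(k.lower() in reply for k in keywords):
            passed += 1
    return passed / len(cases)


if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        # Stand-in model used only to demonstrate the harness.
        return "Paris is the capital of France."

    cases = [("What is the capital of France?", ["Paris"]), ("What is 2 + 2?", ["4"])]
    print(run_smoke_tests(fake_model, cases))
```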

Quantized Versions & Hardware Requirements of Vicuna 7B

Vicuna 7B's q4 version, a mid-level quantization, requires a GPU with at least 16 GB of VRAM and 32 GB of system memory to run efficiently. It balances precision and performance, offering a compromise between speed and accuracy for medium-scale tasks without excessive resource demands. The model is available in the following versions:

- fp16, q2, q3, q4, q5, q6, q8
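A rough lower bound on memory for each version follows from simple arithmetic: weight bytes are parameters times bits per weight divided by 8, and a full context adds a key/value cache of 2 × layers × hidden size × bytes per value per token. The sketch assumes the nominal 7B parameter count, nominal bit widths (real quantized formats such as GGUF store extra scale data, so files run somewhat larger), and the Llama 2 7B shape of 32 layers with a hidden size of 4,096; it ignores activations and runtime overhead.

```python
# Back-of-the-envelope memory estimate for Vicuna 7B variants.
# ASSUMPTIONS: nominal 7e9 parameters; nominal bits per weight (no scale
# overhead); Llama 2 7B shape (32 layers, hidden size 4096); decimal GB.

PARAMS = 7e9

BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(quant, params=PARAMS):
    """Approximate weight storage in GB for a given quantization level."""
    return params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def kv_cache_gb(tokens, n_layers=32, hidden_size=4096, bytes_per_value=2):
    """Approximate KV-cache size in GB: keys and values for every layer and token."""
    return 2 * n_layers * hidden_size * bytes_per_value * tokens / 1e9


if __name__ == "__main__":
    for quant in BITS_PER_WEIGHT:
        print(f"{quant}: ~{weight_memory_gb(quant):.1f} GB of weights")
    print(f"fp16 KV cache at 4,096 tokens: ~{kv_cache_gb(4096):.2f} GB")
```

For q4 this gives about 3.5 GB of weights plus roughly 2.1 GB for a full 4,096-token fp16 KV cache, before runtime overhead, so the hardware figures above leave room for longer sessions, larger batches, or other workloads.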

Conclusion

Vicuna 7B is a 7B-parameter large language model with a 4k-token context window, developed by LMSYS and released under the Llama 2 Community License, which gives it open-source flexibility for research and development. It can support chatbot development, natural language processing studies, and model research, though each of these uses requires careful evaluation before deployment.

References

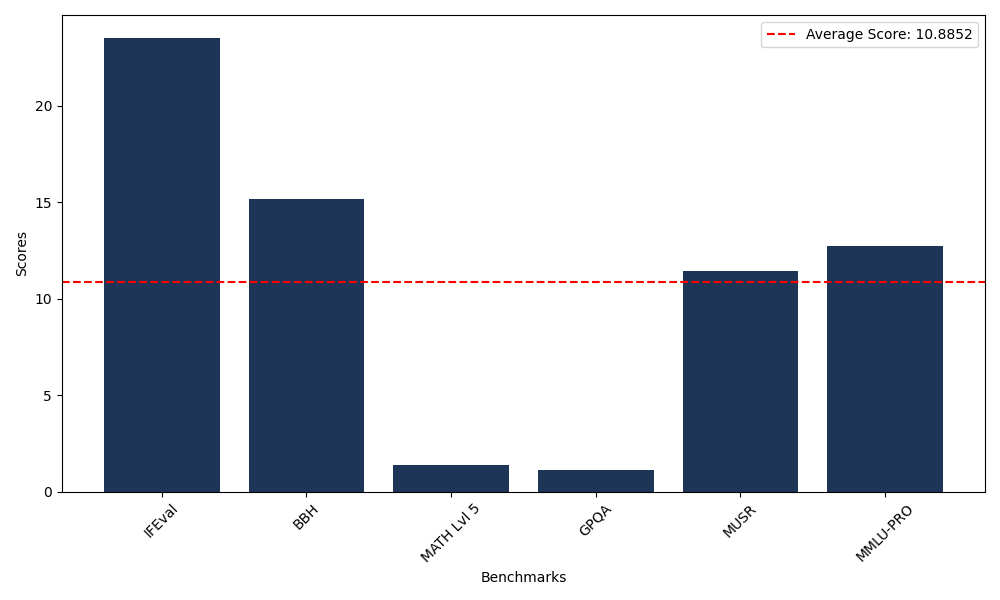

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 23.52 |
| BIG-Bench Hard (BBH) | 15.15 |
| MATH Competition Problems, Level 5 (MATH Lvl 5) | 1.36 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.12 |
| Multistep Soft Reasoning (MuSR) | 11.42 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 12.74 |