Wizard Math 13B - Model Details

Wizard Math 13B is a large language model with 13 billion parameters, developed by the WizardLM organization. It is designed for mathematical reasoning tasks; the scores reported for it are listed in the Benchmarks section. The model is released under the Microsoft Research License Terms (MSRLT), so any use must comply with that license's conditions. Its focus on mathematical problem-solving makes it a candidate for applications that need numerical and logical reasoning.

Description of Wizard Math 13B

WizardLM is a large language model fine-tuned from Llama-2 13B and designed to follow complex instructions. It is evaluated on benchmarks such as MT-Bench, AlpacaEval, and WizardLM Eval. It supports multi-turn conversations and is available in versions such as WizardLM-13B-V1.2 and WizardLM-30B-V1.0. Specialized variants include WizardCoder for coding tasks and WizardMath for mathematical problem-solving.

Parameters & Context Length of Wizard Math 13B

With 13B parameters, WizardLM sits in the mid-scale range, which balances capability against resource cost for tasks of moderate complexity. Its 4k context length is short: it suits concise inputs but limits how much text the model can process in one pass. Together, these specifications fit applications where hardware is constrained and interactions are brief.

- Name: WizardLM

- Parameter Size: 13b

- Context Length: 4k

- Implications: Mid-scale parameters for balanced performance, short context for efficient handling of brief tasks.
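
The practical effect of a 4k context can be illustrated with a rough budget check. The sketch below is not part of the model's tooling. It assumes that "4k" means 4096 tokens and that English text averages about four characters per token, a crude heuristic that stands in for the model's real tokenizer. The names `estimate_tokens` and `fits_in_context` are hypothetical.

```python
# Rough check of whether a prompt plus the requested output fits a 4k context.
CONTEXT_TOKENS = 4096  # assumption: "4k" read as 4096 tokens
CHARS_PER_TOKEN = 4    # assumption: crude average for English text, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, max_new_tokens: int,
                    context_tokens: int = CONTEXT_TOKENS) -> bool:
    """Return True if the estimated prompt tokens plus the output budget fit."""
    return estimate_tokens(prompt) + max_new_tokens <= context_tokens


if __name__ == "__main__":
    problem = "Solve for x: 3x + 7 = 22. Show each step."
    print(estimate_tokens(problem), fits_in_context(problem, max_new_tokens=512))
```

For an exact count, the estimate would need to be replaced with the tokenizer that ships with the model.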

Possible Intended Uses of Wizard Math 13B

WizardLM may suit scenarios that call for complex instruction following, multi-turn conversations, and domain-specific tasks. The WizardCoder variant targets programming assistance, and the WizardMath variants target mathematical problem-solving, which could benefit educational or analytical work. Neither use is confirmed here; each needs testing and careful evaluation before it is relied on in a specific context. The model’s flexibility also makes it a candidate for experimental or exploratory projects.

- Name: WizardLM

- Intended Uses: following complex instructions and multi-turn conversations, performing coding tasks with specialized variants like WizardCoder, solving mathematical problems with WizardMath variants

Possible Applications of Wizard Math 13B

Possible applications of WizardLM include complex instruction following, multi-turn conversations, and domain-specific tasks handled by the specialized variants WizardCoder and WizardMath. Coding support could help developers, and mathematical problem-solving could help in educational or analytical settings. These applications should be evaluated thoroughly against the specific needs and constraints of each project.

- Name: WizardLM

- Possible Applications: following complex instructions, multi-turn conversations, coding tasks with WizardCoder, mathematical problem-solving with WizardMath

Quantized Versions & Hardware Requirements of Wizard Math 13B

WizardLM’s q4 version, a medium-precision quantization, calls for a GPU with roughly 16–32 GB of VRAM, such as an RTX 3090, and a system with at least 32 GB of RAM for stable performance. This quantization balances precision and efficiency, making it suitable for mid-range hardware. Users should check their GPU’s VRAM and cooling capacity before running the model.

WizardLM supports the following quantized versions: fp16, q2, q3, q4, q5, q6, q8.
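
To compare the listed quantization levels, the memory taken by the weights alone can be approximated as parameters × bits per weight ÷ 8. The sketch below assumes nominal bit widths (for example, 4 bits for q4). Real quantized files usually carry extra per-block metadata, and inference also needs memory for the KV cache and activations, so these figures are lower bounds. They do not replace the hardware recommendation above.

```python
# Back-of-the-envelope weight memory for a 13B-parameter model.
PARAMS = 13_000_000_000

# Assumption: nominal bits per weight; actual quantized formats use slightly more.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gib(params: int, bits: int) -> float:
    """Memory for the weights alone, in GiB (1 GiB = 2**30 bytes)."""
    return params * bits / 8 / 2**30


def estimate_all(params: int = PARAMS) -> dict:
    """Map each quantization name to its estimated weight size in GiB."""
    return {name: round(weight_gib(params, bits), 2)
            for name, bits in NOMINAL_BITS.items()}


if __name__ == "__main__":
    for name, gib in estimate_all().items():
        print(f"{name:>5}: ~{gib} GiB for weights alone")
```

Under these assumptions, fp16 comes to about 24 GiB and q4 to about 6 GiB, which shows why lower-bit quantizations are the usual choice on consumer GPUs.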

Conclusion

WizardLM is a large language model with 13 billion parameters and a 4k context length, optimized for complex instruction following, multi-turn conversations, and specialized tasks like coding and mathematics through variants such as WizardCoder and WizardMath. Its design emphasizes versatility and performance, making it suitable for a range of applications while requiring careful evaluation for specific use cases.

References

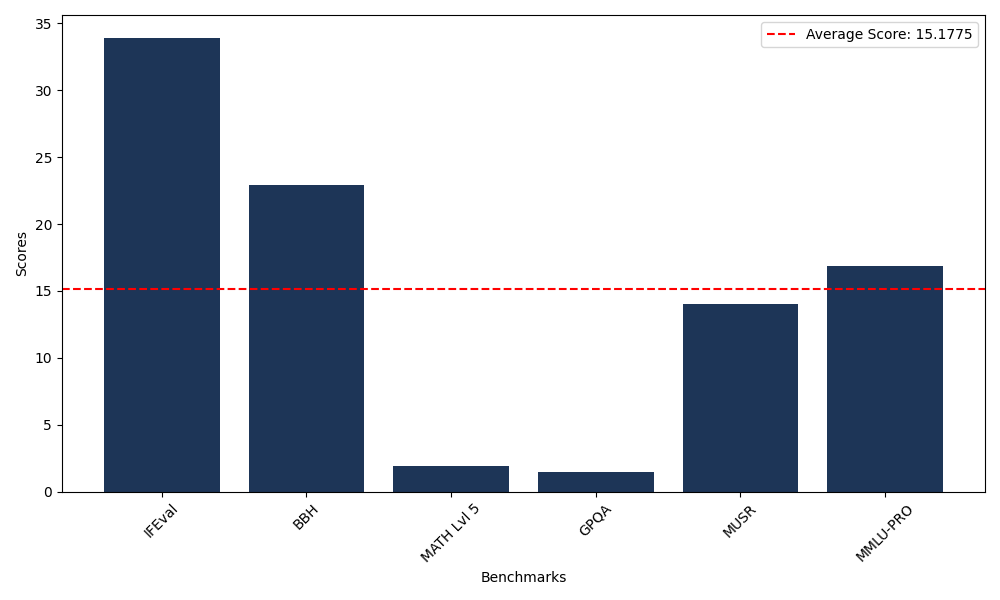

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 33.92 |
| Big Bench Hard (BBH) | 22.89 |
| Mathematical Reasoning Test (MATH Lvl 5) | 1.89 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.45 |
| Multistep Soft Reasoning (MuSR) | 14.03 |
| Massive Multitask Language Understanding (MMLU-PRO) | 16.88 |
