Yarn Mistral 7B - Model Details

Yarn Mistral 7B, developed by NousResearch, is a large language model with 7 billion parameters designed for long-context processing of up to 128k tokens. The model is maintained by NousResearch and focuses on handling extended sequences efficiently. Its open-source release allows flexible use and customization.

Description of Yarn Mistral 7B

Yarn Mistral 7B is a language model optimized for long-context tasks. It builds on Mistral-7B-v0.1, which was further pretrained on long-context data for 1,500 steps using the YaRN (Yet another RoPE extensioN) method to extend its context window to 128k tokens. The model targets scenarios that require deep contextual understanding of long inputs.
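To illustrate what "the YaRN extension method" changes, the sketch below recomputes rotary position embedding (RoPE) frequencies following the formulation in the YaRN paper: high-frequency dimensions are left alone, low-frequency dimensions are interpolated by the scale factor, and a linear ramp blends the two, plus an attention temperature factor. The configuration values are assumptions not stated in this card: Mistral-7B-v0.1's `rope_theta = 10000`, head dimension 128, an original 8,192-token window (so a 128k target implies a scale factor of 16), and the ramp bounds α = 1, β = 32 that the paper suggests for LLaMA-family models. It is a minimal illustration, not NousResearch's training code.

```python
import math

# Assumed configuration (not stated in the model card): Mistral-7B-v0.1 RoPE settings
# and a 16x scale factor to go from an 8,192-token window to 131,072 tokens.
ROPE_THETA = 10000.0
HEAD_DIM = 128
ORIGINAL_CTX = 8192
SCALE = 16.0
ALPHA, BETA = 1.0, 32.0  # ramp bounds suggested in the YaRN paper for LLaMA-family models


def yarn_frequencies(theta=ROPE_THETA, dim=HEAD_DIM, orig_ctx=ORIGINAL_CTX,
                     scale=SCALE, alpha=ALPHA, beta=BETA):
    """Return (original, adjusted) RoPE angular frequencies, one per dimension pair."""
    original, adjusted = [], []
    for d in range(dim // 2):
        freq = theta ** (-2 * d / dim)      # standard RoPE frequency for pair d
        wavelength = 2 * math.pi / freq
        r = orig_ctx / wavelength           # full rotations within the original window
        if r < alpha:
            gamma = 0.0                     # long wavelength: fully interpolate (divide by s)
        elif r > beta:
            gamma = 1.0                     # short wavelength: keep the original frequency
        else:
            gamma = (r - alpha) / (beta - alpha)  # linear ramp between the two regimes
        original.append(freq)
        adjusted.append((1 - gamma) * freq / scale + gamma * freq)
    return original, adjusted


def yarn_attention_scale(scale=SCALE):
    """YaRN attention temperature factor: sqrt(1/t) = 0.1 * ln(s) + 1."""
    return 0.1 * math.log(scale) + 1.0


if __name__ == "__main__":
    orig, adj = yarn_frequencies()
    print(f"highest-frequency pair: {orig[0]:.4f} -> {adj[0]:.4f}")
    print(f"lowest-frequency pair shrink ratio: {orig[-1] / adj[-1]:.1f}")
    print(f"attention scale: {yarn_attention_scale():.4f}")
```

The ramp is why YaRN is described as interpolating "by parts": dimensions that rotate many times inside the original window already generalize to longer positions, so only the slow-rotating ones are stretched.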

Parameters & Context Length of Yarn Mistral 7B

Yarn Mistral 7B has 7B parameters, which places it in the small-to-mid-scale range: it runs faster and needs less memory than larger models, making it suited to tasks of moderate complexity. Its 128k-token context length lets it process long documents, although long inputs require considerably more memory and compute than short ones. Together, the compact parameter count and the large context window make it a practical choice for applications that need both efficiency and long-context understanding.

- Parameter size: 7B

- Context length: 128k
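Much of the extra cost of a long context comes from the attention key/value (KV) cache, which grows linearly with sequence length. The sketch below estimates it for a single sequence. The architecture numbers are assumptions taken from Mistral-7B-v0.1 (32 layers, 8 grouped-query KV heads, head dimension 128) and are not stated in this card; it also assumes an fp16 cache and that every token's keys and values are kept, with no sliding-window truncation.

```python
# Assumed Mistral-7B-v0.1 architecture values (not stated in the model card).
NUM_LAYERS = 32
NUM_KV_HEADS = 8      # grouped-query attention
HEAD_DIM = 128
BYTES_PER_VALUE = 2   # fp16/bf16 cache entries


def kv_cache_bytes(num_tokens, layers=NUM_LAYERS, kv_heads=NUM_KV_HEADS,
                   head_dim=HEAD_DIM, bytes_per_value=BYTES_PER_VALUE):
    """Bytes needed to cache keys and values for num_tokens tokens (batch size 1)."""
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value  # factor 2 = keys + values
    return per_token * num_tokens


def gib(n_bytes):
    """Convert bytes to GiB."""
    return n_bytes / 2**30


if __name__ == "__main__":
    for ctx in (8_192, 32_768, 131_072):
        print(f"{ctx:>7} tokens -> {gib(kv_cache_bytes(ctx)):.1f} GiB of KV cache")
```

Under these assumptions each token costs 128 KiB of cache, so a full 131,072-token sequence needs 16 GiB for the cache alone, on top of the model weights.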

Possible Intended Uses of Yarn Mistral 7B

With 7B parameters and a 128k-token context length, Yarn Mistral 7B may be suitable for text generation, code generation, and language translation. Its ability to process long sequences could help with writing detailed narratives, assisting with programming tasks, or translating complex multilingual content. These uses still need further evaluation to confirm that the model is effective and meets specific requirements, particularly where long-context understanding is critical.

- text generation

- code generation

- language translation

Possible Applications of Yarn Mistral 7B

With 7B parameters and a 128k-token context length, Yarn Mistral 7B could serve tasks that require extended contextual understanding. Potential applications include generating long creative texts, assisting with coding workflows, translating complex multilingual content, and supporting interactive storytelling; educational content creation and collaborative writing are further candidates. All of these could benefit from the model's ability to handle long sequences, but each should be tested rigorously against specific needs before deployment.

- text generation

- code generation

- language translation

- interactive storytelling

Quantized Versions & Hardware Requirements of Yarn Mistral 7B

The medium q4 version of Yarn Mistral 7B balances precision and performance; it requires a GPU with at least 16GB of VRAM and a system with 32GB of RAM to run efficiently. Because this quantized variant uses less memory than higher-precision formats, it can be deployed on mid-range hardware while keeping inference reasonably fast. It suits tasks that need long context without excessive computational demands.

Available versions: fp16, q2, q3, q4, q5, q6, q8
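The memory saved by each version can be approximated as parameter count × bits per weight ÷ 8. The sketch below applies that formula to the listed versions. It assumes roughly 7.24 billion parameters (the size of Mistral-7B-v0.1; this card only says "7B") and uses nominal bit widths; real quantization formats also store per-block scale factors, so actual files are somewhat larger, and the figures cover weights only, not the KV cache or runtime overhead.

```python
# Approximate parameter count for Mistral-7B-v0.1 (an assumption; the card only says "7B").
NUM_PARAMS = 7.24e9

# Nominal bits per weight for each listed version. Actual quantized files are
# larger because formats also store per-block scales and metadata.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gib(bits, params=NUM_PARAMS):
    """Lower-bound size of the model weights alone, in GiB."""
    return params * bits / 8 / 2**30


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: ~{weight_gib(bits):5.1f} GiB (weights only)")
```

By this estimate the q4 weights take roughly a quarter of the fp16 footprint; the remaining headroom in the stated hardware requirements goes to the KV cache, activations, and runtime overhead, which grow with context length.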

Conclusion

Yarn Mistral 7B, developed by NousResearch, is a large language model with 7B parameters and a 128k-token context length, optimized for long-context processing. Its architecture supports efficient handling of extended sequences, making it suitable for tasks that require deep contextual understanding.

References

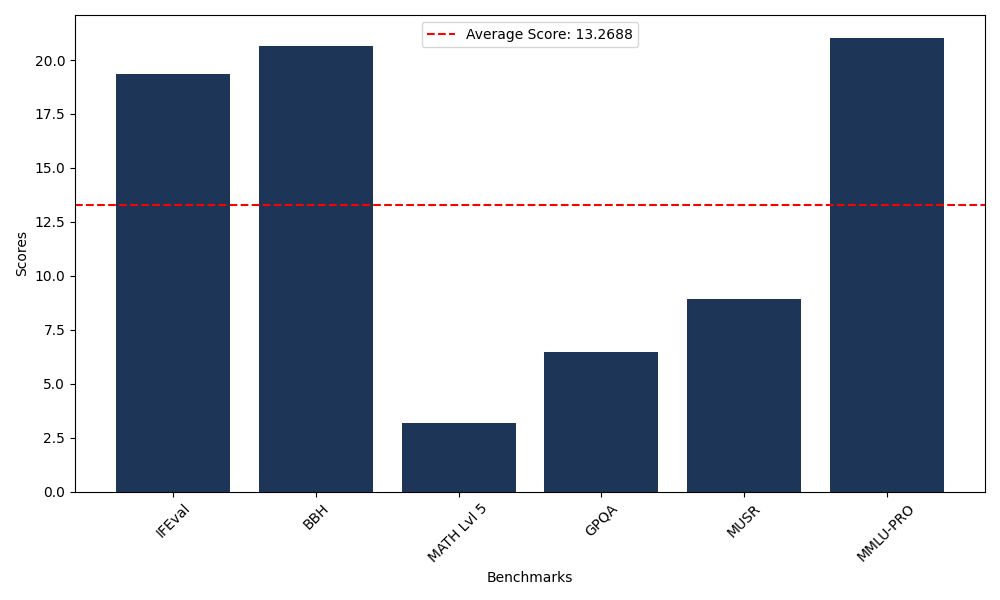

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 19.34 |
| BIG-Bench Hard (BBH) | 20.63 |
| MATH Level 5 (MATH Lvl 5) | 3.17 |
| Graduate-Level Google-Proof Q&A (GPQA) | 6.49 |
| Multistep Soft Reasoning (MuSR) | 8.95 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 21.03 |
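A single summary number can be derived from the benchmark table with an unweighted mean. This benchmark set matches the Open LLM Leaderboard (v2) suite, and the scores appear to be on its normalized scale, but that is an assumption; the sketch below simply averages the six reported values and does not reproduce any official aggregation.

```python
# Scores copied from the benchmark table; the unweighted mean is an illustrative summary.
SCORES = {
    "IFEval": 19.34,
    "BBH": 20.63,
    "MATH Lvl 5": 3.17,
    "GPQA": 6.49,
    "MuSR": 8.95,
    "MMLU-PRO": 21.03,
}

average = sum(SCORES.values()) / len(SCORES)
print(f"Unweighted mean of {len(SCORES)} benchmarks: {average:.2f}")
```

An unweighted mean treats every benchmark equally, so the very low MATH Lvl 5 and GPQA scores pull the summary well below the model's IFEval, BBH, and MMLU-PRO results.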
