Yi 6B - Model Details

Yi 6B is a large language model developed by 01.AI, a company specializing in artificial intelligence research and applications. The model has 6 billion parameters and is designed as a bilingual English-Chinese model, trained on a multilingual corpus of 3 trillion tokens. It is released under several licenses: the Apache License 2.0, the Yi Series Models Community License Agreement, and the Yi Series Models License Agreement.

Description of Yi 6B

Yi series models are next-generation open-source large language models developed by 01.AI, designed as bilingual English-Chinese models trained on a 3 trillion token multilingual corpus. They excel in language understanding, commonsense reasoning, reading comprehension, and coding. The Yi-34B-Chat model ranks second to GPT-4 Turbo on AlpacaEval, while Yi-34B leads open-source models in English and Chinese benchmarks. The models use the Transformer architecture but are not derivatives of Llama, relying on proprietary training datasets and infrastructure. Their open-source nature and strong performance make them a significant contribution to multilingual AI research.

Parameters & Context Length of Yi 6B

Yi 6B has 6 billion parameters and a 200,000-token context length. The parameter count places it among smaller models, which suits resource-constrained deployments, while the very long context window lets it process extensive texts. The two properties pull in different directions: the weights themselves are comparatively cheap to run, but filling the full context window requires significant memory and compute.

- Name: Yi 6B

- Parameter Size: 6B

- Context Length: 200k

- Implications: Small-scale model for resource-efficient tasks; very long context for extensive text processing, which becomes resource-intensive as the context fills.
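To make the cost of a long context concrete, the following is a minimal sketch that estimates the size of a Transformer key/value cache as the sequence grows. The layer count, number of key/value heads, and head dimension are illustrative assumptions, not figures from this page; substitute the values from the model's published configuration before relying on the result.

```python
# Rough key/value cache estimate for a decoder-only Transformer.
# ASSUMPTION: the architecture values below are placeholders for
# illustration only; they are not taken from this page.

ASSUMED_LAYERS = 32      # hypothetical number of Transformer layers
ASSUMED_KV_HEADS = 4     # hypothetical number of key/value heads
ASSUMED_HEAD_DIM = 128   # hypothetical dimension of each head


def kv_cache_gib(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Return the KV cache size in GiB for one sequence.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 corresponds to 16-bit storage.
    """
    total_bytes = 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value
    return total_bytes / (1024 ** 3)


if __name__ == "__main__":
    for tokens in (4_000, 32_000, 200_000):
        size = kv_cache_gib(tokens, ASSUMED_LAYERS, ASSUMED_KV_HEADS, ASSUMED_HEAD_DIM)
        print(f"{tokens:>7} tokens: ~{size:.2f} GiB of KV cache")
```

The cache grows linearly with the number of tokens, so under these assumptions a full 200,000-token context needs roughly fifty times the cache memory of a 4,000-token one, in addition to the memory for the weights.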

Possible Intended Uses of Yi 6B

Yi 6B is a bilingual English-Chinese model with 6 billion parameters and a 200,000-token context length, which makes it a candidate for coding, math, and reasoning applications. Possible uses include generating code snippets, solving mathematical problems, and performing logical reasoning in either language. Support for both English and Chinese also opens the door to cross-lingual tasks, but further exploration is needed to confirm its effectiveness there. Applications might extend to educational tools, research assistance, or creative writing, though these scenarios require rigorous testing. The model's emphasis on resource efficiency and long-context handling could benefit tasks that involve extensive text analysis or multi-step reasoning.

- Name: Yi 6B

- Intended Uses: coding, math, reasoning

- Supported Languages: english, chinese

- Is_Mono_Lingual: no

Possible Applications of Yi 6B

Yi 6B is a bilingual English-Chinese model with 6 billion parameters and a 200,000-token context length. Possible applications include specialized coding, mathematical problem-solving, and logical reasoning, for example generating code from prompts in either language, assisting with complex mathematical derivations, or supporting multi-step reasoning tasks. The long-context capability could enable handling of extended texts or intricate workflows, though these uses require thorough validation. Educational tools and creative writing are further candidates, but effectiveness in those areas remains to be confirmed through rigorous testing.

- Name: Yi 6B

- Possible Applications: coding, math, reasoning

- Supported Languages: english, chinese

- Context Length: 200k

Quantized Versions & Hardware Requirements of Yi 6B

Yi 6B’s q4 version, a mid-level quantization, is listed as needing a GPU with at least 16GB VRAM for efficient operation, which places it within reach of mid-range graphics cards in the 12GB–24GB class. This quantization trades some precision for lower memory use and faster inference. A system with at least 32GB RAM is recommended, along with adequate cooling and power supply to handle the workload.

- Quantized Versions: fp16, q2, q3, q4, q5, q6, q8

- Name: Yi 6B

- Hardware Requirements: 16GB+ VRAM, 32GB+ RAM, adequate cooling and power supply
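As a rough cross-check on the quantization levels listed above, the sketch below estimates the memory needed for the weights alone at each level. It assumes nominal bit widths (for example, 4 bits per weight for q4); real quantized files add scaling metadata, and inference also needs memory for activations and the context cache, so treat these numbers as lower bounds rather than requirements.

```python
# Weight-only memory estimate per quantization level.
# ASSUMPTION: nominal bits per weight; actual quantized formats carry
# extra per-block metadata, so real files are somewhat larger.

NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gib(num_params, bits_per_weight):
    """Return the memory needed to hold the weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


def estimate_all(num_params=6e9):
    """Map each quantization name to its estimated weight size in GiB."""
    return {
        name: round(weight_memory_gib(num_params, bits), 2)
        for name, bits in NOMINAL_BITS.items()
    }


if __name__ == "__main__":
    for name, gib in estimate_all().items():
        print(f"{name:>4}: ~{gib} GiB for weights")
```

Under these assumptions the weights range from about 11 GiB at fp16 down to under 3 GiB at q4, which suggests the listed VRAM figure leaves headroom for activations and a long context rather than for the weights themselves.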

Conclusion

Yi 6B is a large language model developed by 01.AI, with 6 billion parameters and a 200,000-token context length. It is a bilingual English-Chinese model released under the Apache License 2.0 and the Yi Series Models license agreements. It is intended for applications in coding, math, and reasoning, and its suitability for any specific task should be confirmed through further evaluation.

References

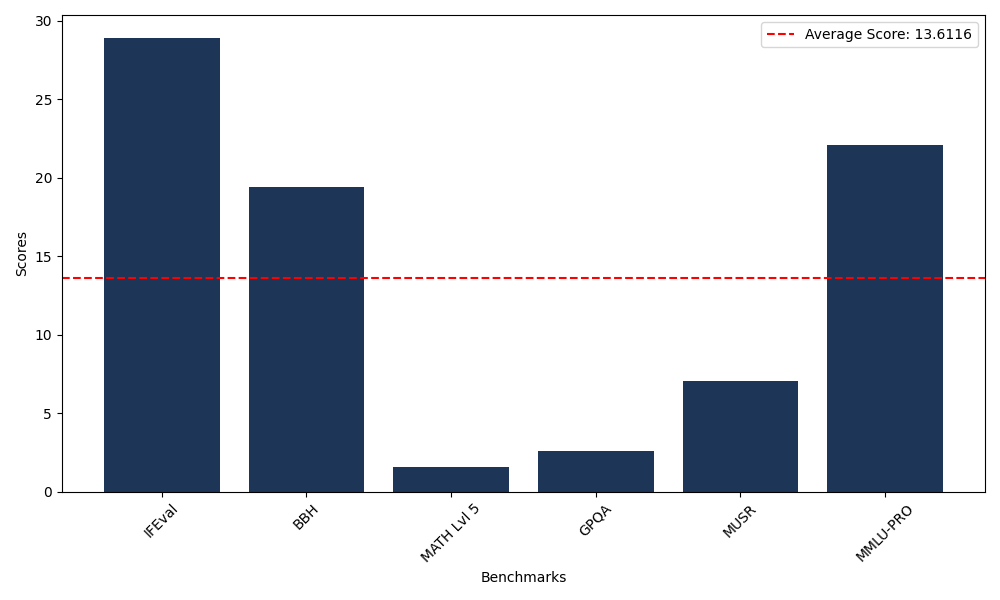

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 28.93 |
| BIG-Bench Hard (BBH) | 19.41 |
| MATH, Level 5 problems (MATH Lvl 5) | 1.59 |
| Graduate-Level Google-Proof Q&A (GPQA) | 2.57 |
| Multistep Soft Reasoning (MuSR) | 7.04 |
| Massive Multitask Language Understanding (MMLU-PRO) | 22.12 |
