Zephyr 7B - Model Details

Zephyr 7B is a large language model developed by Hugging Face, a company known for its contributions to the AI community. With 7B parameters, it is the smallest member of the Zephyr series of fine-tuned assistant models, which ranges in size from 7B to 141B parameters. The model is released under the Apache License 2.0 (Apache-2.0) and the MIT License (MIT), both of which allow research and commercial use. Its design emphasizes versatility and efficiency across a wide range of applications.

Description of Zephyr 7B

Zephyr 7B is a 7B parameter GPT-like model fine-tuned on a mix of publicly available and synthetic datasets. It was initially trained on UltraChat and then aligned with UltraFeedback, which makes it suitable for chat applications. However, it has not been aligned for safety with techniques such as RLHF, so it may produce problematic outputs. The model emphasizes conversational capability, and its output should be reviewed or filtered before it reaches end users.

Parameters & Context Length of Zephyr 7B

Zephyr 7B is a 7B parameter model with a 4K context length, placing it in the small to mid-scale category of open-source LLMs. At 7B parameters it is resource-efficient and suited to tasks where speed and simplicity matter, though it may not match the capability of larger models. Its 4K context length handles moderate-length inputs but limits its usefulness for long documents or long conversations, so the prompt and the generated reply must together fit within that window. These characteristics suit applications that prioritize efficiency over extensive contextual understanding.

- Name: Zephyr 7B

- Parameter Size: 7B

- Context Length: 4K

- Implications: Balances efficiency and capability for simpler tasks but has limitations in handling long contexts or highly complex operations.
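One way to manage input length in a chat setting is to drop the oldest turns until the conversation fits the window. The sketch below assumes that "4K" means 4096 tokens and uses a hypothetical `rough_token_count` helper (about four characters per token) in place of the model's real tokenizer; both are illustrative assumptions, not part of the model's documentation.

```python
from typing import Callable, Dict, List

CONTEXT_LENGTH = 4096  # assumption: "4K" is taken to mean 4096 tokens


def rough_token_count(text: str) -> int:
    """Hypothetical stand-in for a real tokenizer: about 4 characters per token."""
    return max(1, len(text) // 4)


def trim_history(
    messages: List[Dict[str, str]],
    reserve_for_reply: int = 512,
    count_tokens: Callable[[str], int] = rough_token_count,
    context_length: int = CONTEXT_LENGTH,
) -> List[Dict[str, str]]:
    """Return the most recent messages that fit in the context window.

    `reserve_for_reply` tokens are held back for the generated answer.
    """
    budget = context_length - reserve_for_reply
    kept: List[Dict[str, str]] = []
    used = 0
    # Walk backwards so the newest turns are considered first.
    for message in reversed(messages):
        cost = count_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

For real use, pass a `count_tokens` function backed by the model's own tokenizer, since the character-based estimate can be far off for code or non-English text.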

Possible Intended Uses of Zephyr 7B

Zephyr 7B is designed for chat applications, text generation, and code generation, which covers scenarios such as conversational interfaces, creative writing, and programming assistance. Its 4K context length and 7B parameter size make it a reasonable choice where efficiency and moderate complexity are the priority, though its limited alignment and short context may restrict its effectiveness in extended or highly nuanced interactions. Uses such as supporting dialogue systems or automating content creation should be investigated thoroughly to confirm that the model meets the specific requirements. The model’s open-source nature suggests it can be adapted across domains, but users should evaluate its suitability for their own needs.

- Intended Uses: chat applications

- Intended Uses: text generation

- Intended Uses: code generation
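For chat use, the conversation has to be serialized into the prompt layout the model was fine-tuned on. The sketch below assumes the layout published on the upstream Zephyr model card (role tags such as `<|user|>` and an `</s>` end-of-sequence token); the function name `format_zephyr_prompt` is hypothetical, and the layout should be checked against the chat template shipped with the tokenizer before relying on it.

```python
from typing import Dict, List

EOS = "</s>"  # assumption: end-of-sequence token used by the chat layout
ALLOWED_ROLES = {"system", "user", "assistant"}


def format_zephyr_prompt(messages: List[Dict[str, str]]) -> str:
    """Serialize chat messages into an assumed Zephyr-style prompt string."""
    parts = []
    for message in messages:
        role = message["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role}")
        # Each turn is a role tag on its own line, then the text and the EOS token.
        parts.append(f"<|{role}|>\n{message['content']}{EOS}\n")
    # A trailing assistant tag asks the model to produce the next reply.
    parts.append("<|assistant|>\n")
    return "".join(parts)


prompt = format_zephyr_prompt(
    [
        {"role": "system", "content": "You are a concise assistant."},
        {"role": "user", "content": "Write a haiku about wind."},
    ]
)
```

In practice a tokenizer-provided chat template is the safer route, because it stays in sync with the exact tokens the model expects.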

Possible Applications of Zephyr 7B

With 7B parameters and a 4K context length, Zephyr 7B is a candidate for chat applications, text generation, code generation, and content creation. Its chat-oriented fine-tuning suits conversational interfaces and automated writing tasks, though its limited alignment and short context may affect performance. Creative and technical work could benefit from its efficiency, and it might also be adapted for educational tools or collaborative workflows, but its effectiveness depends on the specific use case. Each application should be thoroughly evaluated and tested before deployment.

- Intended Uses: chat applications

- Intended Uses: text generation

- Intended Uses: code generation

- Intended Uses: content creation

Quantized Versions & Hardware Requirements of Zephyr 7B

The q4 (medium) quantized version of Zephyr 7B calls for a GPU with at least 16GB of VRAM and 32GB of system memory for optimal performance, which makes it suitable for mid-scale deployments. This quantization level trades some precision for a smaller memory footprint, so the model can run on consumer-grade hardware while keeping reasonable accuracy. Adequate cooling and a power supply rated for the GPU are also needed.

Quantized Versions: fp16, q2, q3, q4, q5, q6, q8
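To compare the listed versions, the weight storage can be approximated as parameters times bits per weight divided by eight. The sketch below assumes nominal bit widths for each label (for example 4 bits for q4) and exactly 7e9 parameters; real quantized files also store scaling metadata, and inference needs extra memory for activations and the attention cache, so these figures are lower bounds for the weights alone.

```python
# Assumption: each label maps to its nominal bit width per weight.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def estimate_all(num_params: float = 7e9) -> dict:
    """Estimate weight storage for every listed quantization level."""
    return {name: weight_size_gb(num_params, bits) for name, bits in NOMINAL_BITS.items()}


if __name__ == "__main__":
    for name, size in estimate_all().items():
        print(f"{name:>4}: about {size:.2f} GB of weights")
```

Under these assumptions the q4 weights come to roughly a quarter of the fp16 size, which is the main reason lower-bit versions fit on smaller GPUs.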

Conclusion

Zephyr 7B is a 7B parameter model developed by Hugging Face for chat applications, text generation, and code generation. It has a 4K context length and is available in multiple quantized versions (fp16, q2, q3, q4, q5, q6, q8) to fit different hardware. It is released under the Apache-2.0 and MIT licenses, which allow research and commercial use, and it should be evaluated carefully for each specific task.

References

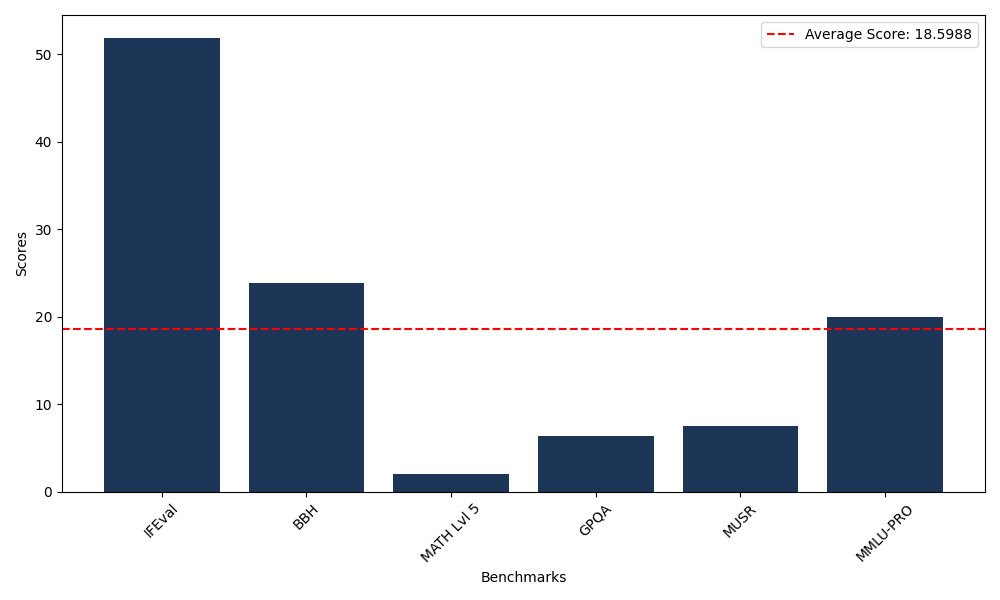

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 51.91 |
| BIG-Bench Hard (BBH) | 23.89 |
| MATH, Level 5 problems (MATH Lvl 5) | 1.96 |
| Graduate-Level Google-Proof Q&A (GPQA) | 6.38 |
| Multistep Soft Reasoning (MuSR) | 7.50 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 19.95 |
